Testing Guardrails

Testing guardrails confirms that they catch real risks while keeping false positives low. This guide explains the testing workflows available in Agent Ops Director.

Understanding Guardrail Testing

Guardrail testing allows you to:

- Verify detection rules are working as expected

- Identify false positives before deploying to production

- Measure detection accuracy and performance

- Create reproducible test cases for guardrail validation

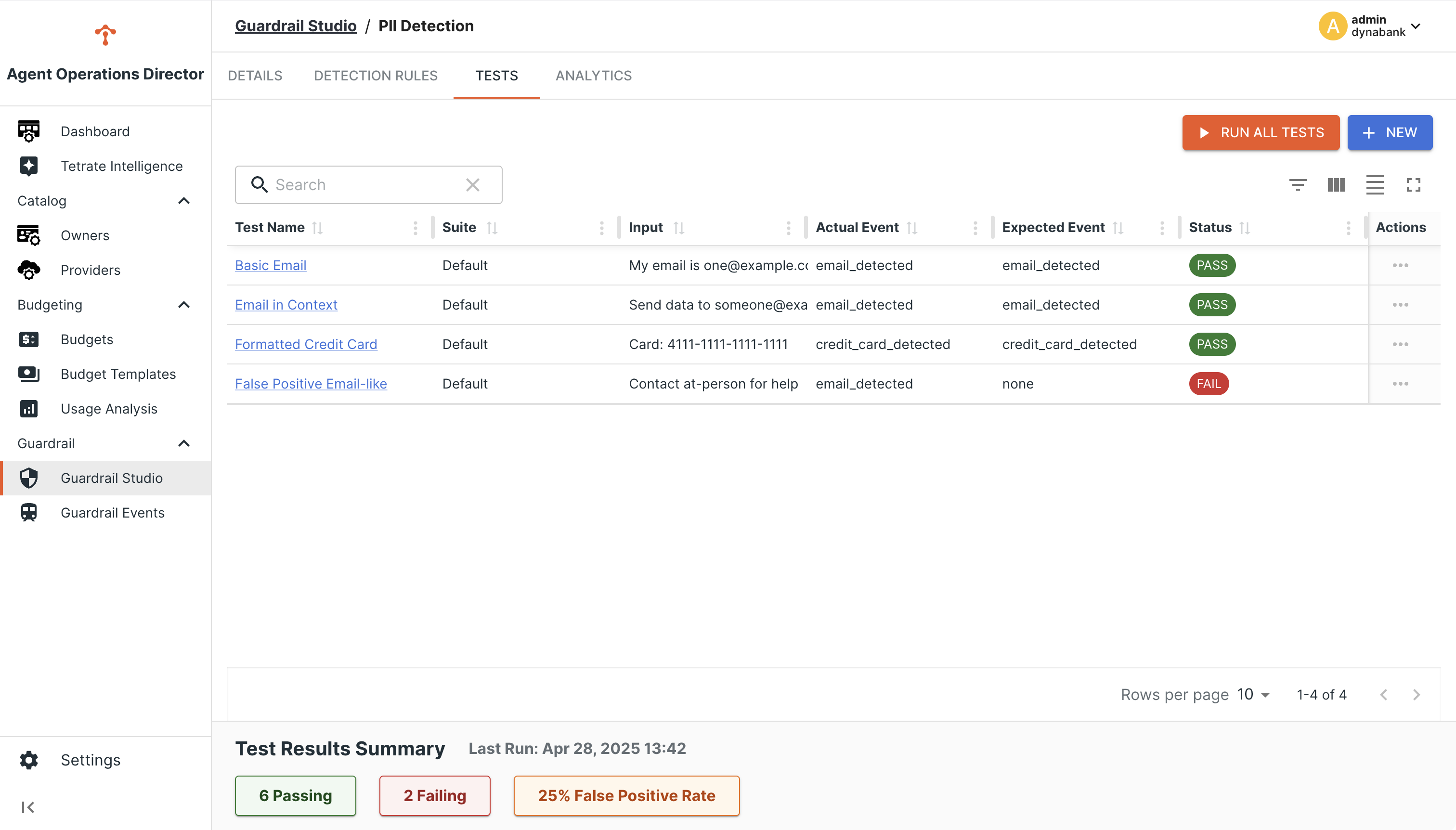

The Tests Tab Interface

Each guardrail in Agent Ops Director has a dedicated testing interface. To open it:

- Navigate to the Guardrail Studio section

- Select the guardrail you want to test

- Click the Tests tab to access the testing interface

Creating Test Cases

To create a test case:

- Click + Add Test Case in the Tests tab

- Configure the test case:

  - Test Name: A descriptive name for the test

  - Test Input: The sample content to test against the guardrail

  - Expected Event: The event you expect the guardrail to trigger (or "none" if no event should trigger)

- Click Save to add the test case

Consider creating both positive test cases (should trigger the guardrail) and negative test cases (should not trigger the guardrail).
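
The three fields above map naturally onto a small record. The following is a minimal sketch in Python for drafting test cases before entering them in the Tests tab. It is not Agent Ops Director's own format or API: the `GuardrailTestCase` class, its field names, and the event name `pii_detected` are hypothetical and chosen only to show a positive and a negative case side by side.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GuardrailTestCase:
    """Hypothetical local mirror of the three fields in the Tests tab."""
    name: str            # Test Name
    test_input: str      # Test Input
    expected_event: str  # Expected Event, or "none" if nothing should trigger


# Positive case: the guardrail should trigger an event.
positive = GuardrailTestCase(
    name="Flags an email address",
    test_input="Contact me at jane.doe@example.com",
    expected_event="pii_detected",  # hypothetical event name
)

# Negative case: the guardrail should stay silent.
negative = GuardrailTestCase(
    name="Ignores a plain greeting",
    test_input="Hello, how can I help you today?",
    expected_event="none",
)

suite = [positive, negative]
```

Keeping at least one negative case per rule makes false positives visible as soon as a rule becomes too broad.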

Running Tests

You can run tests individually or as a batch:

- Single Test: Click the Run button next to a specific test case

- All Tests: Click the Run All Tests button to execute all test cases for the guardrail

After a run, each test case shows one of two results:

- PASS: The actual event matched the expected event

- FAIL: The actual event didn't match the expected event
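
The PASS/FAIL rule is a plain equality check between the expected and the actual event. The sketch below illustrates that comparison locally. The `toy_guardrail` function, its email pattern, and the event name `pii_detected` are assumptions standing in for a real detection rule; they do not reflect how Agent Ops Director evaluates guardrails internally.

```python
import re

# Hypothetical detection rule: a simple email-like pattern.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def toy_guardrail(text: str) -> str:
    """Stand-in rule: report an event if the text contains an email address."""
    return "pii_detected" if EMAIL_PATTERN.search(text) else "none"


def run_test(test_input: str, expected_event: str) -> str:
    """Return "PASS" when the actual event matches the expected one, else "FAIL"."""
    actual_event = toy_guardrail(test_input)
    return "PASS" if actual_event == expected_event else "FAIL"


print(run_test("Contact me at jane.doe@example.com", "pii_detected"))  # PASS
print(run_test("Hello, how can I help you today?", "none"))            # PASS
print(run_test("Reach us @ the front desk", "pii_detected"))           # FAIL
```

The third call fails because the stand-in rule sees no email address, so the actual event is "none" while the test expected `pii_detected`.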

Interpreting Test Results

The test results provide valuable insights:

- Test Summary: Shows passing and failing tests at a glance

- False Positive Rate: The percentage of test cases that triggered an event when none was expected

- Expected vs. Actual: For each test, compare what was expected with what actually happened

Use these insights to refine your detection rules for better accuracy.
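
To make these figures concrete, the sketch below computes a summary from a list of expected and actual events. The exact formula the product uses is not stated here, so the code makes two labeled assumptions: a false positive is a test that expected "none" but triggered an event, and the rate divides by the total number of test cases. The `Result` type, the `summarize` function, and the sample data are hypothetical.

```python
from typing import NamedTuple


class Result(NamedTuple):
    name: str
    expected: str  # expected event, or "none"
    actual: str    # event actually triggered, or "none"


def summarize(results: list[Result]) -> dict:
    """Count passes, failures, false positives, and missed detections."""
    total = len(results)
    passed = sum(r.expected == r.actual for r in results)
    # Assumption: a false positive expected "none" but triggered an event.
    false_positives = sum(
        r.expected == "none" and r.actual != "none" for r in results
    )
    # A missed detection expected an event but triggered nothing.
    missed = sum(r.expected != "none" and r.actual == "none" for r in results)
    return {
        "passed": passed,
        "failed": total - passed,
        # Assumption: the rate is taken over all test cases.
        "false_positive_rate": false_positives / total if total else 0.0,
        "missed_detections": missed,
    }


results = [
    Result("Flags an email address", "pii_detected", "pii_detected"),
    Result("Ignores a plain greeting", "none", "none"),
    Result("Ignores a version string", "none", "pii_detected"),
    Result("Flags an obfuscated email", "pii_detected", "none"),
]
summary = summarize(results)
print(summary)
```

Separating false positives from missed detections shows which direction a rule needs to move: tighter in the first case, broader in the second.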

Best Practices for Testing

- Test Edge Cases: Include unusual but valid inputs that might cause false positives

- Include Real-World Examples: Use anonymized examples from your actual usage

- Maintain Test Coverage: Update test cases when adding or modifying rules

- Retest Regularly: Periodically rerun tests to confirm the guardrail still behaves as expected

- Document Test Cases: Add clear descriptions of what each test is validating

Using Test Results to Improve Guardrails

After testing:

- Review all failing tests to understand why they failed

- Update detection rules to address false positives or missed detections

- Add test cases to cover newly discovered edge cases

- Rerun tests to verify improvements
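
The loop above (review failures, update the rule, rerun) can be sketched as follows. Both rules and the test suite are hypothetical illustrations written in Python, not Agent Ops Director rule syntax: `rule_v1` is a deliberately broad first draft, and `rule_v2` is a refinement made after reviewing the failing test.

```python
import re
from typing import Callable

# Hypothetical test suite: (name, input, expected event).
SUITE = [
    ("Flags an email address", "Contact jane.doe@example.com", "pii_detected"),
    ("Ignores a bare @ sign", "Meet @ 5pm in the lobby", "none"),
]


def rule_v1(text: str) -> str:
    """First draft: any '@' counts as an email address. Too broad."""
    return "pii_detected" if "@" in text else "none"


def rule_v2(text: str) -> str:
    """Refined rule: require text on both sides of '@' and a dotted domain."""
    pattern = r"[\w.+-]+@[\w-]+\.[\w.-]+"
    return "pii_detected" if re.search(pattern, text) else "none"


def failing_tests(rule: Callable[[str], str]) -> list[str]:
    """Return the names of test cases whose actual event differs from the expected one."""
    return [name for name, text, expected in SUITE if rule(text) != expected]


print(failing_tests(rule_v1))  # ['Ignores a bare @ sign']
print(failing_tests(rule_v2))  # []
```

Rerunning the same suite against the refined rule confirms that the false positive is gone and that the original detection still works.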

Next Steps

- Learn how to monitor guardrail analytics in production

- Understand how to handle false positives when they occur

- Explore how to customize guardrails for your organization's needs