Prepare the Cluster

The Platform Owner ("Platform") will prepare a cluster with the following steps:

Deploy TSE/TSB

Begin by deploying TSE or TSB, and onboarding the intended Workload clusters.

Enable Strict (Zero-Trust) Security

Configure the platform to follow 'require-mTLS' and 'deny-all' Zero-Trust security policies.

Create Kubernetes Namespaces

Create and label the namespaces in each cluster that will be used by the App Owner to host services and applications.

Create Tetrate Workspaces

Create the Tetrate Workspaces and associated configuration that will be used to manage the behavior of services within the namespaces.

Deploy Ingress Gateways

Where needed, deploy Ingress Gateways in Workspaces that will host services that should be published for external access.

Enable GitOps Integration

Enable GitOps integration so that the Application Owner users can interact with the platform without needing Tetrate privileged access.

Enable Additional Integrations

Enable any other integrations that your platform needs, such as cloud-provider load balancer and DNS controllers.

Platform: Deploy TSE/TSB

Follow the product instructions to deploy the TSE or TSB management plane, and then to onboard the intended Workload clusters.

Make sure to install the required add-ons and meet the necessary prerequisites.

Platform: Enable Strict Security

You should use TSE/TSB to configure your platform to function in a zero-trust manner. Specifically:

- All communications between components and app-owner services are secured using mTLS. This means that third parties, such as other services in the cluster or anyone with access to the data path, cannot read transactions, modify transactions, or impersonate clients or services.

- All communication is denied by default. The platform owner must explicitly open up the required communication paths, so only explicitly allowed communications are permitted.

Strict Security


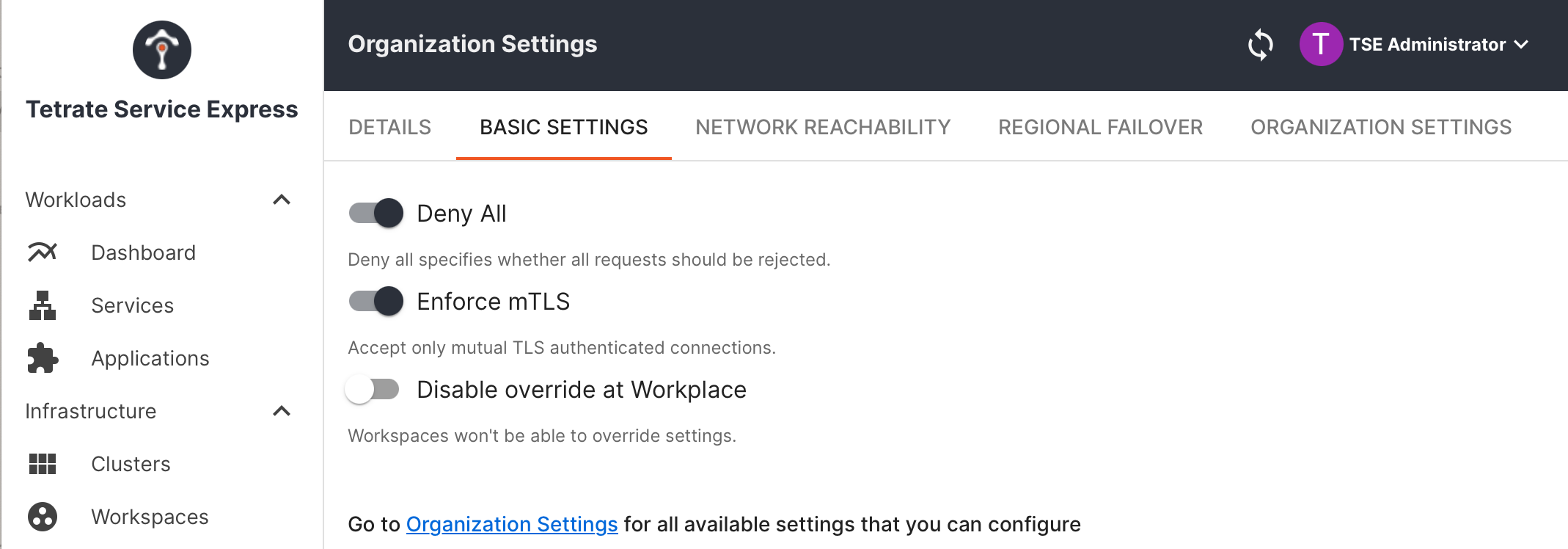

Navigate to Settings > Basic Settings. Ensure that Enforce mTLS and Deny-All are both enabled:

Figure: TSE Security Settings

Alternatively, you can configure strict security using the Tetrate APIs, by following the Tetrate Service Bridge instructions.

In Tetrate products, the default settings are associated with the top-level Organization, which is user-definable in TSB and set to the value tse in TSE.

You'll find the security settings in the OrganizationSetting.spec.defaultSecuritySetting stanza in the OrganizationSetting named default:

tctl get os -o yaml

These settings can be further overridden on a per-tenant or per-workspace basis. Note that TSE has a single tse tenant, whereas TSB supports multiple user-defined tenants.

- To require mTLS by default, set authenticationSettings.trafficMode to REQUIRED

- To declare Deny-All by default, set authorization.rules.denyAll to true

- To prevent child resources from loosening these settings, set propagationStrategy to STRICTER. This step is optional; the example below keeps REPLACE so that individual workspaces can override the defaults.

spec:
  defaultSecuritySetting:
    authenticationSettings:
      trafficMode: REQUIRED
    authorization:
      mode: RULES
      rules:
        denyAll: true
    propagationStrategy: REPLACE

These settings are described in the TSB API reference.

You will later override these settings selectively to allow permitted flows.
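
Before moving on, it can help to confirm that the exported settings really contain both strict defaults. The following is a minimal sketch, not a Tetrate-provided tool: check_strict_defaults and the org-settings.yaml file name are hypothetical, and the check is a plain text match that does not verify where in the file the keys appear.

```shell
# Hypothetical helper: succeeds only if the YAML file sets both strict defaults.
# It is a plain text match, so it does not check where in the file the keys appear.
check_strict_defaults() {
  grep -Eq '^[[:space:]]*trafficMode:[[:space:]]*REQUIRED[[:space:]]*$' "$1" &&
    grep -Eq '^[[:space:]]*denyAll:[[:space:]]*true[[:space:]]*$' "$1"
}

# Export the current OrganizationSetting and check it, if tctl is installed.
if command -v tctl >/dev/null 2>&1; then
  tctl get os -o yaml > org-settings.yaml
  if check_strict_defaults org-settings.yaml; then
    echo "strict defaults are set"
  else
    echo "strict defaults are missing"
  fi
fi
```

If the check reports that the defaults are missing, edit the exported file as described above and re-apply it with tctl apply -f org-settings.yaml.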

Platform: Create Kubernetes Namespaces

Kubernetes' core unit of isolation is the namespace. Many deployments use very fine-grained namespaces in order to enforce a high level of control and to provide freedom for repeated configuration per service.

Once a workload cluster has been onboarded to TSE/TSB, you can then create the namespaces that each App Owner team will need, and label them for Istio injection. This is all that is needed for the resources in that namespace to be managed by TSE/TSB:

kubectl create namespace bookinfo

kubectl label namespace bookinfo istio-injection=enabled
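
If you prefer to keep namespaces under version control, the same result can be expressed declaratively. This is a sketch under the assumption that a single manifest per namespace suits your workflow; the file name bookinfo-ns.yaml is illustrative.

```shell
# Write a Namespace manifest that carries the Istio injection label,
# equivalent to the create and label commands above.
cat <<EOF > bookinfo-ns.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: bookinfo
  labels:
    istio-injection: enabled
EOF
```

Apply the file with kubectl apply -f bookinfo-ns.yaml. Unlike kubectl create, kubectl apply can be re-run safely if the namespace already exists.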

Platform: Create Tetrate Workspaces

In practice, fine-grained namespaces do not accurately model the Application and Team structure that many enterprises follow. Applications are made up of multiple namespaces, often spanning multiple different clusters, zones, regions or even clouds. Teams are responsible for multiple applications, and some applications operate as 'shared services'.

For this reason, Tetrate introduces a higher-level construct called a Workspace. A workspace is the primary unit of isolation in Tetrate products and is simply a set of namespaces, in one or more clusters.

Figure: Namespaces and Workspaces

Workspaces provide a convenient higher-level abstraction that aligns with an organization’s applications, which typically span multiple namespaces and/or clusters.

TSB Tenants

Tetrate Service Express (TSE) provides a single Organization (for global settings) and multiple Workspaces (for individual settings). TSE is intended for use by a single team.

Tetrate Service Bridge adds an intermediate Tenant concept that allows for multiple separate teams within the top-level organization. The Tenant can apply an additional level of isolation and can override global settings on a per-team basis.

In this document, we'll assume a single team within the organization, so all settings will be applied at a Workspace level. Examples will use an organization named tse and a tenant named tse; when using TSB, you should change these to reflect your selected hierarchy.

Create Tetrate workspaces for each Application, spanning the namespaces assigned to that app:

- A list of namespaces is defined by the Workspace namespaceSelector. Entries can be limited to a single cluster (cluster-1/bookinfo) or can span all clusters (*/bookinfo)

- Note how we override the defaultSecuritySetting for each workspace using a WorkspaceSetting

cat <<EOF > bookinfo-ws.yaml
apiVersion: api.tsb.tetrate.io/v2
kind: Workspace
metadata:
  organization: tse
  tenant: tse
  name: bookinfo-ws
spec:
  namespaceSelector:
    names:
      - "*/bookinfo"
---
apiVersion: api.tsb.tetrate.io/v2
kind: WorkspaceSetting
metadata:
  organization: tse
  tenant: tse
  workspace: bookinfo-ws
  name: bookinfo-ws-settings
spec:
  defaultSecuritySetting:
    authenticationSettings:
      trafficMode: REQUIRED
    authorization:
      mode: WORKSPACE
EOF

tctl apply -f bookinfo-ws.yaml

By opening up a Workspace (authorization.mode: WORKSPACE), you create a 'bubble' in your Zero-Trust environment. All services within that workspace can communicate with each other, but must use mTLS.
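
When you manage several applications, the Workspace and WorkspaceSetting pair can be generated per application instead of being written by hand. The loop below is a sketch only: payments is a hypothetical second application, and each application is assumed to live in a namespace of the same name on every cluster.

```shell
# Generate one Workspace + WorkspaceSetting file per application.
# "payments" is a hypothetical example; replace the list with your own apps.
for app in bookinfo payments; do
  cat <<EOF > "${app}-ws.yaml"
apiVersion: api.tsb.tetrate.io/v2
kind: Workspace
metadata:
  organization: tse
  tenant: tse
  name: ${app}-ws
spec:
  namespaceSelector:
    names:
      - "*/${app}"
---
apiVersion: api.tsb.tetrate.io/v2
kind: WorkspaceSetting
metadata:
  organization: tse
  tenant: tse
  workspace: ${app}-ws
  name: ${app}-ws-settings
spec:
  defaultSecuritySetting:
    authenticationSettings:
      trafficMode: REQUIRED
    authorization:
      mode: WORKSPACE
EOF
done
```

Review each generated file, then apply it with tctl apply -f, for example tctl apply -f payments-ws.yaml.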

Platform: Deploy Ingress Gateways

Typically, you'll want to arrange for external traffic to reach certain services within a Workspace. To do this, you should first deploy an Ingress Gateway in each workspace in each cluster. The Application Owner can later define the Gateway rules that expose their services through this Ingress Gateway.

Create a Tetrate Gateway Group

First, create a Tetrate Gateway Group that is scoped to each workspace and cluster that will host an Ingress Gateway. For example, if the Bookinfo workspace spans cluster-1 and cluster-2, you could create two Gateway groups for this workspace, one per cluster:

cat <<EOF > bookinfo-gwgroup-cluster-1.yaml
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: bookinfo-gwgroup-cluster-1
  organization: tse
  tenant: tse
  workspace: bookinfo-ws
spec:
  namespaceSelector:
    names:
      - "cluster-1/bookinfo"
EOF

tctl apply -f bookinfo-gwgroup-cluster-1.yaml
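
The example above covers cluster-1 only. For a workspace that spans both clusters, one way to produce the second group is to generate the files in a loop. This is a sketch that assumes the cluster names cluster-1 and cluster-2 from the example and reuses the same naming pattern.

```shell
# Generate one Gateway Group file per cluster for the bookinfo workspace.
for cluster in cluster-1 cluster-2; do
  cat <<EOF > "bookinfo-gwgroup-${cluster}.yaml"
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: bookinfo-gwgroup-${cluster}
  organization: tse
  tenant: tse
  workspace: bookinfo-ws
spec:
  namespaceSelector:
    names:
      - "${cluster}/bookinfo"
EOF
done
```

Apply each generated file with tctl apply -f, for example tctl apply -f bookinfo-gwgroup-cluster-2.yaml.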

Deploy an Ingress Gateway

Next, deploy an Ingress Gateway in each workspace and cluster that is to receive external traffic:

cat <<EOF > bookinfo-ingress-gw.yaml
apiVersion: install.tetrate.io/v1alpha1
kind: IngressGateway
metadata:
  name: bookinfo-ingress-gw
  namespace: bookinfo
spec:
  kubeSpec:
    service:
      type: LoadBalancer
EOF

kubectl apply -f bookinfo-ingress-gw.yaml

This step creates an Envoy proxy pod in the namespace that functions as an Ingress Gateway (kubectl get pod -n bookinfo -l app=bookinfo-ingress-gw). Note that an IngressGateway is a resource created in a particular cluster, so you deploy it with kubectl rather than tctl.

Later, an App Owner will want to create Gateway resources to expose their selected services. They will need to know:

- Name of the Tetrate Workspace, e.g. bookinfo-ws

- Name of the Tetrate Gateway Group on each cluster, e.g. bookinfo-gwgroup-cluster-1

- Name of the Ingress Gateway on each cluster, e.g. bookinfo-ingress-gw. The same name can be used on all clusters

Ingress Gateways are very lightweight, and running a separate Ingress Gateway per Workspace provides isolation for security and fault purposes. For very large deployments, you may wish to share Ingress Gateways between multiple Workspaces.

Platform: Enable GitOps Integration

The configuration for the Tetrate-managed platform is provided through two means:

- Platform-wide configuration is provided using tctl, and the calling user needs to authenticate against the Tetrate API server

- Per-cluster configuration is provided using kubectl, and the calling user needs to authenticate against the Kubernetes API server

For some use cases, the user (Platform Owner or Application Owner) will need to provide both platform-wide and per-cluster configuration.

Tetrate's GitOps integration allows users to provide platform-wide configuration using the Kubernetes API. GitOps should be enabled on one or more clusters; the process installs the CRDs for the Tetrate platform-wide configuration, and any resources are automatically pushed up from the cluster to the Tetrate API server:

- Tetrate Service Express: GitOps integration is enabled by default on Tetrate Service Express. For an overview of the integration, refer to the GitOps in TSE guide.

- Tetrate Service Bridge: You need to explicitly enable GitOps on Tetrate Service Bridge. For the details, refer to the TSB documentation Configuring GitOps.

In summary, the GitOps integration is useful even before an organization has adopted a GitOps posture for managing configuration, because it also allows selected K8s users to manage Tetrate configuration. These users do not need a Tetrate user/role, and they can use the K8s tools they are already accustomed to.
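
As an illustration, with GitOps enabled the bookinfo Workspace could be written as a Kubernetes resource and applied with kubectl instead of tctl. Treat this as a sketch: the tsb.tetrate.io/v2 apiVersion and the tsb.tetrate.io/organization and tsb.tetrate.io/tenant annotations are assumptions about the Kubernetes form of the resource, and the file name is illustrative. Confirm the exact schema against the GitOps documentation for your product version.

```shell
# Sketch of a Workspace in Kubernetes (CRD) form.
# ASSUMPTION: the apiVersion and annotation keys below follow the Tetrate
# GitOps convention; verify them before use.
cat <<EOF > bookinfo-ws-k8s.yaml
apiVersion: tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: bookinfo-ws
  namespace: bookinfo
  annotations:
    tsb.tetrate.io/organization: tse
    tsb.tetrate.io/tenant: tse
spec:
  namespaceSelector:
    names:
      - "*/bookinfo"
EOF
```

A user with access to the bookinfo namespace could then run kubectl apply -f bookinfo-ws-k8s.yaml, and the integration would push the resource up to the Tetrate API server.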

Platform: Enable Additional Integrations

You may wish to enable other integrations for your platform. For example, when operating on AWS:

- Install the AWS Load Balancer Controller for better load balancer integration

- Enable the AWS Controller to manage DNS entries for the services exposed by the App Owner