Configuring Application Gateways Using OpenAPI Annotations

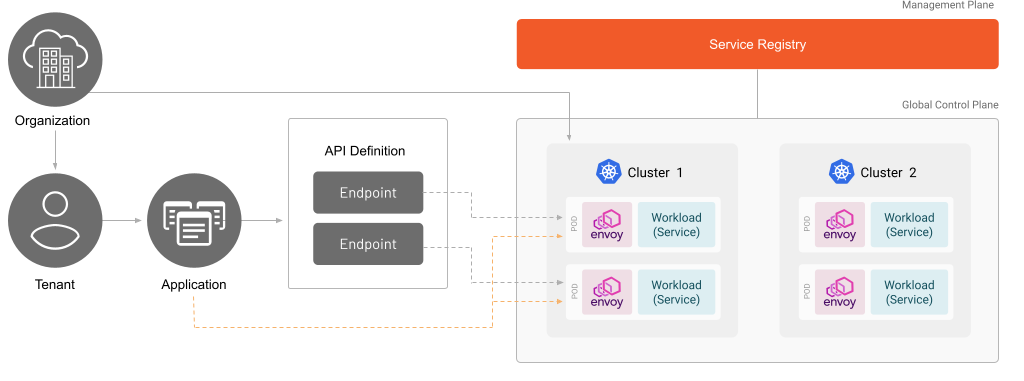

An Application in TSB represents a set of logical groupings of Services that are related to each other and expose a set of APIs that implement a complete set of business logic.

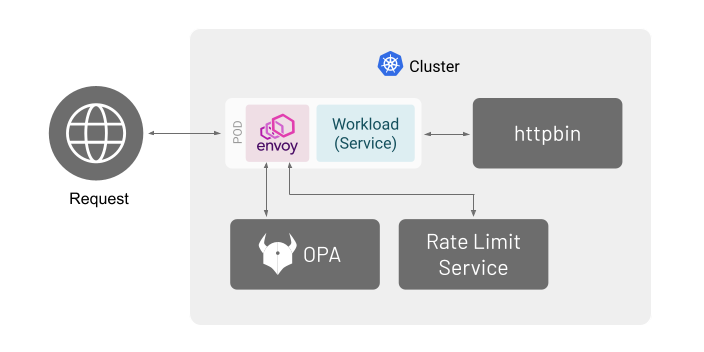

TSB can leverage OpenAPI annotations when configuring API runtime policies. In this document you will enable authorization via Open Policy Agent (OPA), as well as rate limiting through an external service. Each request will need to go through basic authorization, and for each valid user a rate limit policy will be enforced.

Before you get started, make sure you:

✓ Familiarize yourself with TSB concepts

✓ Familiarize yourself with Open Policy Agent (OPA)

✓ Familiarize yourself with Envoy external authorization and rate limit

✓ Install the TSB demo environment

✓ Familiarize yourself with Istio Bookinfo sample app

✓ Create a Tenant

Deploy httpbin Service

Follow the instructions in this document to create the httpbin service. Complete all steps in that document.

TSB Specific Annotations

The following extra TSB-specific annotations can be added to the OpenAPI specification in order to configure the API.

| Annotation | Description |
|---|---|
| x-tsb-service | The upstream service name in TSB that provides the API, as seen in the TSB service registry (you can check with tctl get services). |
| x-tsb-clusters | The names of the clusters where upstream services are exposed via an IngressGateway. Use this annotation to create an EdgeGateway with cluster-based routing and weighted distribution. |
| x-tsb-cors | The CORS policy for the server. |
| x-tsb-tls | The TLS settings for the server. If omitted, the server will be configured to serve plain text connections. The secretName field must point to an existing Kubernetes secret in the cluster. |
| x-tsb-external-authorization | The OPA settings for the server. |
| x-tsb-ratelimiting | The external rate limit server (e.g. envoyproxy/ratelimit) settings. |

Configure the API for IngressGateway

Create the following API definition in a file called httpbin-api.yaml.

In this scenario you will only use one of the APIs (/get) that the httpbin service provides. If you want to use all of the httpbin API you can get their OpenAPI specifications from this link.

apiversion: application.tsb.tetrate.io/v2
kind: API
metadata:
  organization: <organization>
  tenant: <tenant>
  application: httpbin
  name: httpbin-ingress-gateway
spec:
  description: Httpbin OpenAPI
  workloadSelector:
    namespace: httpbin
    labels:
      app: httpbin-gateway
  openapi: |
    openapi: 3.0.1
    info:
      version: '1.0-oas3'
      title: httpbin
      description: An unofficial OpenAPI definition for httpbin
      x-tsb-service: httpbin.httpbin
    servers:
      - url: https://httpbin.tetrate.com
        x-tsb-cors:
          allowOrigin:
            - "*"
        x-tsb-tls:
          mode: SIMPLE
          secretName: httpbin-certs
    paths:
      /get:
        get:
          tags:
            - HTTP methods
          summary: |
            Returns the GET request's data.
          responses:
            '200':
              description: OK
              content:
                application/json:
                  schema:
                    type: object

Apply using tctl:

tctl apply -f httpbin-api.yaml

At this point, you should be able to send requests to the httpbin Ingress Gateway.

Since you do not control httpbin.tetrate.com, you will have to trick curl into thinking that httpbin.tetrate.com resolves to the IP address of the Ingress Gateway.

Obtain the IP address of the Ingress Gateway that you previously created using the following command.

kubectl -n httpbin get service httpbin-gateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}'

Execute the following command to send HTTP requests to the httpbin service through the Ingress Gateway. Replace the gateway-ip with the value you obtained in the previous step. You also need to pass the CA cert, which you should have created in the step to deploy the httpbin service.

curl -I "https://httpbin.tetrate.com/get" \
  --resolve "httpbin.tetrate.com:443:<gateway-ip>" \
  --cacert httpbin.crt \
  -H "X-B3-Sampled: 1"

You should see a successful HTTP response.
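The lookup and the curl call above can be combined in a small shell sketch. The helper names resolve_arg and gateway_ip are hypothetical and not part of TSB or kubectl. The sketch assumes the gateway Service publishes an IP address rather than a hostname.

```shell
# Hypothetical helper: prints the HOST:PORT:ADDRESS value expected by
# curl's --resolve option.
resolve_arg() {
  host=$1
  port=$2
  ip=$3
  printf '%s:%s:%s\n' "$host" "$port" "$ip"
}

# Hypothetical helper: looks up the external IP of the gateway Service
# with the same kubectl command used above (requires cluster access).
gateway_ip() {
  kubectl -n httpbin get service httpbin-gateway \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage, once the gateway is deployed:
#   curl -I "https://httpbin.tetrate.com/get" \
#     --resolve "$(resolve_arg httpbin.tetrate.com 443 "$(gateway_ip)")" \
#     --cacert httpbin.crt
```

If gateway_ip prints nothing, the load balancer may not have been assigned an address yet, and the resulting --resolve value will be rejected by curl.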

Configure the API for EdgeGateway

From TSB 1.7.0 onwards, you can use an API object to configure an EdgeGateway that routes to multiple Tier 2 clusters, where IngressGateways are deployed to expose the services.

Create the following API definition in a file called httpbin-api.yaml.

In this scenario you will only use one of the APIs (/get) that the httpbin service provides. If you want to use all of the httpbin API you can get their OpenAPI specifications from this link.

apiversion: application.tsb.tetrate.io/v2
kind: API
metadata:
  organization: <organization>
  tenant: <tenant>
  application: httpbin
  name: httpbin-edge-gateway
spec:
  description: Httpbin Edge Gateway OpenAPI
  workloadSelector:
    namespace: httpbin
    labels:
      app: httpbin-gateway
  openapi: |
    openapi: 3.0.1
    info:
      version: '1.0-oas3'
      title: httpbin
      description: An unofficial OpenAPI definition for httpbin
      x-tsb-clusters:
        clusters:
          - name: cluster-1 # name of the cluster where httpbin service is exposed via IngressGateway
            weight: 100
    servers:
      - url: https://httpbin.tetrate.com
        x-tsb-cors:
          allowOrigin:
            - "*"
        x-tsb-tls:
          mode: SIMPLE
          secretName: httpbin-certs
    paths:
      /get:
        get:
          tags:
            - HTTP methods
          summary: |
            Returns the GET request's data.
          responses:
            '200':
              description: OK
              content:
                application/json:
                  schema:
                    type: object

Apply using tctl:

tctl apply -f httpbin-api.yaml

At this point, you should be able to send requests to the httpbin Edge Gateway.

Since you do not control httpbin.tetrate.com, you will have to trick curl into thinking that httpbin.tetrate.com resolves to the IP address of the Edge Gateway.

Obtain the IP address of the Edge Gateway that you previously created using the following command.

kubectl -n httpbin get service httpbin-gateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}'

Execute the following command to send HTTP requests to the httpbin service through the Edge Gateway. Replace the gateway-ip with the value you obtained in the previous step. You also need to pass the CA cert, which you should have created in the step to deploy the httpbin service.

curl -I "https://httpbin.tetrate.com/get" \
  --resolve "httpbin.tetrate.com:443:<gateway-ip>" \
  --cacert httpbin.crt \
  -H "X-B3-Sampled: 1"

You should see a successful HTTP response.

Authorization with OPA

Once the API is properly exposed via OpenAPI annotations, OPA can be configured against the API Gateway.

In this example you will create a policy that checks for basic authentication in the request header. If the user is authenticated, the user name should be added to the x-user header so that it can be used by the rate limit service to enforce quota for each user later.

Configure OPA

Create the opa namespace, where OPA and its configuration will be deployed:

kubectl create namespace opa

Create a file named openapi-policy.rego:

package demo.authz

default allow = false

# username and password database
user_passwords = {
    "alice": "password",
    "bob": "password"
}

allow = response {
    # check if password from header is same as in database for the specific user
    basic_auth.password == user_passwords[basic_auth.user_name]
    response := {
        "allowed": true,
        "headers": {"x-user": basic_auth.user_name}
    }
}

basic_auth := {"user_name": user_name, "password": password} {
    v := input.attributes.request.http.headers.authorization
    startswith(v, "Basic ")
    s := substring(v, count("Basic "), -1)
    # Basic credentials use the standard base64 alphabet, not the URL-safe one
    [user_name, password] := split(base64.decode(s), ":")
}
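To see what the basic_auth rule receives, the following shell sketch builds and parses a Basic Authorization value by hand. The function names are hypothetical, and the sketch assumes a base64 command that accepts -d for decoding. Unlike the policy, which expects exactly one colon in the decoded value, the parser here splits at the first colon.

```shell
# Hypothetical helper: builds the header value that `curl -u user:password`
# would send.
basic_auth_value() {
  printf 'Basic %s\n' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}

# Hypothetical helper: mirrors the steps of the basic_auth rule.
# Prints "<user> <password>" or returns non-zero for other schemes.
parse_basic_auth() {
  header=$1
  case $header in
    "Basic "*) ;;          # same check as startswith(v, "Basic ")
    *) return 1 ;;
  esac
  encoded=${header#"Basic "}
  decoded=$(printf '%s' "$encoded" | base64 -d) || return 1
  user=${decoded%%:*}      # text before the first colon
  password=${decoded#*:}   # text after the first colon
  printf '%s %s\n' "$user" "$password"
}

# Usage:
#   parse_basic_auth "$(basic_auth_value alice password)"
```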

Then create a ConfigMap using the file you created:

kubectl -n opa create configmap opa-policy \
  --from-file=openapi-policy.rego

Create the Deployment and the Service objects that use the above policy configuration in a file named opa.yaml.

apiVersion: v1
kind: Service
metadata:
  name: opa
  namespace: opa
spec:
  selector:
    app: opa
  ports:
    - name: grpc
      protocol: TCP
      port: 9191
      targetPort: 9191
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: opa
  namespace: opa
spec:
  replicas: 1
  selector:
    matchLabels:
      app: opa
  template:
    metadata:
      labels:
        app: opa
      name: opa
    spec:
      containers:
        - image: openpolicyagent/opa:0.29.4-envoy-2
          name: opa
          securityContext:
            runAsUser: 1111
          ports:
            - containerPort: 8181
          args:
            - 'run'
            - '--server'
            - '--addr=localhost:8181'
            - '--diagnostic-addr=0.0.0.0:8282'
            - '--set=plugins.envoy_ext_authz_grpc.addr=:9191'
            - '--set=plugins.envoy_ext_authz_grpc.path=demo/authz/allow'
            - '--set=decision_logs.console=true'
            - '--ignore=.*'
            - '/policy/openapi-policy.rego'
          livenessProbe:
            httpGet:
              path: /health?plugins
              scheme: HTTP
              port: 8282
            initialDelaySeconds: 5
            periodSeconds: 5
          readinessProbe:
            httpGet:
              path: /health?plugins
              scheme: HTTP
              port: 8282
            initialDelaySeconds: 5
            periodSeconds: 5
          volumeMounts:
            - readOnly: true
              mountPath: /policy
              name: opa-policy
      volumes:
        - name: opa-policy
          configMap:
            name: opa-policy

Then apply the manifest:

kubectl apply -f opa.yaml

Finally, open the httpbin-api.yaml file that you created in a previous section, and add the x-tsb-external-authorization annotation in the server component:

...
servers:
  - url: https://httpbin.tetrate.com
    ...
    x-tsb-external-authorization:
      uri: grpc://opa.opa.svc.cluster.local:9191

And apply the changes again:

tctl apply -f httpbin-api.yaml

Testing

To test, execute the following command, replacing the values for username, password, and gateway-ip accordingly.

curl -u <username>:<password> \
  "https://httpbin.tetrate.com/get" \
  --resolve "httpbin.tetrate.com:443:<gateway-ip>" \
  --cacert httpbin.crt \
  -s \
  -o /dev/null \
  -w "%{http_code}\n" \
  -H "X-B3-Sampled: 1"

| Username | Password | Status Code |
|---|---|---|
| alice | password | 200 |
| bob | password | 200 |
| <anything else> | <anything else> | 403 (*1) |

(*1) See documentation for more details

Rate limiting with external service

TSB supports internal and external mode for rate limiting. In this example you will deploy a separate Envoy rate limit service.

Configure Rate Limit

Create the ext-ratelimit namespace, where the rate limit server and its configuration will be deployed:

kubectl create namespace ext-ratelimit

Create a file named ext-ratelimit-config.yaml. This configuration specifies that the user alice should be rate limited to 10 requests/minute and the user bob to 2 requests/minute.

domain: httpbin-ratelimit
descriptors:
  - key: x-user-descriptor
    value: alice
    rate_limit:
      unit: minute
      requests_per_unit: 10
  - key: x-user-descriptor
    value: bob
    rate_limit:
      unit: minute
      requests_per_unit: 2

Then create a ConfigMap using the file you created:

kubectl -n ext-ratelimit create configmap ratelimit-config \
  --from-file=config.yaml=ext-ratelimit-config.yaml

You now need to deploy Redis and envoyproxy/ratelimit. Create a file called redis-ratelimit.yaml with the following contents:

# Copyright Istio Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

####################################################################################
# Redis service and deployment
# Ratelimit service and deployment
####################################################################################
apiVersion: v1
kind: Service
metadata:
  name: redis
  namespace: ext-ratelimit
  labels:
    app: redis
spec:
  ports:
    - name: redis
      port: 6379
  selector:
    app: redis
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
  namespace: ext-ratelimit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
        - image: redis:alpine
          imagePullPolicy: Always
          name: redis
          ports:
            - name: redis
              containerPort: 6379
      restartPolicy: Always
      serviceAccountName: ''
---
apiVersion: v1
kind: Service
metadata:
  name: ratelimit
  namespace: ext-ratelimit
  labels:
    app: ratelimit
spec:
  ports:
    - name: http-port
      port: 8080
      targetPort: 8080
      protocol: TCP
    - name: grpc-port
      port: 8081
      targetPort: 8081
      protocol: TCP
    - name: http-debug
      port: 6070
      targetPort: 6070
      protocol: TCP
  selector:
    app: ratelimit
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratelimit
  namespace: ext-ratelimit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratelimit
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: ratelimit
    spec:
      containers:
        - image: envoyproxy/ratelimit:6f5de117 # 2021/01/08
          imagePullPolicy: Always
          name: ratelimit
          command: ['/bin/ratelimit']
          env:
            - name: LOG_LEVEL
              value: debug
            - name: REDIS_SOCKET_TYPE
              value: tcp
            - name: REDIS_URL
              value: redis:6379
            - name: USE_STATSD
              value: 'false'
            - name: RUNTIME_ROOT
              value: /data
            - name: RUNTIME_SUBDIRECTORY
              value: ratelimit
          ports:
            - containerPort: 8080
            - containerPort: 8081
            - containerPort: 6070
          volumeMounts:
            - name: config-volume
              mountPath: /data/ratelimit/config/config.yaml
              subPath: config.yaml
      volumes:
        - name: config-volume
          configMap:
            name: ratelimit-config

Apply the manifest with kubectl apply -f redis-ratelimit.yaml. If everything is successful, you should have a working rate limit server.

Next, add the following rate limiting annotation to the server object in httpbin-api.yaml, then apply the file again with tctl apply -f httpbin-api.yaml:

...
servers:
  - url: https://httpbin.tetrate.com
    ...
    x-tsb-external-ratelimiting:
      domain: "httpbin-ratelimit"
      rateLimitServerUri: "grpc://ratelimit.ext-ratelimit.svc.cluster.local:8081"
      rules:
        - dimensions:
            - requestHeaders:
                headerName: x-user
                descriptorKey: x-user-descriptor
...

Testing

To test, execute the following command, replacing the values for username, password, and gateway-ip accordingly.

curl -u <username>:<password> \
  "https://httpbin.tetrate.com/get" \
  --resolve "httpbin.tetrate.com:443:<gateway-ip>" \
  --cacert httpbin.crt \
  -s \
  -o /dev/null \
  -w "%{http_code}\n" \
  -H "X-B3-Sampled: 1"
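To send several requests in a row, the command above can be wrapped in a loop and its status codes counted. The following is a minimal sketch; tally_codes is a hypothetical helper name, and the loop in the usage comment assumes the same placeholders as the command above.

```shell
# Hypothetical helper: reads one HTTP status code per line on stdin and
# prints "<code>: <count>" for each distinct code, sorted by code.
tally_codes() {
  sort | uniq -c | awk '{print $2 ": " $1}'
}

# Usage (requires the gateway from the previous sections):
#   for i in $(seq 1 12); do
#     curl -u alice:password "https://httpbin.tetrate.com/get" \
#       --resolve "httpbin.tetrate.com:443:<gateway-ip>" \
#       --cacert httpbin.crt -s -o /dev/null -w "%{http_code}\n"
#   done | tally_codes
```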

First, try sending multiple requests using the username alice and password password. You should receive the status code 200 for the first 10 requests. After that you should receive 429 responses until the one-minute window has passed.

Try the same with username bob and password password. The behavior should be identical, except that this time you should only be able to send 2 requests before starting to receive 429 responses.
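The expected pattern can be illustrated with a toy model of a single rate limit window. This is only a sketch of the behavior described above, not how envoyproxy/ratelimit is implemented, and simulate_window is a hypothetical name.

```shell
# Toy model: prints the status code expected for each of $2 requests sent
# within one window that allows $1 requests.
simulate_window() {
  limit=$1
  total=$2
  i=1
  while [ "$i" -le "$total" ]; do
    if [ "$i" -le "$limit" ]; then
      echo 200   # still within the quota
    else
      echo 429   # quota exhausted for this window
    fi
    i=$((i + 1))
  done
}

# Usage: bob's limit of 2 requests per minute, 4 requests sent
#   simulate_window 2 4
```

With the configuration in this document, simulate_window 10 12 models alice and simulate_window 2 4 models bob.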

Policy ordering

TSB does not currently support specifying explicit policy order.

Instead, the creation timestamp for the configurations will implicitly be used. Therefore, the execution order cannot be guaranteed if you specify the external authorization and rate limiting services in one go.

This is why in this document the external authorization and rate limiting configurations were applied in two separate steps, in this specific order. This way the authorization processing is performed before the rate limiting.