Priority-Based Multi-Cluster Traffic Routing and Failover

When designing a resilient and efficient system architecture, you often have to decide whether to keep traffic within the local cluster and its nodes or to distribute it across clusters in different regions or fault domains. This decision strongly affects the system's reliability, latency, and fault tolerance.

This document describes how to configure failoverSettings to achieve failover for Gateways and for individual services. These settings can be configured directly on the Gateway resource, and through ServiceTrafficSetting for individual services. It also covers multi-cluster traffic routing and failover using topologyChoice and failoverPriority.

What is the use-case?

Here are some common scenarios that can be addressed using failoverSettings:

Global Traffic Distribution

- Distributing traffic across different clusters based on regions or fault domains enhances fault tolerance and resilience against regional failures.

- It allows for load balancing and scaling services based on geographical proximity to users, optimizing latency and providing better user experience.

Active-Active and Active-Standby endpoint groups define how traffic is managed across multiple clusters in failover scenarios. Active-Active configurations distribute traffic evenly across multiple instances or clusters, while Active-Standby setups designate primary and secondary instances for failover.

Local Traffic Management

- Keeping the traffic within the local cluster and nodes ensures low latency and high throughput as communication occurs within the same network boundary.

- It is suitable for scenarios where services communicate frequently and require fast data transfer without incurring the overhead of additional network hops.

- Service-to-service communication can be configured to stay on nodes within the local cluster or to span availability zones, either for a specific set of services using WorkspaceSettings or organization-wide using OrganizationSettings.

How to Configure FailoverSettings?

In this guide, you will:

✓ Deploy the bookinfo application across multiple application clusters, with an IngressGateway deployed in each.

✓ Deploy a Gateway resource in one of the clusters as a Tier1 Gateway to distribute traffic to the application clusters, which act as Tier2.

✓ Configure FailoverSettings with different options to load balance and fail over services within the same availability zone as well as across multiple regions.

Before you get started, make sure:

✓ TSB is up and running, and GitOps has been enabled for the target cluster

✓ You are familiar with TSB concepts.

✓ You have completed the TSB usage quickstart. This document assumes you are familiar with Tenants, Workspaces, and Config Groups.

Scenario: Global Traffic Distribution Using FailoverPriority

Starting from TSB version 1.10.0, failoverPriority can be configured directly on the following TSB resources:

- OrganizationSettings: configure global traffic distribution organization-wide.

- Gateway: configure failoverPriority directly on the Gateway resource.

- WorkspaceSettings: configure failoverPriority for a set of applications under a namespace.

- ServiceTrafficSetting: configure failoverPriority for individual services.

What is the use-case?

In a multi-cluster scenario where a Tier1 Gateway routes traffic to multiple Tier2 clusters (cp-cluster-1, cp-cluster-2, and cp-cluster-3) provisioned in different regions, you can use TSB-specific labels with the failover.tetrate.io/* prefix to define fault domains or groupings. This lets you define active-active and active-standby sets of Tier2 Gateway endpoints, providing fault tolerance and resilience against regional failures.

In this setup, cp-cluster-1 and cp-cluster-2 are provisioned in one region (us-west-1) and cp-cluster-3 in a different region (us-east-1).

Deploy Bookinfo application

Create the payment namespace in cp-cluster-1, cp-cluster-2, and cp-cluster-3, label it with istio-injection=enabled to enable sidecar injection, and deploy bookinfo:

```bash
kubectl create namespace payment
kubectl label namespace payment istio-injection=enabled --overwrite=true
kubectl apply -f https://docs.tetrate.io/examples/flagger/bookinfo.yaml -n payment
```
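These commands have to run once in each cluster. A minimal bash sketch that loops over the clusters is shown below. It assumes your kubeconfig has contexts named cp-cluster-1, cp-cluster-2, and cp-cluster-3 (substitute your own context names); the helper name setup_payment_namespace is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: repeats the namespace setup in every cluster passed as an
# argument. Each argument is assumed to be a kubeconfig context name.
setup_payment_namespace() {
  local ctx
  for ctx in "$@"; do
    echo "Setting up namespace payment in ${ctx}"
    # Render the namespace client-side and apply it, so that re-running the
    # helper does not fail when the namespace already exists.
    kubectl --context "$ctx" create namespace payment --dry-run=client -o yaml \
      | kubectl --context "$ctx" apply -f - || return 1
    # Enable sidecar injection for the namespace.
    kubectl --context "$ctx" label namespace payment \
      istio-injection=enabled --overwrite=true || return 1
    # Deploy the bookinfo sample into the namespace.
    kubectl --context "$ctx" apply -n payment \
      -f https://docs.tetrate.io/examples/flagger/bookinfo.yaml || return 1
  done
}
# Example: setup_payment_namespace cp-cluster-1 cp-cluster-2 cp-cluster-3
```

The later scenarios use the same steps on fewer clusters, so the same helper applies with a shorter argument list.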

Create the TSB Tenant and Workspace configuration for bookinfo:

```yaml
apiVersion: tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: payment
  annotations:
    tsb.tetrate.io/organization: tetrate
spec:
  displayName: Payment
---
apiVersion: tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: payment-ws
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
spec:
  namespaceSelector:
    names:
      - "cp-cluster-1/payment"
      - "cp-cluster-2/payment"
      - "cp-cluster-3/payment"
  displayName: payment-ws
```

Apply the configuration using kubectl:

```bash
kubectl apply -f bookinfo.yaml -n payment
```

Create Bookinfo IngressGateway Configuration

```yaml
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: bookinfo-gg
  namespace: payment
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
spec:
  displayName: Bookinfo Gateway Group
  namespaceSelector:
    names:
      - "cp-cluster-1/payment"
      - "cp-cluster-2/payment"
      - "cp-cluster-3/payment"
  configMode: BRIDGED
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: bookinfo-ingress-gateway
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
    tsb.tetrate.io/gatewayGroup: bookinfo-gg
spec:
  displayName: Bookinfo Ingress
  workloadSelector:
    namespace: payment
    labels:
      app: bookinfo-gateway
  http:
    - hostname: bookinfo.tetrate.io
      name: bookinfo-tetrate
      port: 80
      routing:
        rules:
          - route:
              serviceDestination:
                host: payment/productpage.payment.svc.cluster.local
                port: 9080
```

Apply the configuration using kubectl:

```bash
kubectl apply -f bookinfo.yaml -n payment
```

Create a Gateway that acts as the Tier1 Gateway and distributes traffic to the Tier2 Gateways deployed in cp-cluster-1, cp-cluster-2, and cp-cluster-3:

```yaml
apiVersion: tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: tier1
  annotations:
    tsb.tetrate.io/organization: tetrate
spec:
  displayName: Tier1
---
apiVersion: tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: tier1-ws
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
spec:
  displayName: Tier1
  namespaceSelector:
    names:
      - "cp-cluster-3/tier1"
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: tier1-gg
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1-ws
spec:
  displayName: Tier1 Gateway Group
  namespaceSelector:
    names:
      - "cp-cluster-3/tier1"
  configMode: BRIDGED
---
apiVersion: install.tetrate.io/v1alpha1
kind: Gateway
metadata:
  name: tier1-gateway
  namespace: tier1
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: t1-gateway
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1-ws
    tsb.tetrate.io/gatewayGroup: tier1-gg
spec:
  displayName: T1 Gateway
  workloadSelector:
    namespace: tier1
    labels:
      app: tier1-gateway
  http:
    - hostname: bookinfo.tetrate.io
      port: 80
      name: bookinfo-tetrate
      routing:
        rules:
          - route:
              clusterDestination: {}
```

Apply the configuration using kubectl:

```bash
kubectl apply -f gateway-config.yaml -n tier1
```

How FailoverPriority Labels are Configured

To configure failover priority labels for the Tier2 Gateway proxy endpoints, label the Bookinfo Tier2 Gateway Service in each cluster as shown below:

cp-cluster-1 and cp-cluster-2: active-active

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: bookinfo-gateway
    failover.tetrate.io/fault-domain: active-active
```

cp-cluster-3: active-standby

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: bookinfo-gateway
    failover.tetrate.io/fault-domain: active-standby
```
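Instead of editing each Service manifest, the same labels can be set with kubectl label. The sketch below is hypothetical: it assumes kubeconfig contexts named after the clusters, and selects the Tier2 Gateway Service by its app: bookinfo-gateway label in the payment namespace (as in the fragments above) rather than assuming a Service name. The helper name label_fault_domain is illustrative only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sets failover.tetrate.io/fault-domain on the Tier2
# Gateway Service(s) in one cluster.
#   $1 = kubeconfig context (assumed to match the cluster name)
#   $2 = fault-domain value, e.g. active-active or active-standby
label_fault_domain() {
  local ctx=$1 domain=$2
  if [[ -z "$ctx" || -z "$domain" ]]; then
    echo "usage: label_fault_domain <context> <fault-domain>" >&2
    return 2
  fi
  # Select by label so that no particular Service name is assumed.
  kubectl --context "$ctx" -n payment label service -l app=bookinfo-gateway \
    "failover.tetrate.io/fault-domain=${domain}" --overwrite
}
# Example:
#   label_fault_domain cp-cluster-1 active-active
#   label_fault_domain cp-cluster-2 active-active
#   label_fault_domain cp-cluster-3 active-standby
# Show the label as an extra column to verify:
#   kubectl --context cp-cluster-3 -n payment get service -l app=bookinfo-gateway \
#     -L failover.tetrate.io/fault-domain
```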

Default Behaviour

By default, TSB configures topologyChoice: CLUSTER, which keeps traffic within the same nodes or cluster as the originating client, based on the client's locality.

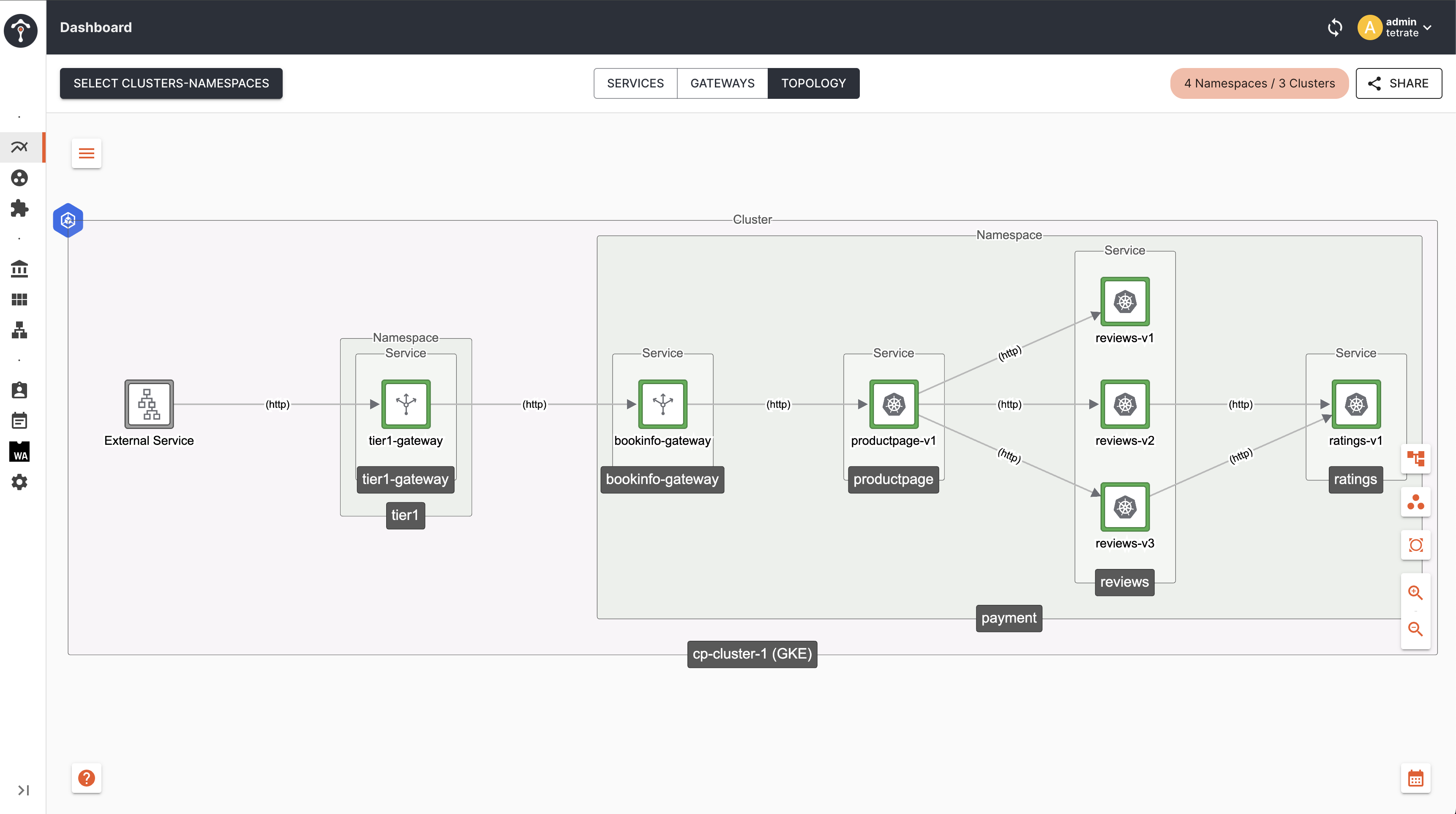

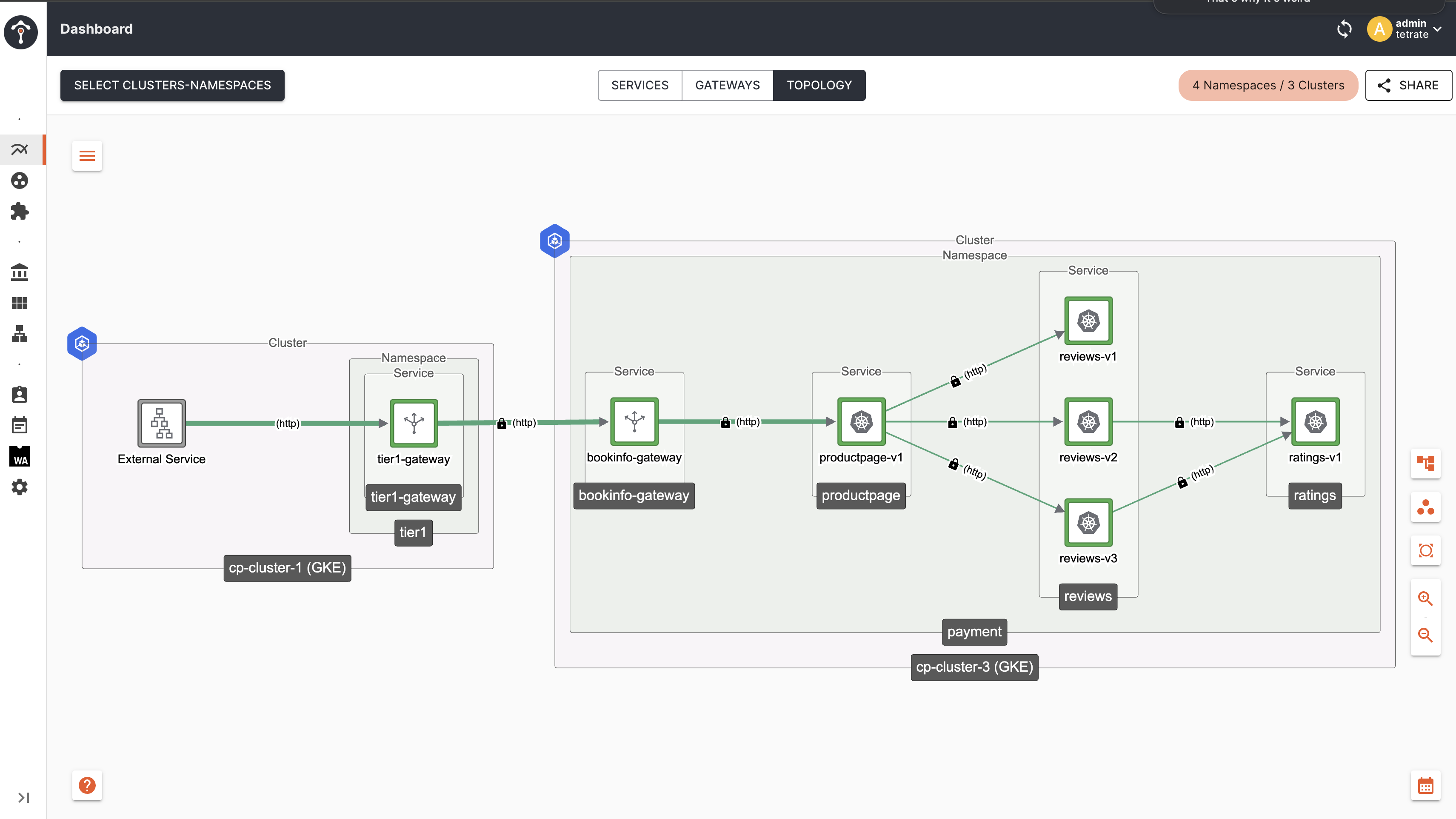

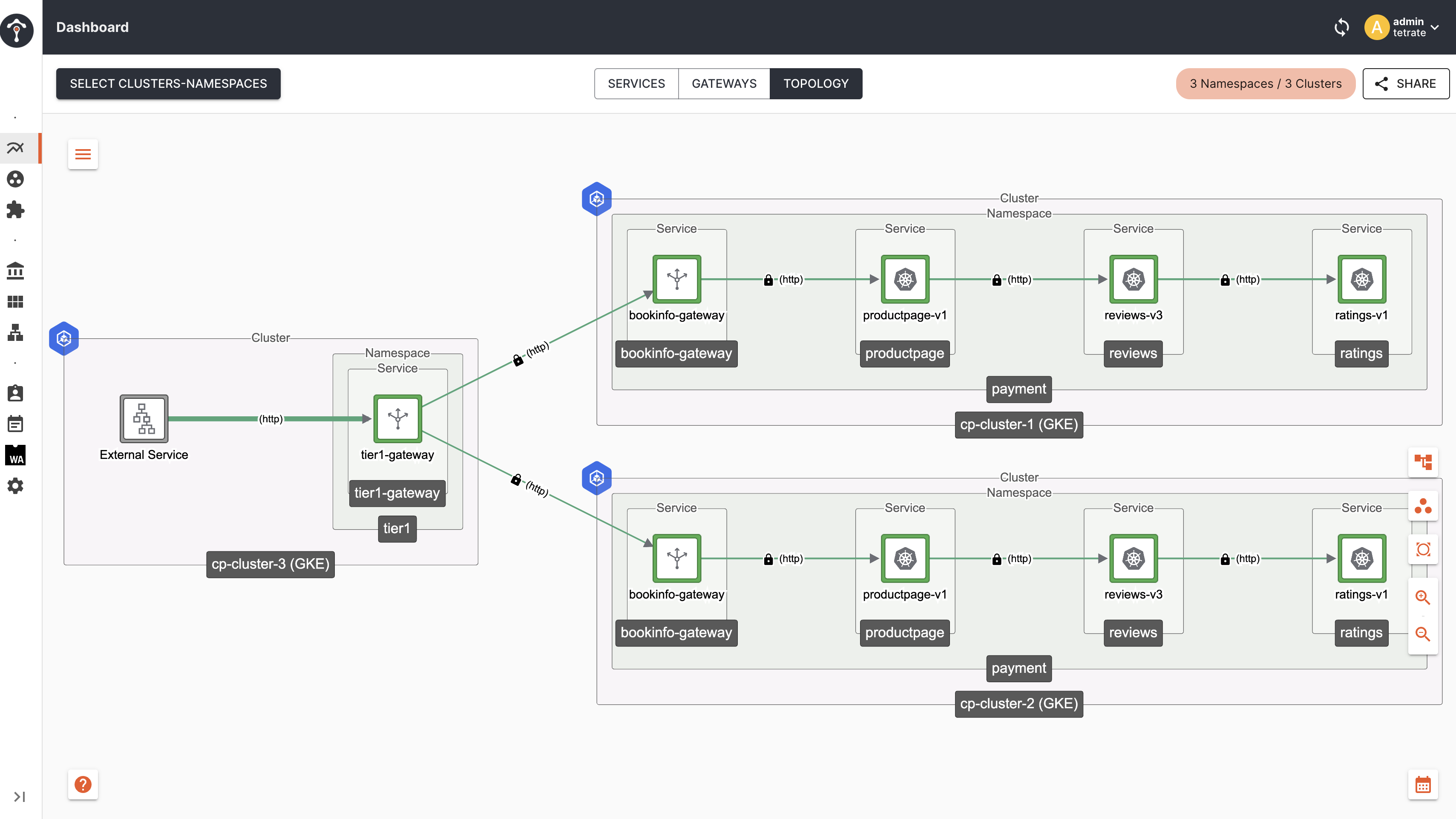

Topology

As the topology shows, traffic from the Tier1 Gateway to the Tier2 Gateway, and on to productpage, stays within the same cluster, i.e. cp-cluster-1.

Configure FailoverPriority Settings for Gateway

You can update your existing Tier1 Gateway resource with failoverPriority settings. Configure a custom failoverPriority label based on the fault domain, using the TSB-specific failover label prefix, i.e. failover.tetrate.io/<FAILOVER_LABEL>:

```yaml
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: t1-gateway
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1-ws
    tsb.tetrate.io/gatewayGroup: tier1-gg
spec:
  displayName: T1 Gateway
  workloadSelector:
    namespace: tier1
    labels:
      app: tier1-gateway
  http:
    - hostname: bookinfo.tetrate.io
      port: 80
      name: bookinfo-tetrate
      routing:
        rules:
          - route:
              clusterDestination: {}
      failoverSettings:
        failoverPriority:
          - failover.tetrate.io/fault-domain
```

Apply using kubectl:

```bash
kubectl apply -f tier1-gateway.yaml -n tier1
```
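To check that the setting reached the generated mesh configuration, you can search the Istio DestinationRules in the Tier1 cluster. This is a hedged sketch: it assumes TSB renders failoverPriority into a DestinationRule (Istio exposes the field under trafficPolicy.loadBalancer.localityLbSetting) and that you pass the kubeconfig context of the cluster running the Tier1 Gateway. It searches all namespaces because the namespace of the generated resource is not stated here. The helper name show_failover_priority is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical check: prints every failoverPriority block found in the Istio
# DestinationRules of one cluster, plus the two lines after each match.
# Returns non-zero (grep's status) when no DestinationRule contains the field.
#   $1 = kubeconfig context of the cluster running the Tier1 Gateway
show_failover_priority() {
  local ctx=$1
  kubectl --context "$ctx" get destinationrules.networking.istio.io \
    --all-namespaces -o yaml | grep -A 2 'failoverPriority:'
}
# Example: show_failover_priority cp-cluster-3
```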

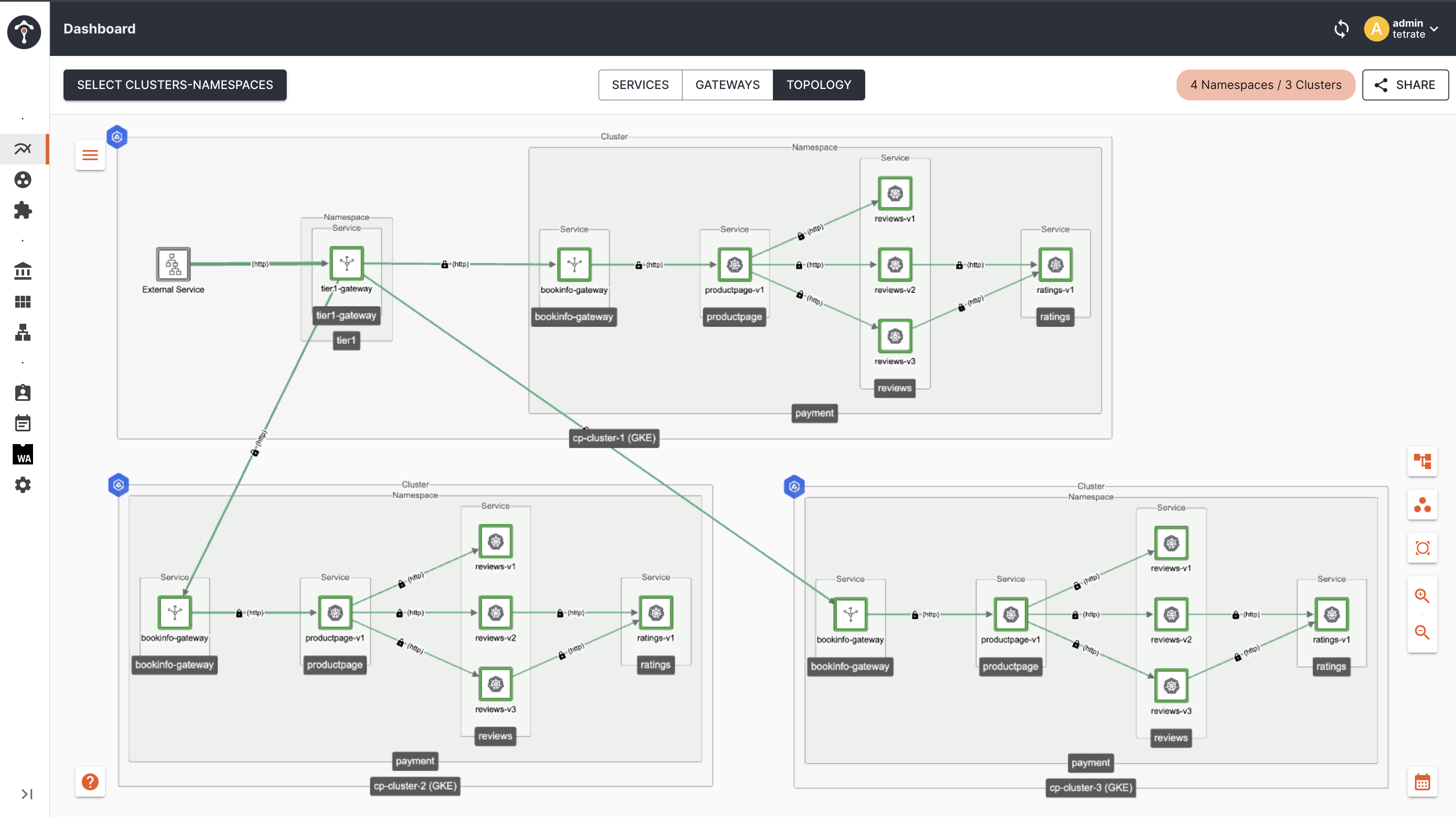

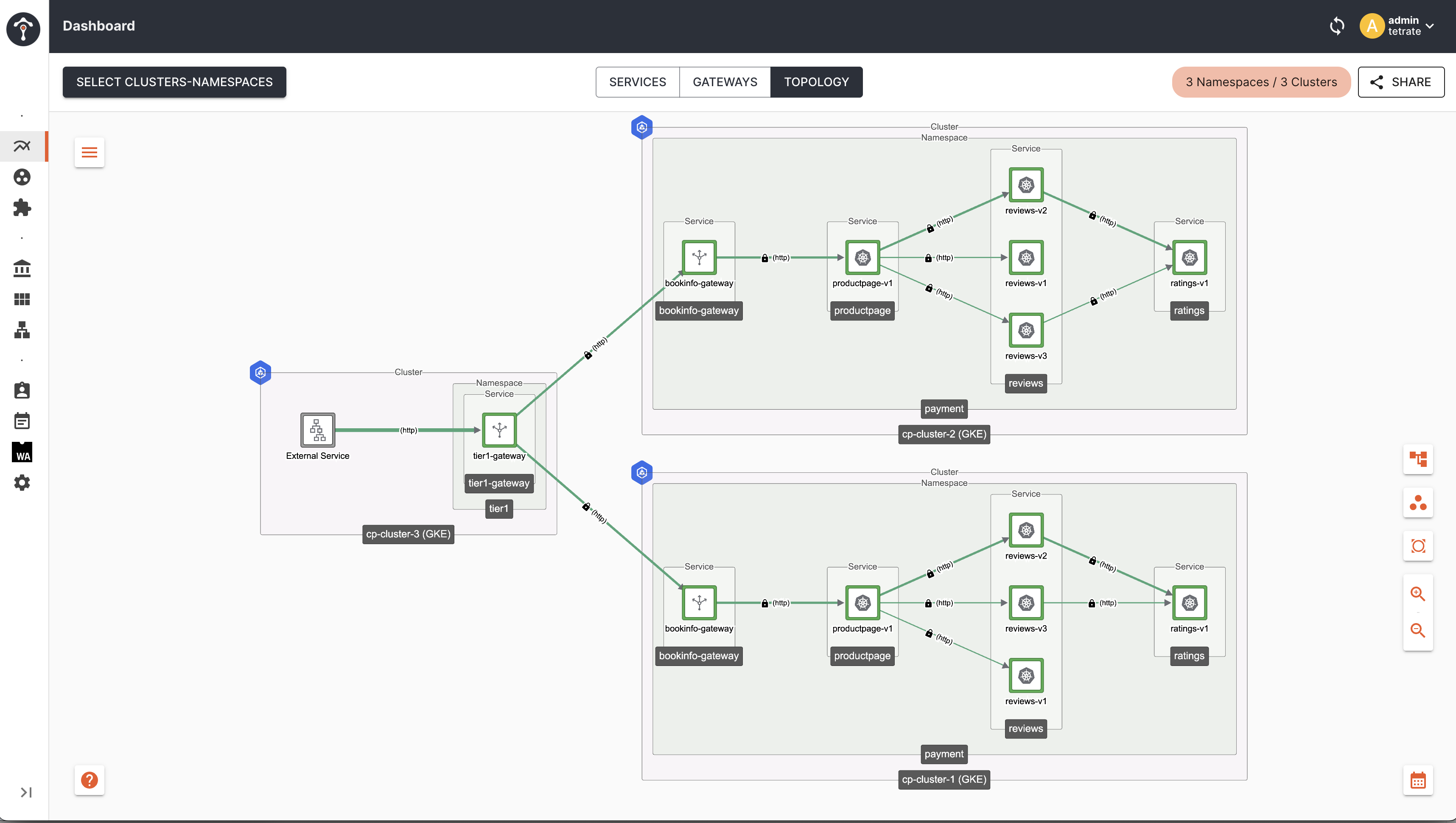

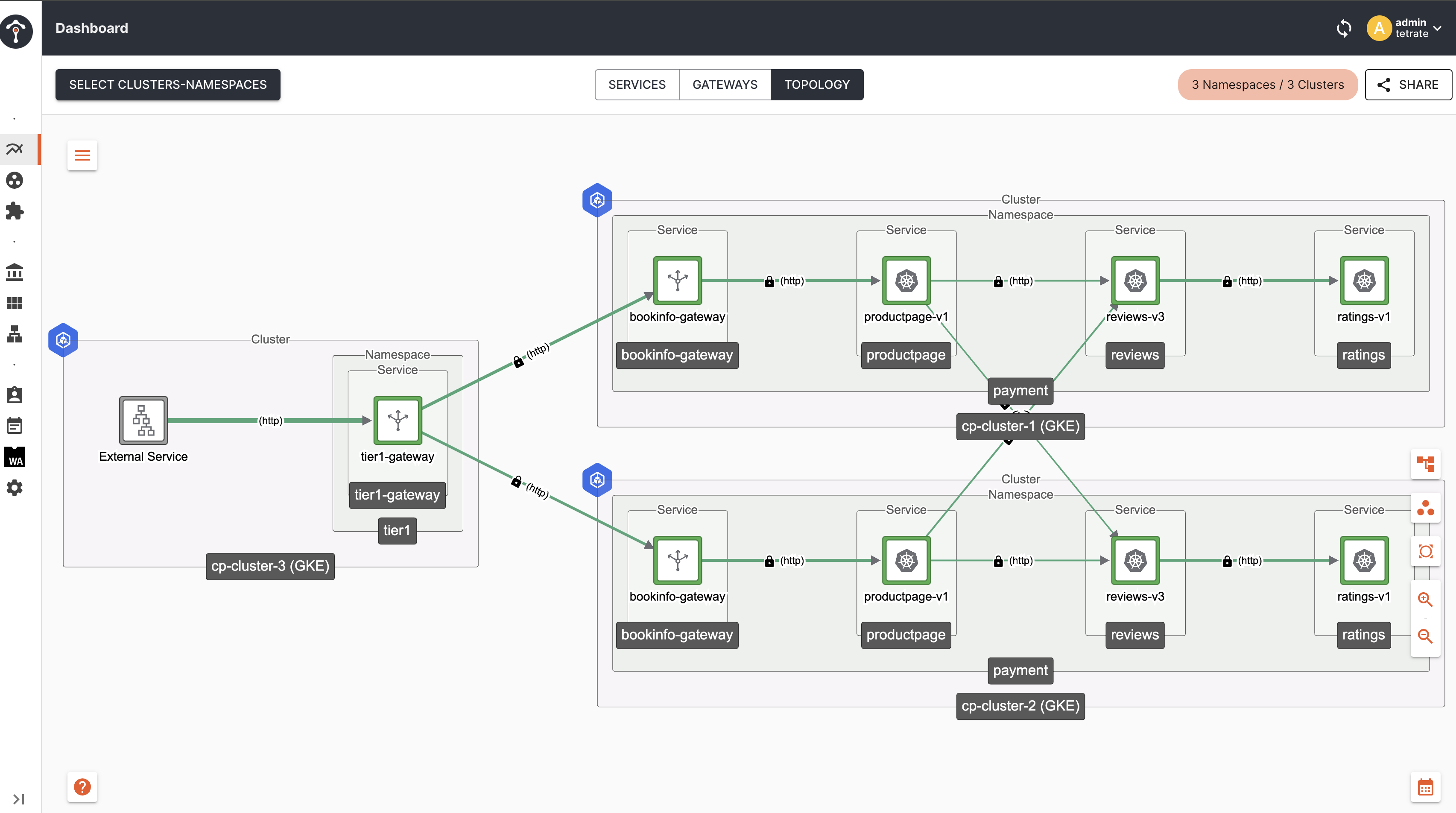

Topology

As the topology shows, traffic is now distributed across all Tier2 Gateway endpoints regardless of their locality. This happens because the client proxy, i.e. the Tier1 Gateway, does not carry any failover.tetrate.io labels yet, so all endpoints across cp-cluster-1, cp-cluster-2, and cp-cluster-3 get equal priority.
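The ranking behind this behaviour can be illustrated with a small model. The bash function below is a hypothetical sketch modelled on Istio's documented failoverPriority behaviour, not TSB or Istio source code: an endpoint's priority is the number of list entries remaining after the leading run of entries on which it matches the client, and priority 0 is used first. Two further points are assumptions of the sketch: a label missing on either side counts as a mismatch (which is why a client without failover.tetrate.io labels ranks every endpoint equally), and an entry written as key=value, such as the "version=v2" entry used with ServiceTrafficSetting in this document, is compared against that fixed value instead of the client's label.

```bash
#!/usr/bin/env bash
# Hypothetical model (not TSB or Istio code) of how an ordered failoverPriority
# list ranks one endpoint for one client proxy. Labels are passed as
# space-separated key=value pairs. Priority 0 is used first; a higher number is
# used only when no healthy endpoint with a lower number remains.

# Print the value of label key $1 found in the key=value list $2.
label_value() {
  local want=$1 pair
  for pair in $2; do
    if [[ "${pair%%=*}" == "$want" ]]; then
      printf '%s\n' "${pair#*=}"
      return 0
    fi
  done
  return 1
}

# failover_priority "<priority list>" "<client labels>" "<endpoint labels>"
failover_priority() {
  local -a entries
  read -r -a entries <<< "$1"
  local client=$2 endpoint=$3 matched=0 entry key expected actual
  for entry in "${entries[@]}"; do
    if [[ "$entry" == *=* ]]; then
      # key=value entry (assumption): compare the endpoint with a fixed value.
      key=${entry%%=*}
      expected=${entry#*=}
    else
      # Plain key: compare the endpoint with the client's own label value.
      # Assumption: a label missing on the client counts as a mismatch.
      key=$entry
      expected=$(label_value "$key" "$client") || break
    fi
    actual=$(label_value "$key" "$endpoint") || break
    [[ "$actual" == "$expected" ]] || break
    matched=$((matched + 1))
  done
  # Only the leading run of matching entries counts.
  echo $(( ${#entries[@]} - matched ))
}

# Example (prints 1: the client's active-active value does not match):
#   failover_priority "failover.tetrate.io/fault-domain" \
#     "failover.tetrate.io/fault-domain=active-active" \
#     "failover.tetrate.io/fault-domain=active-standby"
```

With one priority entry, a matching endpoint gets 0 and a non-matching one gets 1. A client with no fault-domain label therefore places every endpoint at 1, which is the equal spread seen in the topology above.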

Apply Custom Failover Priority Labels at the Client Workloads

Modify the tier1-gateway workload to add the following label to its pods. Istio/Envoy then gives the highest priority to endpoints whose failover.tetrate.io/fault-domain label has the same value as the client's, i.e. active-active. Endpoints with a different value are used only when no matching endpoint is available.

```yaml
apiVersion: install.tetrate.io/v1alpha1
kind: Gateway
metadata:
  name: tier1-gateway
  namespace: tier1
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer
    overlays:
      - apiVersion: apps/v1
        kind: Deployment
        name: tier1-gateway
        patches:
          - path: spec.template.metadata.labels.failover\.tetrate\.io/fault-domain
            value: active-active
```

Apply using kubectl:

```bash
kubectl apply -f tier1-gateway-patch.yaml -n tier1
```
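To confirm that the overlay reached the pods, list the Tier1 Gateway pods with their fault-domain label. The sketch assumes the pods carry the app: tier1-gateway label used by the workloadSelector above, and that you pass the kubeconfig context of the cluster running the Tier1 Gateway. The helper name check_client_fault_domain is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical check: prints the failover.tetrate.io/fault-domain label of each
# Tier1 Gateway pod and returns non-zero if no pod is found or any pod lacks
# the expected value.
#   $1 = kubeconfig context of the Tier1 cluster
#   $2 = expected value (defaults to active-active)
check_client_fault_domain() {
  local ctx=$1 expected=${2:-active-active} name value found=0 bad=0
  while read -r name value; do
    found=$((found + 1))
    echo "${name}: ${value:-<unset>}"
    [[ "$value" == "$expected" ]] || bad=1
  done < <(kubectl --context "$ctx" -n tier1 get pods -l app=tier1-gateway \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.metadata.labels.failover\.tetrate\.io/fault-domain}{"\n"}{end}')
  (( found > 0 )) || return 1
  return "$bad"
}
# Example: check_client_fault_domain cp-cluster-3 active-active
```

Existing pods only pick up the new label after the Deployment rolls them, so a failing check right after applying the patch may just mean the rollout has not finished.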

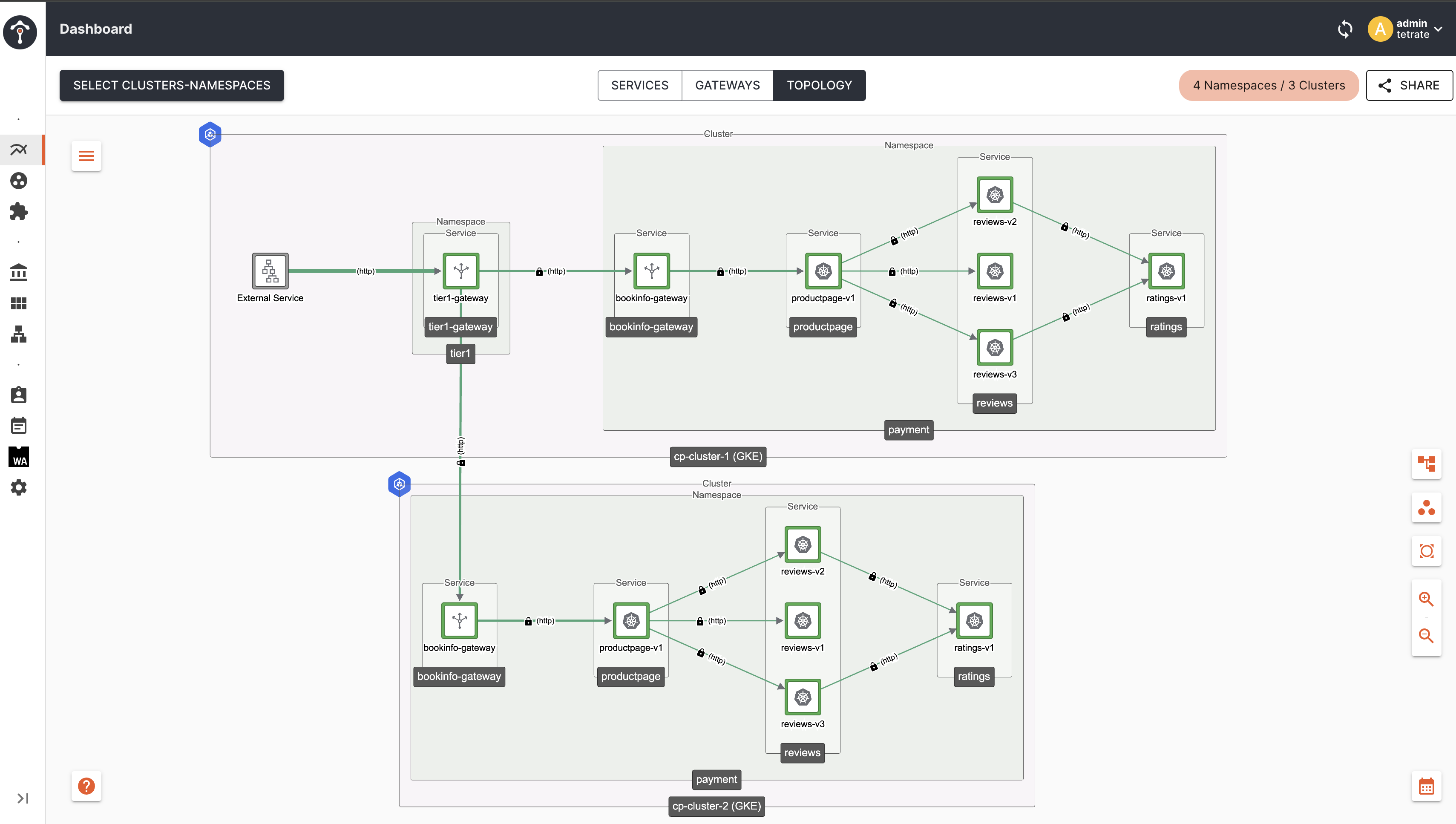

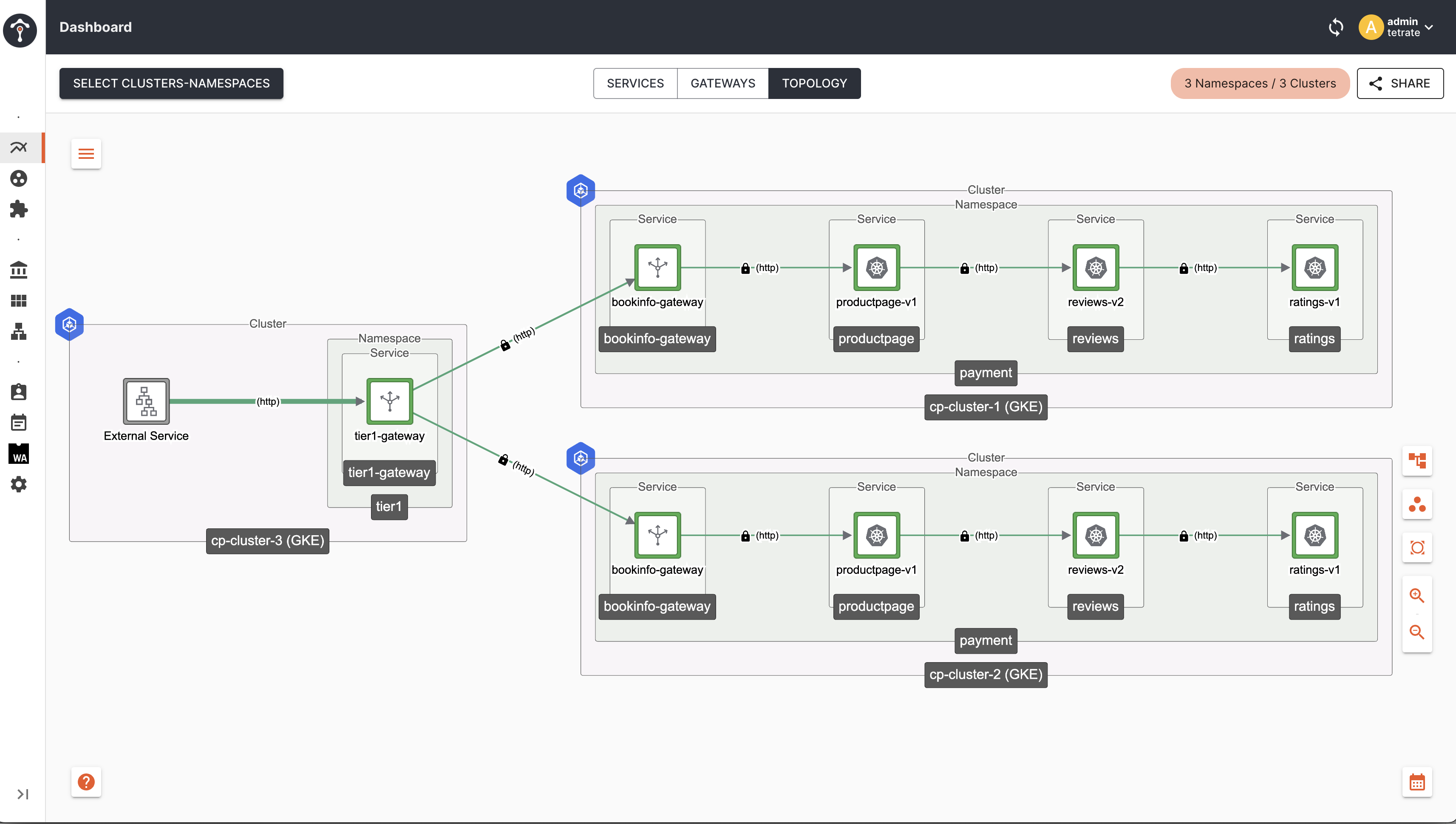

Topology

As the topology shows, traffic is now limited to cp-cluster-1 and cp-cluster-2, whose Tier2 Gateway endpoints match the failover.tetrate.io/fault-domain: active-active label.

Trigger Failover

Next, trigger a failover within the active-active domain, and then to the active-standby domain.

Failover within active-active domain

Scale down the Tier2 Gateway workload in cp-cluster-1:

```bash
kubectl scale --replicas=0 deployment bookinfo-gateway -n payment
```

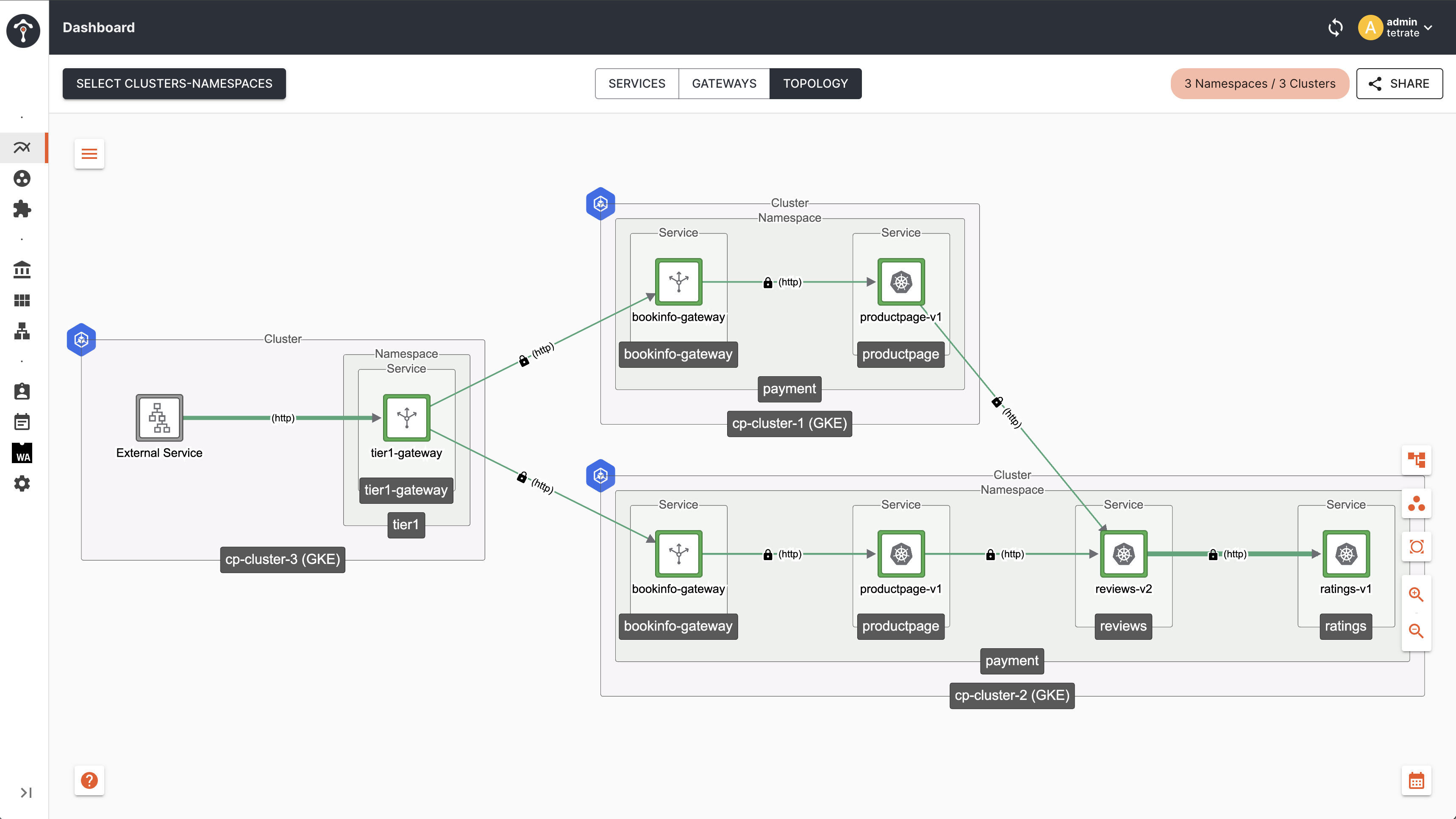

Topology

As the topology shows, traffic now fails over to cp-cluster-2, because the Tier2 Gateway endpoints in cp-cluster-2 match the client (Tier1 Gateway) label failover.tetrate.io/fault-domain: active-active.

Failover from active-active to active-standby

Scale down the Tier2 Gateway workload in cp-cluster-2:

```bash
kubectl scale --replicas=0 deployment bookinfo-gateway -n payment
```

Topology

As the topology shows, traffic now fails over to cp-cluster-3, whose Tier2 Gateway endpoints carry the lower-priority label failover.tetrate.io/fault-domain: active-standby, because no active-active endpoints remain available.
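The scale commands above act on whichever cluster your current kubeconfig context points to. A slightly safer sketch names the cluster explicitly and can also restore the gateway once you have finished observing failover. It assumes kubeconfig contexts named after the clusters, and restores a single replica by default because the original replica count of the bookinfo-gateway Deployment is not stated here. The helper name scale_tier2_gateway is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: scales the Tier2 Gateway Deployment in one cluster.
#   $1 = kubeconfig context
#   $2 = replica count (default 1, an assumption about the original count)
scale_tier2_gateway() {
  local ctx=$1 replicas=${2:-1}
  if ! [[ "$replicas" =~ ^[0-9]+$ ]]; then
    echo "replica count must be a non-negative integer: ${replicas}" >&2
    return 2
  fi
  kubectl --context "$ctx" -n payment scale deployment bookinfo-gateway \
    --replicas="$replicas" || return 1
  if (( replicas > 0 )); then
    # Wait for the restored replicas to become ready before sending traffic.
    kubectl --context "$ctx" -n payment rollout status \
      deployment/bookinfo-gateway --timeout=120s
  fi
}
# Failover steps: scale_tier2_gateway cp-cluster-1 0; scale_tier2_gateway cp-cluster-2 0
# Restore:        scale_tier2_gateway cp-cluster-1; scale_tier2_gateway cp-cluster-2
```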

Scenario: Service to Service Routing & Failover

Starting from TSB 1.10.0, service-to-service failover can be configured for individual services using ServiceTrafficSetting.

What is the use-case?

Consider a scenario where different versions of a service have been deployed across multiple clusters, and the user wants traffic always routed to a specific version. If the desired version is not available locally within the cluster, the user expects traffic to fail over to an instance of the same service version in a different cluster.

In this example, we have bookinfo deployed on cp-cluster-1 and cp-cluster-2 and exposed over an IngressGateway. We have a dedicated cluster cp-cluster-3 where the Tier1 Gateway has been deployed to route to both cp-cluster-1 and cp-cluster-2.

A ServiceRoute configures productpage to always route to reviews-v2. When reviews-v2 becomes unavailable in one of the clusters, traffic is expected to fail over to the other cluster where reviews-v2 is available.

Deploy Bookinfo application

Create the payment namespace in cp-cluster-1 and cp-cluster-2, label it with istio-injection=enabled to enable sidecar injection, and deploy bookinfo:

```bash
kubectl create namespace payment
kubectl label namespace payment istio-injection=enabled --overwrite=true
kubectl apply -f https://docs.tetrate.io/examples/flagger/bookinfo.yaml -n payment
```

Create the TSB Tenant and Workspace configuration for bookinfo:

```yaml
apiVersion: tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: payment
  annotations:
    tsb.tetrate.io/organization: tetrate
spec:
  displayName: Payment
---
apiVersion: tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: payment-ws
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
spec:
  namespaceSelector:
    names:
      - "cp-cluster-1/payment"
      - "cp-cluster-2/payment"
  displayName: payment-ws
```

Apply the configuration using kubectl:

```bash
kubectl apply -f bookinfo.yaml -n payment
```

Create Bookinfo IngressGateway Configuration

```yaml
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: bookinfo-gg
  namespace: payment
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
spec:
  displayName: Bookinfo Gateway Group
  namespaceSelector:
    names:
      - "cp-cluster-1/payment"
      - "cp-cluster-2/payment"
  configMode: BRIDGED
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: bookinfo-ingress-gateway
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
    tsb.tetrate.io/gatewayGroup: bookinfo-gg
spec:
  displayName: Bookinfo Ingress
  workloadSelector:
    namespace: payment
    labels:
      app: bookinfo-gateway
  http:
    - hostname: bookinfo.tetrate.io
      name: bookinfo-tetrate
      port: 80
      routing:
        rules:
          - route:
              serviceDestination:
                host: payment/productpage.payment.svc.cluster.local
                port: 9080
```

Apply the configuration using kubectl:

```bash
kubectl apply -f bookinfo.yaml -n payment
```

Create a Gateway that acts as the Tier1 Gateway and distributes traffic to the Tier2 Gateways deployed in cp-cluster-1 and cp-cluster-2:

```yaml
apiVersion: tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: tier1
  annotations:
    tsb.tetrate.io/organization: tetrate
spec:
  displayName: Tier1
---
apiVersion: tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: tier1-ws
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
spec:
  displayName: Tier1
  namespaceSelector:
    names:
      - "cp-cluster-3/tier1"
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: tier1-gg
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1-ws
spec:
  displayName: Tier1 Gateway Group
  namespaceSelector:
    names:
      - "cp-cluster-3/tier1"
  configMode: BRIDGED
---
apiVersion: install.tetrate.io/v1alpha1
kind: Gateway
metadata:
  name: tier1-gateway
  namespace: tier1
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: t1-gateway
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1-ws
    tsb.tetrate.io/gatewayGroup: tier1-gg
spec:
  displayName: T1 Gateway
  workloadSelector:
    namespace: tier1
    labels:
      app: tier1-gateway
  http:
    - hostname: bookinfo.tetrate.io
      port: 80
      name: bookinfo-tetrate
      routing:
        rules:
          - route:
              clusterDestination: {}
```

Apply the configuration using kubectl:

```bash
kubectl apply -f gateway-config.yaml -n tier1
```

Default Behaviour

By default, TSB configures topologyChoice: CLUSTER, which keeps traffic within the same nodes or cluster as the originating client, based on the client's locality.

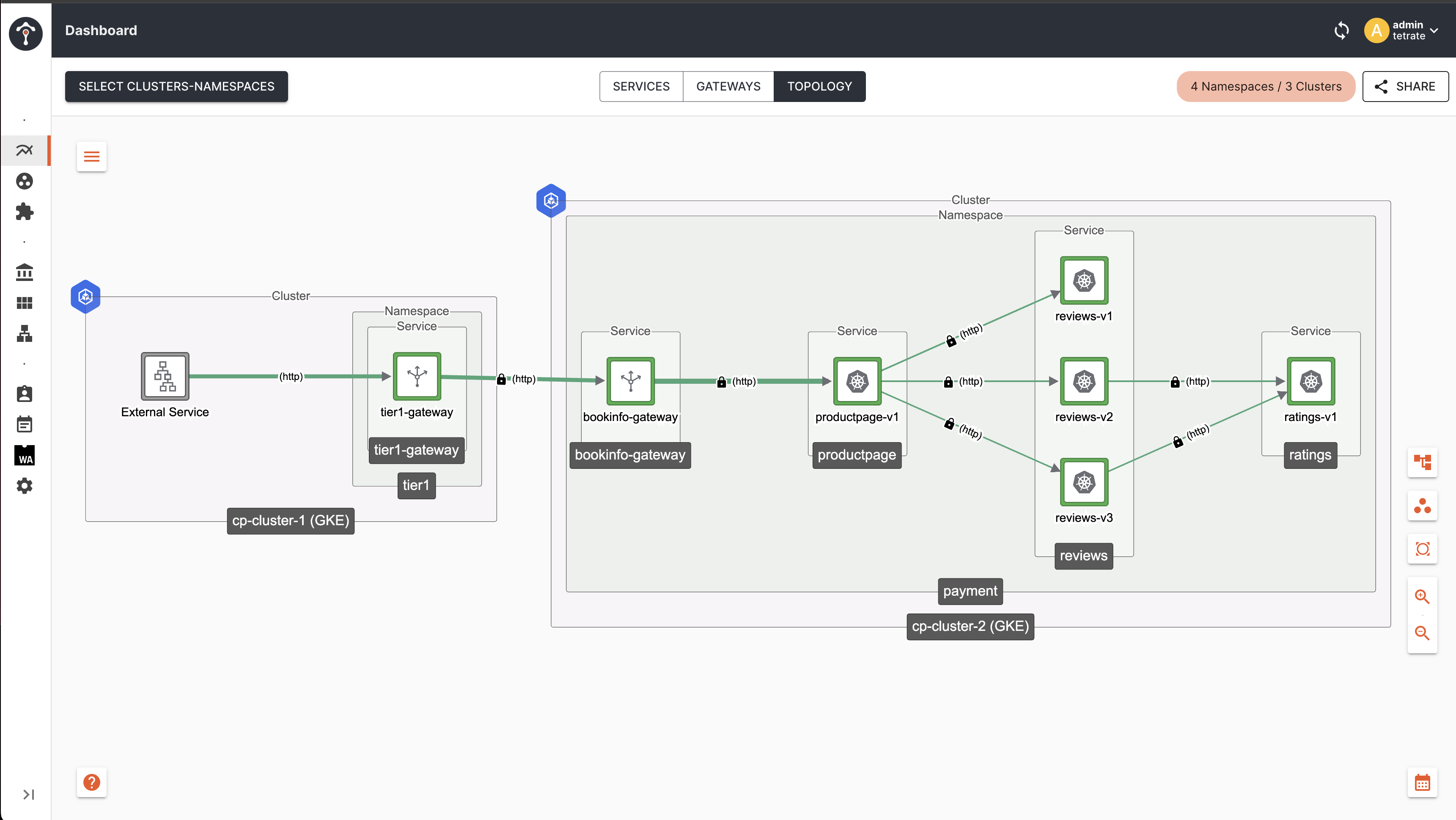

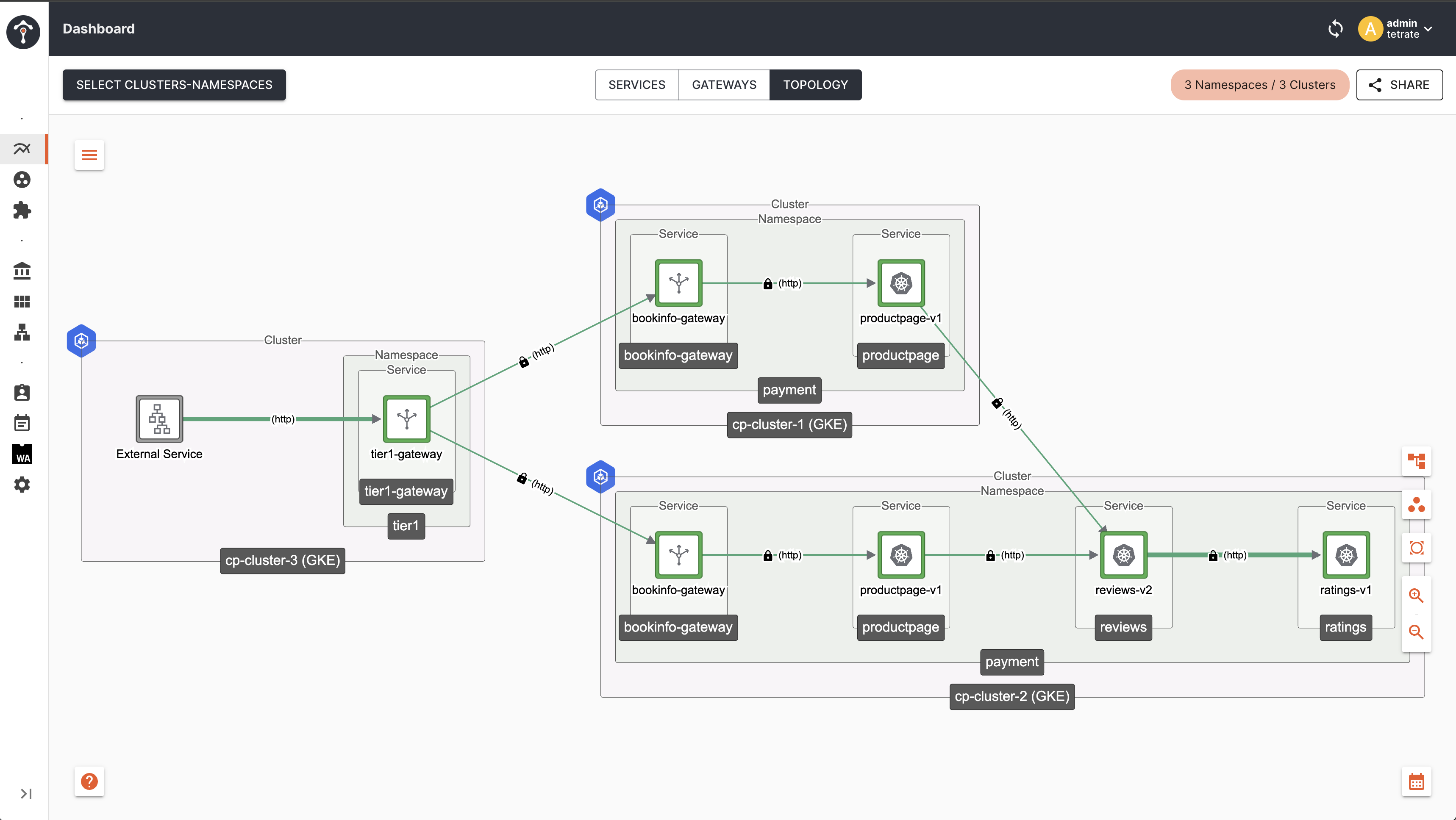

Topology

As the topology shows, traffic from productpage is distributed to all reviews services regardless of their version.
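The ServiceRoute and ServiceTrafficSetting in this scenario are annotated with tsb.tetrate.io/trafficGroup: payment-tg, but the steps so far do not create a traffic group with that name. If it does not already exist in your environment, a minimal sketch is shown below. It assumes a traffic.tsb.tetrate.io/v2 Group that mirrors the gateway group created earlier (same workspace, same namespaces, BRIDGED mode). The displayName and the helper name apply_payment_traffic_group are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: creates the payment-tg traffic group referenced by the
# ServiceRoute and ServiceTrafficSetting in this scenario.
apply_payment_traffic_group() {
  kubectl apply -n payment -f - <<'EOF'
apiVersion: traffic.tsb.tetrate.io/v2
kind: Group
metadata:
  name: payment-tg
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
spec:
  displayName: Payment Traffic Group
  namespaceSelector:
    names:
      - "cp-cluster-1/payment"
      - "cp-cluster-2/payment"
  configMode: BRIDGED
EOF
}
# Usage: apply_payment_traffic_group
```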

Configure ServiceRoute

Configure ServiceRoute for reviews version v2

```yaml
apiVersion: traffic.tsb.tetrate.io/v2
kind: ServiceRoute
metadata:
  name: reviews-sr
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
    tsb.tetrate.io/trafficGroup: payment-tg
spec:
  service: payment/reviews.payment.svc.cluster.local
  subsets:
    - name: v2
      labels:
        version: v2
```

Apply using kubectl:

```bash
kubectl apply -f reviews-sr.yaml -n payment
```

Configure ServiceTrafficSetting

Configure failover priority for the reviews service and limit failover to version v2 only, using a ServiceTrafficSetting:

```yaml
apiVersion: traffic.tsb.tetrate.io/v2
kind: ServiceTrafficSetting
metadata:
  name: payment-sts
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
    tsb.tetrate.io/trafficGroup: payment-tg
spec:
  service: payment/reviews.payment.svc.cluster.local
  settings:
    inbound:
      failoverSettings:
        failoverPriority:
          # First, prefer endpoints labelled version=v2.
          - "version=v2"
          # Then, prefer endpoints on the same network as the client.
          - "topology.istio.io/network"
```

Apply using kubectl:

```bash
kubectl apply -f reviews-sts.yaml -n payment
```

Topology

As the topology shows, productpage now routes only to reviews-v2 within the same cluster.

Trigger Failover

Trigger a failover by scaling down reviews-v2 in cp-cluster-1.
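No command is given for this step. A minimal sketch is below. It assumes the bookinfo manifest creates a Deployment named reviews-v2 in the payment namespace, as the upstream Istio Bookinfo sample does, and that cp-cluster-1 is reachable through a kubeconfig context of the same name. The helper name scale_reviews is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: scales one reviews version in one cluster.
#   $1 = kubeconfig context, $2 = version suffix (v1, v2, v3), $3 = replicas
scale_reviews() {
  local ctx=$1 version=$2 replicas=$3
  if [[ -z "$ctx" || -z "$version" || -z "$replicas" ]]; then
    echo "usage: scale_reviews <context> <version> <replicas>" >&2
    return 2
  fi
  kubectl --context "$ctx" -n payment scale deployment "reviews-${version}" \
    --replicas="$replicas"
}
# Trigger the failover: scale_reviews cp-cluster-1 v2 0
# Restore afterwards:   scale_reviews cp-cluster-1 v2 1   (assumed original count)
```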

Topology

When reviews-v2 becomes unavailable in cp-cluster-1, productpage routes only to reviews-v2 in cp-cluster-2 and does not fall back to any other version of reviews.

Scenario: Local Traffic Management Using TopologyChoice

Starting from TSB version 1.9.0, topologyChoice can be configured in WorkspaceSettings for a set of application workloads, or organization-wide in OrganizationSettings, to control traffic management.

```yaml
apiVersion: tsb.tetrate.io/v2
kind: WorkspaceSetting
metadata:
  name: workspace-setting
  annotations:
    tsb.tetrate.io/organization: <organization_name>
    tsb.tetrate.io/tenant: <tenant_name>
    tsb.tetrate.io/workspace: <workspace_name>
spec:
  defaultTrafficSetting:
    inbound:
      failoverSettings:
        topologyChoice: CLUSTER # or LOCALITY
```

There are two options:

- CLUSTER: Gives higher priority to endpoints that are local to the cluster, using the failoverPriority labels configured for each proxy endpoint.

- LOCALITY: Gives higher priority to endpoints based on locality (region/zone/subzone) than to endpoints that are merely local to the cluster.

Configuration

In this example, only the reviews-v3 service in cp-cluster-1 and cp-cluster-2 is exposed for EastWest routing, so you can see how load-balancing behaviour changes with the topologyChoice configuration.

Deploy Bookinfo application

Create the payment namespace in both cp-cluster-1 and cp-cluster-2, label it with istio-injection=enabled to enable sidecar injection, and deploy bookinfo:

```bash
kubectl create namespace payment
kubectl label namespace payment istio-injection=enabled --overwrite=true
kubectl apply -f https://docs.tetrate.io/examples/flagger/bookinfo.yaml -n payment
```

Create the TSB Tenant and Workspace configuration for bookinfo:

```yaml
apiVersion: tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: payment
  annotations:
    tsb.tetrate.io/organization: tetrate
spec:
  displayName: Payment
---
apiVersion: tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: payment-ws
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
spec:
  namespaceSelector:
    names:
      - "cp-cluster-1/payment"
      - "cp-cluster-2/payment"
  displayName: payment-ws
```

Apply the configuration using kubectl:

```bash
kubectl apply -f bookinfo.yaml -n payment
```

Create Bookinfo IngressGateway Configuration

```yaml
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: bookinfo-gg
  namespace: payment
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
spec:
  displayName: Bookinfo Gateway Group
  namespaceSelector:
    names:
      - "cp-cluster-1/payment"
      - "cp-cluster-2/payment"
  configMode: BRIDGED
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: bookinfo-ingress-gateway
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
    tsb.tetrate.io/gatewayGroup: bookinfo-gg
spec:
  displayName: Bookinfo Ingress
  workloadSelector:
    namespace: payment
    labels:
      app: bookinfo-gateway
  http:
    - hostname: bookinfo.tetrate.io
      name: bookinfo-tetrate
      port: 80
      routing:
        rules:
          - route:
              serviceDestination:
                host: payment/productpage.payment.svc.cluster.local
                port: 9080
```

Apply the configuration using kubectl:

```bash
kubectl apply -f bookinfo.yaml -n payment
```

Deploy a Gateway as the Tier1 Gateway in cp-cluster-3 to distribute traffic to cp-cluster-1 and cp-cluster-2:

```yaml
apiVersion: tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: tier1
  annotations:
    tsb.tetrate.io/organization: tetrate
spec:
  displayName: Tier1
---
apiVersion: tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: tier1-ws
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
spec:
  displayName: Tier1
  namespaceSelector:
    names:
      - "cp-cluster-3/tier1"
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: tier1-gg
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1-ws
spec:
  displayName: Tier1 Gateway Group
  namespaceSelector:
    names:
      - "cp-cluster-3/tier1"
  configMode: BRIDGED
---
apiVersion: install.tetrate.io/v1alpha1
kind: Gateway
metadata:
  name: tier1-gateway
  namespace: tier1
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: t1-gateway
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1-ws
    tsb.tetrate.io/gatewayGroup: tier1-gg
spec:
  displayName: T1 Gateway
  workloadSelector:
    namespace: tier1
    labels:
      app: tier1-gateway
  http:
    - hostname: bookinfo.tetrate.io
      port: 80
      name: bookinfo-tetrate
      routing:
        rules:
          - route:
              clusterDestination: {}
```

Apply the configuration using kubectl:

```bash
kubectl apply -f gateway-config.yaml -n tier1
```

TopologyChoice as CLUSTER

First, configure the workspace settings with topologyChoice set to CLUSTER:

```yaml
apiVersion: tsb.tetrate.io/v2
kind: WorkspaceSetting
metadata:
  name: payment-wss
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
spec:
  defaultTrafficSetting:
    inbound:
      failoverSettings:
        topologyChoice: CLUSTER
  defaultEastWestGatewaySettings:
    - workloadSelector:
        namespace: payment
        labels:
          app: bookinfo-gateway
      exposedServices:
        - serviceLabels:
            failover: enable
            app: reviews
```

Apply the configuration using kubectl:

```bash
kubectl apply -f payment-wss.yaml -n payment
```
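The exposedServices selector above matches only Services that carry both failover: enable and app: reviews. If the Service you want to expose (reviews-v3 in this example) does not already have the failover: enable label, you can add it in each cluster. This sketch is hypothetical: it takes the Service name as an argument rather than assuming one, checks the app label first, and expects kubeconfig contexts named after the clusters. The helper name expose_for_eastwest is illustrative only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: adds failover=enable to one Service so that the
# exposedServices selector (failover: enable, app: reviews) can match it.
#   $1 = kubeconfig context, $2 = Service name in the payment namespace
expose_for_eastwest() {
  local ctx=$1 svc=$2 app
  if [[ -z "$ctx" || -z "$svc" ]]; then
    echo "usage: expose_for_eastwest <context> <service>" >&2
    return 2
  fi
  # The selector also requires app: reviews, so check it before labelling.
  app=$(kubectl --context "$ctx" -n payment get service "$svc" \
    -o jsonpath='{.metadata.labels.app}') || return 1
  if [[ "$app" != "reviews" ]]; then
    echo "service ${svc} in ${ctx} has app=${app:-<unset>}, not reviews" >&2
    return 1
  fi
  kubectl --context "$ctx" -n payment label service "$svc" failover=enable --overwrite
}
# Example: expose_for_eastwest cp-cluster-1 reviews-v3; expose_for_eastwest cp-cluster-2 reviews-v3
```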

Send some requests to the Tier1 Gateway service endpoint in cp-cluster-3 and observe the traffic in the topology UI.

Export the Tier1 Gateway Service IP:

```bash
export GATEWAY_IP=$(kubectl -n tier1 get service tier1-gateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
```

Send a request:

```bash
curl http://bookinfo.tetrate.io/api/v1/products/1/reviews --resolve "bookinfo.tetrate.io:80:$GATEWAY_IP" -v
```
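A single request shows only one path through the mesh. To generate enough traffic for the topology view, the same curl call can be repeated in a loop. The sketch below reuses the GATEWAY_IP variable exported above and prints a tally of HTTP status codes, so failed requests during a failover are easy to spot. The default request count and the helper name send_requests are arbitrary choices. It requires bash 4 or later for associative arrays.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sends N requests through the Tier1 Gateway and prints a
# count per HTTP status code (curl reports 000 when it cannot connect).
#   $1 = number of requests (default 20)
send_requests() {
  local count=${1:-20} i code
  local -A tally=()
  if [[ -z "${GATEWAY_IP:-}" ]]; then
    echo "GATEWAY_IP is not set" >&2
    return 2
  fi
  for (( i = 0; i < count; i++ )); do
    code=$(curl -s -o /dev/null -w '%{http_code}' \
      --resolve "bookinfo.tetrate.io:80:${GATEWAY_IP}" \
      http://bookinfo.tetrate.io/api/v1/products/1/reviews || true)
    code=${code:-000}
    tally[$code]=$(( ${tally[$code]:-0} + 1 ))
  done
  for code in "${!tally[@]}"; do
    echo "HTTP ${code}: ${tally[$code]}"
  done
}
# Example: send_requests 50
```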

Topology

As the topology shows, traffic from productpage to reviews-v3 stays within the cluster, even though the EastWest Gateway is enabled and remote endpoints are available in the other cluster.

TopologyChoice as LOCALITY

Next, configure the workspace settings with topologyChoice set to LOCALITY:

```yaml
apiVersion: tsb.tetrate.io/v2
kind: WorkspaceSetting
metadata:
  name: payment-wss
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
spec:
  defaultTrafficSetting:
    inbound:
      failoverSettings:
        topologyChoice: LOCALITY
  defaultEastWestGatewaySettings:
    - workloadSelector:
        namespace: payment
        labels:
          app: bookinfo-gateway
      exposedServices:
        - serviceLabels:
            failover: enable
            app: reviews
```

Apply the configuration using kubectl:

```bash
kubectl apply -f payment-wss.yaml -n payment
```

Send some requests to the Tier1 Gateway service endpoint in cp-cluster-3 and observe the traffic in the topology UI.

Export the Tier1 Gateway Service IP:

```bash
export GATEWAY_IP=$(kubectl -n tier1 get service tier1-gateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
```

Send a request:

```bash
curl http://bookinfo.tetrate.io/api/v1/products/1/reviews --resolve "bookinfo.tetrate.io:80:$GATEWAY_IP" -v
```

Topology

As the topology shows, traffic from productpage to reviews-v3 is now distributed across clusters.

Failover

When reviews-v3 becomes unavailable in either cluster, traffic fails over to the other cluster regardless of the topologyChoice value.