Edge Gateway Failover with Multi Cluster Weighted Traffic Distribution

This document extends the Priority-Based Multi-Cluster Traffic Routing and Failover guide. With enhancements introduced in TSB 1.12, users can now configure a custom failoverPriority order, which gives greater control over how fallback clusters are selected during a failover.

This document provides detailed guidance on configuring failoverSettings to enable failovers between Tier 1 and Tier 2 Gateways spanning multiple regional clusters, based on a custom failover priority order.

Use case

Organizations operating across multiple geographic regions often deploy Tier 1 and Tier 2 Gateways across multiple regional clusters and different availability zones to ensure high availability and resilience. In a failover event, the default behavior may not align with business or operational priorities, leading to inefficient routing and potential service disruptions.

With TSB 1.12, users can define a custom failover priority order, ensuring that traffic is rerouted in a controlled and predictable manner. By leveraging failoverSettings, enterprises can:

- Prioritize specific clusters based on latency, capacity, or geographic proximity.

- Ensure seamless failover transitions between Tier 1 and Tier 2 Gateways.

- Minimize downtime and optimize performance during regional outages or network disruptions.

This document provides a step-by-step configuration guide to implementing this feature effectively.

How to Configure FailoverPriority Settings

FailoverPriority is an ordered list of labels used for priority-based load balancing, enabling traffic failover across different endpoint groups. These labels are matched on both the client and endpoints to determine endpoint priority dynamically. A label is considered for matching only if all preceding labels match; for example, an endpoint is assigned priority P(0) if all labels match, P(1) if the first n-1 labels match, and so on.
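The matching rule can be sketched in Python as follows. This is a simplified model written for illustration and is not TSB or Istio source code. The function name, the example label keys region and zone, and the choice to treat a label missing on the client as a non-match are all assumptions. Entries written as key=value (the form used later in this guide) are assumed to compare the endpoint's label with the given value instead of with the client's label.

```python
from typing import Mapping, Sequence


def endpoint_priority(
    failover_priority: Sequence[str],
    client_labels: Mapping[str, str],
    endpoint_labels: Mapping[str, str],
) -> int:
    """Return the priority of one endpoint as seen by one client (0 = highest).

    A bare entry ("key") compares the client's value for that key with the
    endpoint's value. An entry written as "key=value" compares the endpoint's
    label with the given value instead. Matching stops at the first entry
    that does not match, so a label counts only if all earlier labels matched.
    """
    matched = 0
    for entry in failover_priority:
        key, sep, value = entry.partition("=")
        # Assumption: a label missing on the client is treated as a non-match.
        expected = value if sep else client_labels.get(key)
        if expected is None or endpoint_labels.get(key) != expected:
            break
        matched += 1
    # All n labels match -> P(0); only the first n-1 match -> P(1); and so on.
    return len(failover_priority) - matched


if __name__ == "__main__":
    order = ["region", "zone"]  # hypothetical label keys
    client = {"region": "us-east", "zone": "a"}
    for endpoint in (
        {"region": "us-east", "zone": "a"},  # both match -> P(0)
        {"region": "us-east", "zone": "b"},  # only region matches -> P(1)
        {"region": "eu-west", "zone": "a"},  # region differs, zone ignored -> P(2)
    ):
        print(endpoint, "->", endpoint_priority(order, client, endpoint))
```

The third endpoint shows why order matters. Its zone label matches the client, but it still lands in the lowest priority because the preceding region label does not match.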

Failover Label Convention

To simplify configuration for end users, we have established the following failover label convention:

- Key format: failover.tetrate.io/<domain>

- Value format: always set to active (constant)

Defining Failover Priority on Client Gateway

The client gateway (GW), i.e. the Tier 1 Gateway in this example, defines the failover priority order, so traffic is routed according to the listed domains. In the example below, domain1 has a higher priority than domain2:

failover.tetrate.io/domain1: active

failover.tetrate.io/domain2: active
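The same label is written in two notations. It appears as key: value on a Tier 2 gateway Service and as key=value in the client's failoverPriority list. A small helper can keep the two consistent. The sketch below is hypothetical: the function names and the rule used to validate domain names are assumptions and are not part of TSB. It builds both forms from a domain name and flags failover labels whose value is not active.

```python
import re

# Convention from this guide: key "failover.tetrate.io/<domain>", value always "active".
FAILOVER_PREFIX = "failover.tetrate.io/"
FAILOVER_VALUE = "active"

# Assumption: <domain> must be a valid Kubernetes label name
# (1-63 characters, alphanumerics plus "-", "_" and ".", alphanumeric at both ends).
_DOMAIN_RE = re.compile(r"[A-Za-z0-9]([A-Za-z0-9._-]{0,61}[A-Za-z0-9])?")


def failover_label(domain: str) -> tuple[str, str]:
    """Label (key, value) to put on a Tier 2 gateway Service for one domain."""
    if not _DOMAIN_RE.fullmatch(domain):
        raise ValueError(f"invalid domain name: {domain!r}")
    return FAILOVER_PREFIX + domain, FAILOVER_VALUE


def failover_priority(domains: list[str]) -> list[str]:
    """failoverPriority entries for the client gateway, highest priority first."""
    return ["=".join(failover_label(d)) for d in domains]


def nonconforming_keys(labels: dict[str, str]) -> list[str]:
    """failover.tetrate.io/* keys whose value is not the constant "active"."""
    return [k for k, v in labels.items()
            if k.startswith(FAILOVER_PREFIX) and v != FAILOVER_VALUE]


if __name__ == "__main__":
    print(failover_priority(["domain1", "domain2"]))
```

Calling failover_priority(["domain1", "domain2"]) produces the two entries used in the Tier 1 Gateway configuration that follows, in the same order.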

Example: Tier1 Gateway Configuration

The following configuration shows how to configure the Tier 1 Gateway with failoverPriority settings:

apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: tier1-gateway
  namespace: tier1
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1
    tsb.tetrate.io/gatewayGroup: tier1-gateway-group
spec:
  workloadSelector:
    namespace: tier1
    labels:
      app: tier1-gateway
  http:
  - hostname: bookinfo.tetrate.io
    name: bookinfo
    port: 80
    failoverSettings:
      failoverPriority:
      - failover.tetrate.io/domain1=active
      - failover.tetrate.io/domain2=active
    routing:
      rules:
      - route:
          clusterDestination:
            clusters:
            - name: c1
              weight: 50
            - name: c2
              weight: 50
            - name: c3
              weight: 0

Labeling Tier2 Gateway Services with Failover Labels

To implement failover across multiple clusters, label the Tier 2 (T2) gateway Service in each cluster with the failover labels shown in the table below:

| Cluster | Label Configuration | Failover Priority |
|---|---|---|
| Cluster 3 | failover.tetrate.io/domain1: active, failover.tetrate.io/domain2: active | 1st (highest priority) |
| Cluster 2 | failover.tetrate.io/domain1: active | 2nd priority |
| Cluster 1 | failover.tetrate.io/domain2: active | 3rd (lowest priority) |

Example: Tier2 Gateway Installation

The following configuration shows how to label the T2 gateway Service in Cluster 3 with failover labels:

apiVersion: install.tetrate.io/v1alpha1
kind: Gateway
metadata:
  name: bookinfo-gateway
  namespace: payment
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer
      labels:
        failover.tetrate.io/domain1: active
        failover.tetrate.io/domain2: active

Failover Selection Process

- Cluster 3 is selected first because it carries both domain1 and domain2, fully matching the client GW's priority order.

- Cluster 2 is selected second because it carries domain1, which ranks higher in the client GW's list.

- Cluster 1 is the last resort. It carries only domain2, and because matching stops at the first label that does not match (domain1), its domain2 label is never considered.

By following this structured failover priority mechanism, traffic failover is executed efficiently, ensuring optimal availability and performance across multiple clusters.
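The selection process above can be reproduced with a short Python sketch. It is a simplified model of the ordering this section describes and is not TSB or Envoy code. The data structures and function names are hypothetical. The labels and the failoverPriority list are copied from this guide.

```python
from typing import Optional

# failoverPriority of the Tier 1 Gateway in this guide, highest priority first.
FAILOVER_PRIORITY = [
    "failover.tetrate.io/domain1=active",
    "failover.tetrate.io/domain2=active",
]

# Failover labels on each cluster's Tier 2 gateway Service (see the table above).
T2_LABELS = {
    "c1": {"failover.tetrate.io/domain2": "active"},
    "c2": {"failover.tetrate.io/domain1": "active"},
    "c3": {"failover.tetrate.io/domain1": "active",
           "failover.tetrate.io/domain2": "active"},
}


def priority(labels: dict[str, str]) -> int:
    """P(0) if every entry matches, P(1) if only the first n-1 match, and so on."""
    matched = 0
    for entry in FAILOVER_PRIORITY:
        key, _, value = entry.partition("=")
        if labels.get(key) != value:
            break  # later entries count only if all earlier ones matched
        matched += 1
    return len(FAILOVER_PRIORITY) - matched


def failover_order() -> list[str]:
    """Clusters from highest to lowest failover priority (ties broken by name)."""
    return sorted(T2_LABELS, key=lambda c: (priority(T2_LABELS[c]), c))


def pick_fallback(healthy: set[str]) -> Optional[str]:
    """Highest-priority cluster that is still healthy, or None if none is."""
    return next((c for c in failover_order() if c in healthy), None)


if __name__ == "__main__":
    for cluster in failover_order():
        print(cluster, "P(%d)" % priority(T2_LABELS[cluster]))
    # Cluster 1 goes down: its traffic should move to Cluster 3.
    print("fallback:", pick_fallback({"c2", "c3"}))
```

Running it lists c3 as P(0), c2 as P(1), and c1 as P(2), which is the same order as the table. With c1 unhealthy it picks c3 as the fallback.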

TSB Setup & Configuration

In this guide, you will:

✓ Deploy the bookinfo application across multiple application clusters, with an IngressGateway deployed in each.

✓ Deploy a Gateway resource in one of the clusters as the Tier 1 Gateway to distribute traffic to the application clusters configured as Tier 2.

✓ Configure failoverSettings on the Tier 1 Gateway to apply a custom failover priority across the Tier 2 clusters.

Before you get started, make sure that you:

✓ Have TSB up and running, with GitOps enabled for the target cluster.

✓ Are familiar with TSB concepts.

✓ Have completed the TSB usage quickstart. This document assumes you are familiar with Tenants, Workspaces, and Config Groups.

Setup

In this setup, we have provisioned three clusters, c1, c2, and c3, in three different regions.

Deploy Bookinfo application

In each of c1, c2, and c3, create the payment namespace, enable sidecar injection by labeling it istio-injection=enabled, and deploy the bookinfo application:

kubectl create namespace payment

kubectl label namespace payment istio-injection=enabled --overwrite=true

kubectl apply -f https://docs.tetrate.io/examples/flagger/bookinfo.yaml -n payment
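The same three commands must run in every application cluster, so you may prefer to script them. The sketch below assumes your kubeconfig has contexts named c1, c2, and c3. Those names are an assumption, so substitute your own. The script uses kubectl's global --context flag to target each cluster. By default it only prints the commands, and it runs them only when dry_run is False.

```python
import shlex
import subprocess

# Assumption: kubeconfig contexts are named after the clusters in this guide.
CONTEXTS = ["c1", "c2", "c3"]
BOOKINFO_MANIFEST = "https://docs.tetrate.io/examples/flagger/bookinfo.yaml"


def bookinfo_commands(context: str) -> list[list[str]]:
    """The namespace, injection-label, and deploy commands, pinned to one context."""
    kubectl = ["kubectl", "--context", context]
    return [
        kubectl + ["create", "namespace", "payment"],
        kubectl + ["label", "namespace", "payment",
                   "istio-injection=enabled", "--overwrite=true"],
        kubectl + ["apply", "-f", BOOKINFO_MANIFEST, "-n", "payment"],
    ]


def deploy_everywhere(dry_run: bool = True) -> None:
    for context in CONTEXTS:
        for cmd in bookinfo_commands(context):
            if dry_run:
                print(shlex.join(cmd))
            else:
                # check=True stops at the first failure, for example if the
                # namespace already exists in that cluster.
                subprocess.run(cmd, check=True)


if __name__ == "__main__":
    deploy_everywhere(dry_run=True)
```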

Create TSB configurations

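Create the TSB configuration for bookinfo: a Tenant, a Workspace, a Traffic Group, a Gateway Group, and a Gateway. The same resources are shown in two formats, tctl and Kubernetes (GitOps). Use the one that matches how you manage TSB configuration.

Using tctl: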

apiVersion: api.tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: payment
  organization: tetrate
spec:
  displayName: Payment
---
apiVersion: api.tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: payment-ws
  organization: tetrate
  tenant: payment
spec:
  displayName: payment-ws
  namespaceSelector:
    names:
    - 'c1/payment'
    - 'c2/payment'
    - 'c3/payment'
---
apiVersion: traffic.tsb.tetrate.io/v2
kind: Group
metadata:
  name: payment-tg
  organization: tetrate
  tenant: payment
  workspace: payment-ws
spec:
  displayName: Payment Traffic Group
  namespaceSelector:
    names:
    - 'c1/payment'
    - 'c2/payment'
    - 'c3/payment'
  configMode: BRIDGED
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: bookinfo-gg
  organization: tetrate
  tenant: payment
  workspace: payment-ws
spec:
  displayName: Bookinfo Gateway Group
  namespaceSelector:
    names:
    - 'c1/payment'
    - 'c2/payment'
    - 'c3/payment'
  configMode: BRIDGED
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: bookinfo-ingress-gateway
  organization: tetrate
  tenant: payment
  workspace: payment-ws
  gatewayGroup: bookinfo-gg
spec:
  displayName: Bookinfo Ingress
  workloadSelector:
    namespace: payment
    labels:
      app: bookinfo-gateway
  http:
  - hostname: bookinfo.tetrate.io
    name: bookinfo-tetrate
    port: 80
    routing:
      rules:
      - route:
          serviceDestination:
            host: payment/productpage.payment.svc.cluster.local
            port: 9080

Apply the configuration using tctl:

tctl apply -f bookinfo.yaml

Using Kubernetes (GitOps):

apiVersion: tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: payment
  annotations:
    tsb.tetrate.io/organization: tetrate
spec:
  displayName: Payment
---
apiVersion: tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: payment-ws
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
spec:
  displayName: Payment Workspace
  namespaceSelector:
    names:
    - 'c1/payment'
    - 'c2/payment'
    - 'c3/payment'
---
apiVersion: traffic.tsb.tetrate.io/v2
kind: Group
metadata:
  name: payment-tg
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
spec:
  displayName: Payment Traffic Group
  namespaceSelector:
    names:
    - 'c1/payment'
    - 'c2/payment'
    - 'c3/payment'
  configMode: BRIDGED
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: bookinfo-gg
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
spec:
  displayName: Bookinfo Gateway Group
  namespaceSelector:
    names:
    - 'c1/payment'
    - 'c2/payment'
    - 'c3/payment'
  configMode: BRIDGED
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: bookinfo-ingress-gateway
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: payment
    tsb.tetrate.io/workspace: payment-ws
    tsb.tetrate.io/gatewayGroup: bookinfo-gg
spec:
  displayName: Bookinfo Ingress
  workloadSelector:
    namespace: payment
    labels:
      app: bookinfo-gateway
  http:
  - hostname: bookinfo.tetrate.io
    name: bookinfo-tetrate
    port: 80
    routing:
      rules:
      - route:
          serviceDestination:
            host: payment/productpage.payment.svc.cluster.local
            port: 9080

Apply configuration using kubectl

kubectl apply -f bookinfo.yaml -n payment
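The two variants carry the same hierarchy in different places. tctl reads organization, tenant, workspace, and gatewayGroup from metadata. The Kubernetes variant moves them into tsb.tetrate.io/* annotations, and the Tenant and Workspace apiVersion changes from api.tsb.tetrate.io/v2 to tsb.tetrate.io/v2. The hypothetical helper below captures that mapping for resources that have already been parsed into Python dictionaries, for example with a YAML library. The mapping is inferred only from the examples in this guide, and the helper is not an official converter.

```python
from typing import Any

# Hierarchy fields that the tctl variant keeps in metadata and the
# Kubernetes (GitOps) variant moves into tsb.tetrate.io/* annotations.
HIERARCHY_FIELDS = ("organization", "tenant", "workspace", "gatewayGroup")

# In this guide only Tenant and Workspace change apiVersion between variants.
API_VERSION_MAP = {"api.tsb.tetrate.io/v2": "tsb.tetrate.io/v2"}


def tctl_to_k8s(resource: dict[str, Any]) -> dict[str, Any]:
    """Return a Kubernetes-style copy of one tctl-style resource (input untouched)."""
    metadata = dict(resource["metadata"])
    annotations = dict(metadata.pop("annotations", {}))
    for field in HIERARCHY_FIELDS:
        if field in metadata:
            annotations[f"tsb.tetrate.io/{field}"] = metadata.pop(field)
    if annotations:
        metadata["annotations"] = annotations
    converted = dict(resource)
    converted["apiVersion"] = API_VERSION_MAP.get(
        resource["apiVersion"], resource["apiVersion"])
    converted["metadata"] = metadata
    return converted


if __name__ == "__main__":
    tenant = {
        "apiVersion": "api.tsb.tetrate.io/v2",
        "kind": "Tenant",
        "metadata": {"name": "payment", "organization": "tetrate"},
        "spec": {"displayName": "Payment"},
    }
    print(tctl_to_k8s(tenant))
```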

How FailoverPriority Labels are Configured for Tier2 Gateway

To assign failover priority labels to each Tier 2 Gateway's proxy endpoints, label the bookinfo Tier 2 Gateway Service in each cluster as shown below:

c1 cluster

apiVersion: install.tetrate.io/v1alpha1
kind: Gateway
metadata:
  name: bookinfo-gateway
  namespace: payment
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer
      labels:
        failover.tetrate.io/domain2: active

c2 cluster

apiVersion: install.tetrate.io/v1alpha1
kind: Gateway
metadata:
  name: bookinfo-gateway
  namespace: payment
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer
      labels:
        failover.tetrate.io/domain1: active

c3 cluster

apiVersion: install.tetrate.io/v1alpha1
kind: Gateway
metadata:
  name: bookinfo-gateway
  namespace: payment
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer
      labels:
        failover.tetrate.io/domain1: active
        failover.tetrate.io/domain2: active

Apply the configuration in each cluster using kubectl:

kubectl apply -f gateway-install.yaml -n payment

How FailoverPriority Settings are Configured for Tier1 Gateway

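Create the Tier 1 Gateway configuration, which distributes traffic to the Tier 2 Gateways deployed in c1, c2, and c3. As before, the resources are shown first in tctl format and then in Kubernetes (GitOps) format.

Using tctl: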

apiVersion: api.tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: tier1
  organization: tetrate
spec:
  displayName: Tier1
---
apiVersion: api.tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: tier1-ws
  organization: tetrate
  tenant: tier1
spec:
  displayName: Tier1
  namespaceSelector:
    names:
    - 'c3/tier1'
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: tier1-gg
  organization: tetrate
  tenant: tier1
  workspace: tier1-ws
spec:
  displayName: Tier1 Gateway Group
  namespaceSelector:
    names:
    - 'c3/tier1'
  configMode: BRIDGED
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: t1-gateway
  organization: tetrate
  tenant: tier1
  workspace: tier1-ws
  gatewayGroup: tier1-gg
spec:
  displayName: T1 Gateway
  workloadSelector:
    namespace: tier1
    labels:
      app: tier1-gateway
  http:
  - hostname: bookinfo.tetrate.io
    name: bookinfo
    port: 80
    failoverSettings:
      failoverPriority:
      - failover.tetrate.io/domain1=active
      - failover.tetrate.io/domain2=active
    routing:
      rules:
      - route:
          clusterDestination:
            clusters:
            - name: c1
              weight: 50
            - name: c2
              weight: 50
            - name: c3
              weight: 0

Apply the configuration using tctl:

tctl apply -f bookinfo.yaml

Using Kubernetes (GitOps):

apiVersion: tsb.tetrate.io/v2
kind: Tenant
metadata:
  name: tier1
  annotations:
    tsb.tetrate.io/organization: tetrate
spec:
  displayName: Tier1
---
apiVersion: tsb.tetrate.io/v2
kind: Workspace
metadata:
  name: tier1-ws
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
spec:
  displayName: Tier1
  namespaceSelector:
    names:
    - 'c3/tier1'
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  name: tier1-gg
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1-ws
spec:
  displayName: Tier1 Gateway Group
  namespaceSelector:
    names:
    - 'c3/tier1'
  configMode: BRIDGED
---
apiVersion: install.tetrate.io/v1alpha1
kind: Tier1Gateway
metadata:
  name: tier1-gateway
  namespace: tier1
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer
---
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  name: t1-gateway
  annotations:
    tsb.tetrate.io/organization: tetrate
    tsb.tetrate.io/tenant: tier1
    tsb.tetrate.io/workspace: tier1-ws
    tsb.tetrate.io/gatewayGroup: tier1-gg
spec:
  displayName: T1 Gateway
  workloadSelector:
    namespace: tier1
    labels:
      app: tier1-gateway
  http:
  - hostname: bookinfo.tetrate.io
    name: bookinfo
    port: 80
    failoverSettings:
      failoverPriority:
      - failover.tetrate.io/domain1=active
      - failover.tetrate.io/domain2=active
    routing:
      rules:
      - route:
          clusterDestination:
            clusters:
            - name: c1
              weight: 50
            - name: c2
              weight: 50
            - name: c3
              weight: 0

Apply configuration using kubectl

kubectl apply -f gateway-config.yaml -n tier1

Apply the Tier 1 Gateway install resource in c3 using kubectl:

apiVersion: install.tetrate.io/v1alpha1
kind: Tier1Gateway
metadata:
  name: tier1-gateway
  namespace: tier1
spec:
  type: INGRESS
  kubeSpec:
    service:
      type: LoadBalancer

Apply the configuration:

kubectl apply -f tier1-install.yaml -n tier1

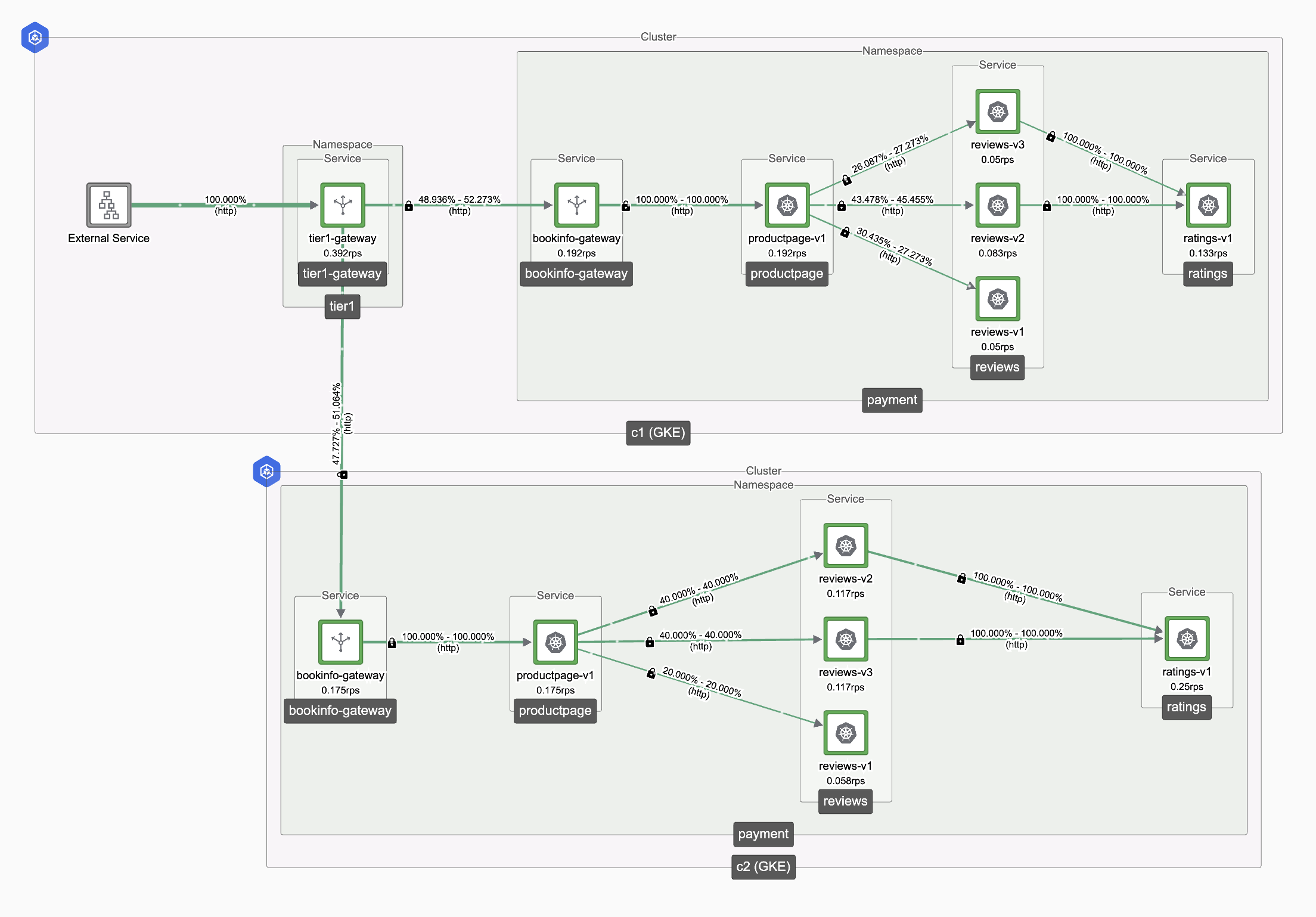

Default Behaviour

When the Tier-1 Gateway receives a request, it distributes traffic evenly between clusters c1 and c2 in a 50:50 ratio. Since the weight for c3 cluster is set to zero, the Tier-1 Gateway excludes it from the eligible candidate list under the default configuration.
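The default split can be checked with a short simulation. The sketch below is an illustration and does not reflect how the gateway actually selects clusters. It uses the clusterDestination weights from the Tier 1 Gateway configuration and Python's random.choices, which never picks an option whose weight is zero. The function names are hypothetical.

```python
import random
from collections import Counter

# clusterDestination weights of the Tier 1 Gateway in this guide.
WEIGHTS = {"c1": 50, "c2": 50, "c3": 0}


def expected_shares(weights: dict[str, int]) -> dict[str, float]:
    """Fraction of requests each cluster should receive, from its weight."""
    total = sum(weights.values())
    return {cluster: weight / total for cluster, weight in weights.items()}


def simulate(weights: dict[str, int], requests: int, seed: int = 0) -> Counter:
    """Route `requests` simulated requests by weighted random choice."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    clusters = list(weights)
    picks = rng.choices(clusters, weights=[weights[c] for c in clusters], k=requests)
    return Counter(picks)


if __name__ == "__main__":
    print(expected_shares(WEIGHTS))
    print(simulate(WEIGHTS, 10_000))
```

The expected shares are 0.5 for c1, 0.5 for c2, and 0.0 for c3. The simulated counts for c1 and c2 come out close to equal, and c3 never appears.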

Topology

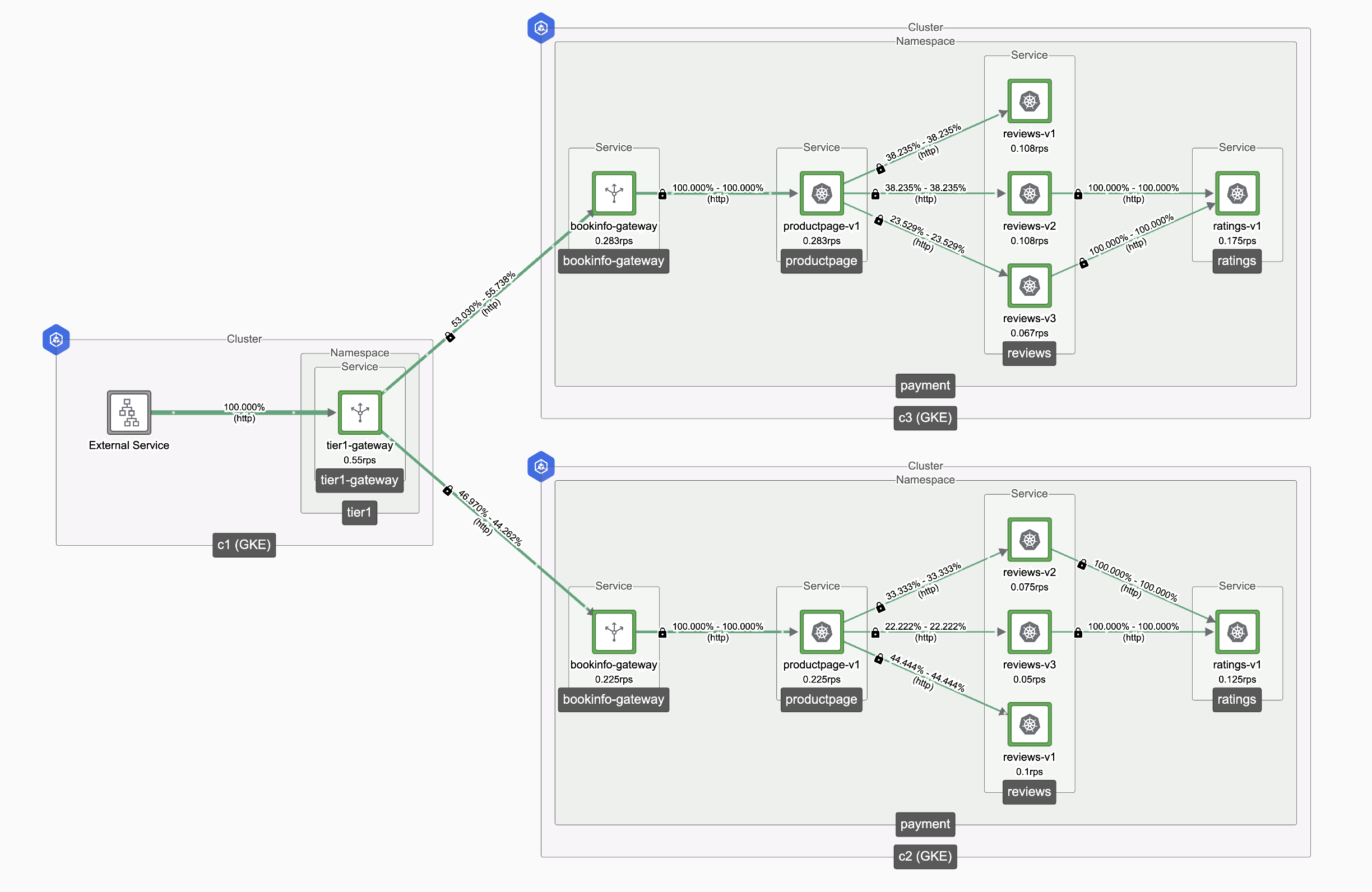

Trigger Failover

To simulate a failover, scale the bookinfo-gateway deployment in Cluster 1 down to zero replicas and observe the traffic behavior:

kubectl scale --replicas=0 deployment bookinfo-gateway -n payment

Expected Behaviour

- Traffic that was originally routed through Cluster 1 should now fail over to Cluster 3.

- Cluster 2 should not experience any unexpected traffic increase.

- Cluster 3 will act as the fallback cluster for Cluster 1, ensuring seamless failover.
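The expected behaviour can be expressed as a toy model. Cluster 1's 50% share moves to Cluster 3, the highest-priority healthy cluster, while Cluster 2 keeps its 50%. The sketch below is hypothetical and only encodes the outcome described above. Envoy's actual priority load balancing can shift traffic gradually, based on the fraction of healthy endpoints in each priority level, rather than all at once.

```python
# Tier 1 weights and the failover order derived in this guide (highest first).
WEIGHTS = {"c1": 50, "c2": 50, "c3": 0}
FAILOVER_ORDER = ["c3", "c2", "c1"]


def effective_weights(weights: dict[str, int], healthy: set[str]) -> dict[str, int]:
    """Weight each cluster actually serves after unhealthy clusters fail over.

    Toy model: an unhealthy cluster's whole weight moves to the first healthy
    cluster in FAILOVER_ORDER. If no cluster is healthy, that weight is dropped.
    """
    served = {cluster: 0 for cluster in weights}
    fallback = next((c for c in FAILOVER_ORDER if c in healthy), None)
    for cluster, weight in weights.items():
        target = cluster if cluster in healthy else fallback
        if target is not None:
            served[target] += weight
    return served


if __name__ == "__main__":
    print("all healthy:", effective_weights(WEIGHTS, {"c1", "c2", "c3"}))
    print("c1 down:    ", effective_weights(WEIGHTS, {"c2", "c3"}))
```

Calling it with all three clusters healthy models the restored state: c1 and c2 again split traffic 50:50, and c3 receives nothing.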

Topology

Restoring Traffic Flow

To revert to the original state, scale up the bookinfo-gateway deployment in Cluster 1 and observe the traffic behavior:

kubectl scale --replicas=1 deployment bookinfo-gateway -n payment

Expected Behaviour

- Traffic should now be routed back to the original setup.

- Requests should again be split 50:50 between Cluster 1 and Cluster 2.

- Cluster 3 should no longer receive any traffic, as it was only used as a fallback during the failover scenario.