Cross-Cluster Failover for Internal Services

With TSE, you can automatically fail over services from one cluster to another.

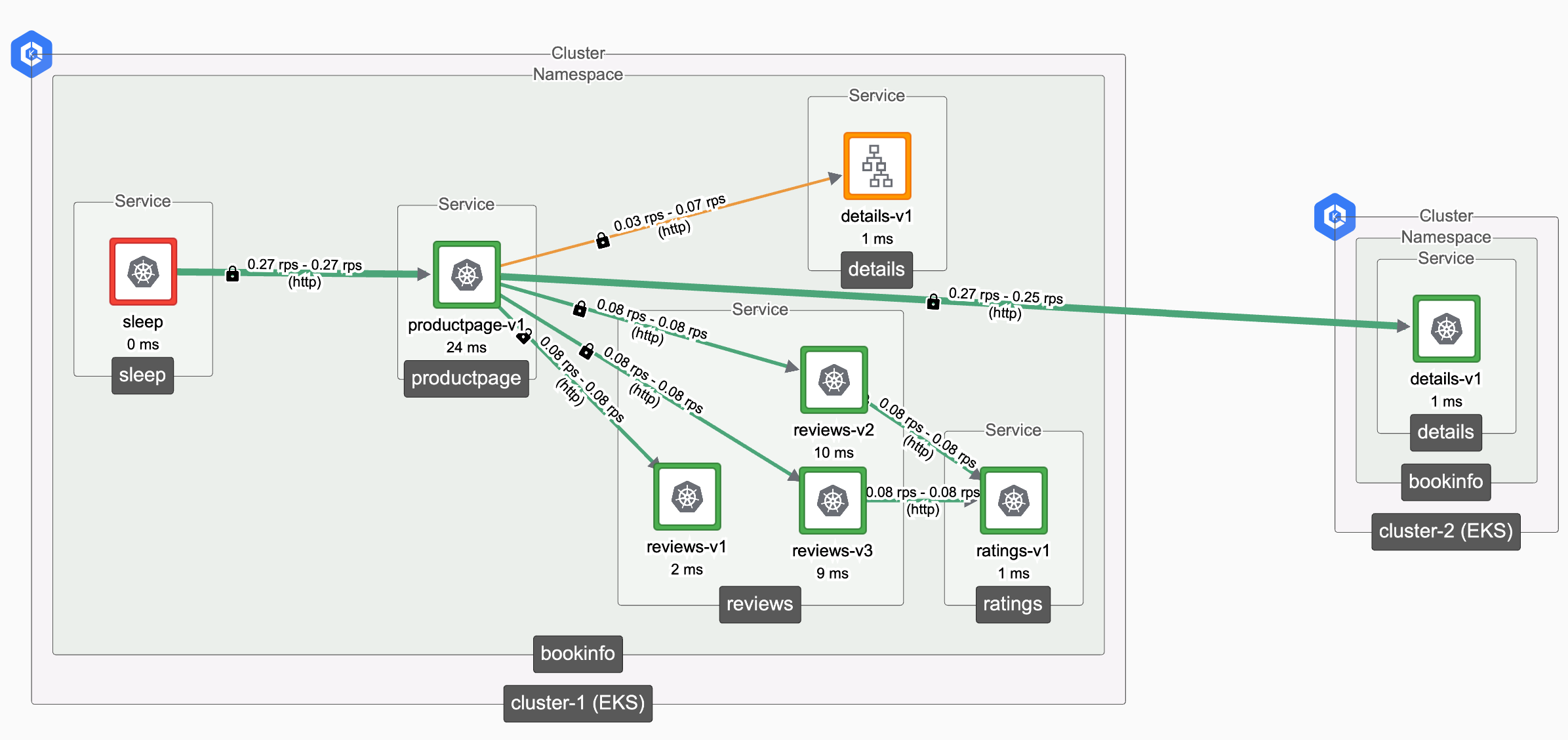

In the previous exercise, we saw how to perform cross-cluster communications, accessing a service in the primary cluster-1 from a client in the secondary cluster-2. In this exercise, we'll extend that implementation to perform failover for internal services.

Using an East-West Gateway to fail over from one cluster to another

- We'll begin with the Bookinfo app installed in cluster-1. We'll provoke a failure in one of the app's internal services (details).

- We will then deploy the Bookinfo app and an East-West Gateway in the secondary cluster, cluster-2, and see how TSE directs traffic for the failed service to the instance in cluster-2.

This exercise works most smoothly if cluster-2 is located in a different region. If that is not possible, the section at the end of this exercise explains how to tune the configuration.

Prerequisites

- You have completed the Cross-Cluster Communications exercise and cleaned up the configuration:
  - The primary cluster, cluster-1, contains a bookinfo namespace with the Bookinfo app.
  - The secondary cluster, cluster-2, is empty.

Test and Configure Failover Between Clusters

- Create the failure scenario: test the Bookinfo app in cluster-1, and provoke a failure.
- Deploy a backup instance of Bookinfo in cluster-2: deploy the Bookinfo app in cluster-2, and an East-West Gateway to expose it to other clusters.
- Re-test the failure scenario: repeat the test and observe traffic failover.

Create the failure scenario

We are going to provoke a failure in the details service in the Bookinfo app and observe the results.

First, in cluster-1, deploy the sleep client:

```shell
kubectl apply -n bookinfo -f https://raw.githubusercontent.com/istio/istio/master/samples/sleep/sleep.yaml
```

Then, observe correct operation of the Bookinfo application:

```shell
kubectl exec deploy/sleep -n bookinfo -- curl -s productpage.bookinfo:9080/productpage | \
  grep -i details -A 8
```

You'll see output resembling the following:

```html
<h4 class="text-center text-primary">Book Details</h4>
<dl>
<dt>Type:</dt>paperback
<dt>Pages:</dt>200
<dt>Publisher:</dt>PublisherA
<dt>Language:</dt>English
<dt>ISBN-10:</dt>1234567890
<dt>ISBN-13:</dt>123-1234567890
</dl>
```

Now, provoke a failure by scaling the details service down to 0 instances:

```shell
kubectl scale deployment details-v1 -n bookinfo --replicas=0
```

Repeat the test above, and you should see an error generated by the productpage service:

```html
<h4 class="text-center text-primary">Error fetching product details!</h4>
<p>Sorry, product details are currently unavailable for this book.</p>
...
```
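If you repeat this check often, a small helper can reduce the HTML to a one-word verdict. The sketch below is a hypothetical shell function (`details_status` is not part of TSE or Istio); it assumes the page contains one of the two headings shown in the sample outputs above.

```shell
# Hypothetical helper (not part of TSE or Istio): classify the productpage HTML
# read from stdin by looking for the two headings shown in this exercise.
details_status() {
  local page
  page=$(cat)
  case $page in
    *'Error fetching product details'*) echo "FAILED" ;;  # details service unreachable
    *'Book Details'*) echo "OK" ;;                        # details rendered normally
    *) echo "UNKNOWN" ;;                                  # unexpected or empty response
  esac
}
```

For example: `kubectl exec deploy/sleep -n bookinfo -- curl -s productpage.bookinfo:9080/productpage | details_status`. The error heading is matched first, so a page is only reported as OK when the details section actually rendered.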

Scale the details service back to 1 instance:

```shell
kubectl scale deployment details-v1 -n bookinfo --replicas=1
```

We can now provoke a failure and restore correct operation of the Bookinfo app.

Deploy a backup instance of Bookinfo in Cluster-2

We are going to:

- Deploy an East-West Gateway in secondary cluster-2

- Deploy Bookinfo in secondary cluster-2, exposing it to cluster-1

Deploy an East-West Gateway in cluster-2

First, create the bookinfo namespace in cluster-2 (if necessary). Run the following commands against cluster-2:

```shell
kubectl create namespace bookinfo
kubectl label namespace bookinfo istio-injection=enabled
```

Deploy an East-West Gateway in cluster-2. Run the following commands against cluster-2:

```shell
cat <<EOF > eastwest-gateway.yaml
apiVersion: install.tetrate.io/v1alpha1
kind: IngressGateway
metadata:
  name: eastwest-gateway
  namespace: bookinfo
spec:
  eastWestOnly: true
EOF

kubectl apply -f eastwest-gateway.yaml
```

Deploy Bookinfo in cluster-2

Deploy Bookinfo in cluster-2. Run the following command against cluster-2:

```shell
kubectl apply -n bookinfo -f https://raw.githubusercontent.com/istio/istio/master/samples/bookinfo/platform/kube/bookinfo.yaml
```

Configure the Bookinfo workspace by adding a setting that exposes the Workspace's services on the East-West Gateway:

```shell
cat <<EOF > bookinfo-ws-eastwest.yaml
apiVersion: api.tsb.tetrate.io/v2
kind: WorkspaceSetting
metadata:
  organization: tse
  tenant: tse
  workspace: bookinfo-ws
  name: bookinfo-ws-setting
spec:
  defaultEastWestGatewaySettings:
    - workloadSelector:
        namespace: bookinfo
        labels:
          app: eastwest-gateway
EOF

tctl apply -f bookinfo-ws-eastwest.yaml
```

In the failover use case, services are present in several clusters. TSE creates WorkloadEntries for each shared service that is deployed in a cluster with an East-West Gateway. You can inspect the WorkloadEntries in cluster-1:

```shell
kubectl get we -n bookinfo
```

When you deploy an IngressGateway resource, such as the eastwest-gateway, the AWS platform provisions a load balancer. This can take several minutes, and TSE cannot create the WorkloadEntries until the load balancer is ready.

If `kubectl get we -n bookinfo` returns no entries, wait a few minutes and try again.
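Rather than re-running the command by hand, you can poll it. The sketch below is a hypothetical shell helper, `wait_for_output`, that re-runs a command until it prints something on stdout or a timeout expires; the timeout and interval values in the usage line are arbitrary assumptions.

```shell
# Hypothetical helper: re-run a command until it prints something on stdout,
# giving up after a timeout. Anything the command writes to stderr passes through.
wait_for_output() {
  local timeout_s=$1 interval_s=$2 elapsed=0 out
  shift 2
  while [ "$elapsed" -lt "$timeout_s" ]; do
    # Succeed only if the command exits 0 AND produced non-empty stdout.
    if out=$("$@") && [ -n "$out" ]; then
      printf '%s\n' "$out"
      return 0
    fi
    sleep "$interval_s"
    elapsed=$((elapsed + interval_s))
  done
  echo "timed out after ${timeout_s}s waiting for: $*" >&2
  return 1
}
```

For example, `wait_for_output 600 15 kubectl get we -n bookinfo --no-headers` polls every 15 seconds for up to 10 minutes. `--no-headers` keeps stdout empty until at least one WorkloadEntry exists.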

In each cluster, the WorkloadEntries for services present in both clusters will resemble the following:

```text
NAME                                               AGE   ADDRESS
k-details-fc85310bf1f66c844cc9d9ec33b5046f         43s   18.133.97.238
k-details-fc85310bf1f66c844cc9d9ec33b5046f-2       43s   3.9.28.84
k-details-fc85310bf1f66c844cc9d9ec33b5046f-3       43s   35.176.13.117
k-productpage-7a9b8435b640d16465e85b2381f2a2ea     40s   18.133.97.238
k-productpage-7a9b8435b640d16465e85b2381f2a2ea-2   40s   3.9.28.84
k-productpage-7a9b8435b640d16465e85b2381f2a2ea-3   40s   35.176.13.117
k-ratings-434a643ce01332ab6d403244f24d87a9         43s   18.133.97.238
k-ratings-434a643ce01332ab6d403244f24d87a9-2       43s   3.9.28.84
k-ratings-434a643ce01332ab6d403244f24d87a9-3       43s   35.176.13.117
k-reviews-04c4ed3ee85bf78e1bbe83d489fb45ec         43s   18.133.97.238
k-reviews-04c4ed3ee85bf78e1bbe83d489fb45ec-2       43s   3.9.28.84
k-reviews-04c4ed3ee85bf78e1bbe83d489fb45ec-3       43s   35.176.13.117
```
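In this sample, each shared service has one WorkloadEntry per East-West Gateway address. To check this at a glance, you could summarize the listing per service. The sketch below is a hypothetical helper, `we_summary`. It assumes the `k-<service>-<hash>[-<n>]` naming seen in the sample above, which is an observation rather than a documented TSE contract.

```shell
# Hypothetical helper: count WorkloadEntries per service in `kubectl get we`
# output read from stdin. Assumes names like k-<service>-<hash>[-<n>], as
# observed in the sample output; the header line is skipped because it lacks k-.
we_summary() {
  awk '$1 ~ /^k-/ {
         name = $1
         sub(/^k-/, "", name)                      # drop the k- prefix
         sub(/-[0-9a-f]+(-[0-9]+)?$/, "", name)    # drop the hash and optional -<n>
         count[name]++
       }
       END { for (s in count) print s, count[s] }' | sort
}
```

Usage: `kubectl get we -n bookinfo | we_summary`. With the sample above, every service would be listed with a count of 3, one per gateway address.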

Re-test the Failure Scenario

Run the following commands on cluster-1:

- Verify that the Bookinfo app functions correctly:

  ```shell
  kubectl exec deploy/sleep -n bookinfo -- curl -s productpage.bookinfo:9080/productpage | grep -i details -A 8
  ```

- Provoke the failure in the local instance of the details service:

  ```shell
  kubectl scale deployment details-v1 -n bookinfo --replicas=0
  ```

- Re-test the Bookinfo app and observe that it continues to function correctly, because requests for the details service now fail over to the instance in cluster-2:

  ```shell
  kubectl exec deploy/sleep -n bookinfo -- curl -s productpage.bookinfo:9080/productpage | grep -i details -A 8
  ```
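A single successful request does not show that failover is consistent. The sketch below is a hypothetical helper, `count_successes`, that sends several requests through productpage from the sleep pod and counts how many rendered the book details. It assumes your kubectl context points at cluster-1 and that a rendered page contains the "Book Details" heading shown earlier.

```shell
# Hypothetical helper: send N requests through productpage from the sleep pod
# (run against cluster-1) and report how many rendered the book details.
count_successes() {
  local n=$1 ok=0 i=0 page
  while [ "$i" -lt "$n" ]; do
    page=$(kubectl exec deploy/sleep -n bookinfo -- \
      curl -s productpage.bookinfo:9080/productpage)
    case $page in
      *'Book Details'*) ok=$((ok + 1)) ;;   # details section rendered
    esac
    i=$((i + 1))
  done
  echo "$ok/$n requests returned book details"
}
```

With details-v1 scaled to 0 in cluster-1, `count_successes 10` should report 10/10 if every request for the details service fails over to cluster-2.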

What if cluster-1 and cluster-2 are located in the same region?

When routing through an East-West Gateway, instances of a service in the same region are treated as equivalent: a client request may be routed to any cluster in the local region that offers the target service.

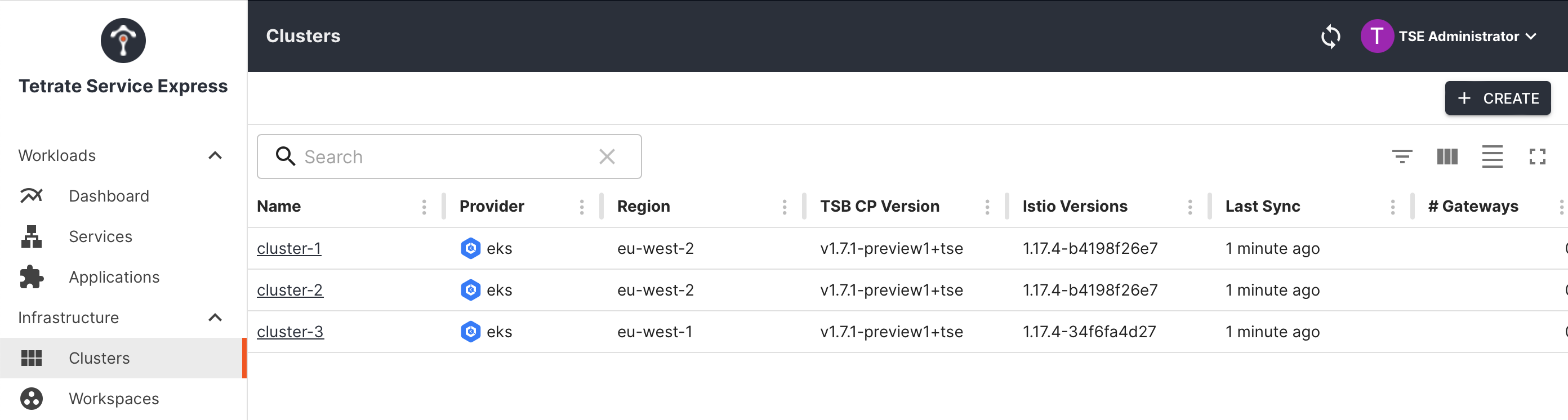

You can check the detected regions in the Clusters pane in the TSE user interface:

TSE managing three clusters, two in eu-west-2 and one in eu-west-1

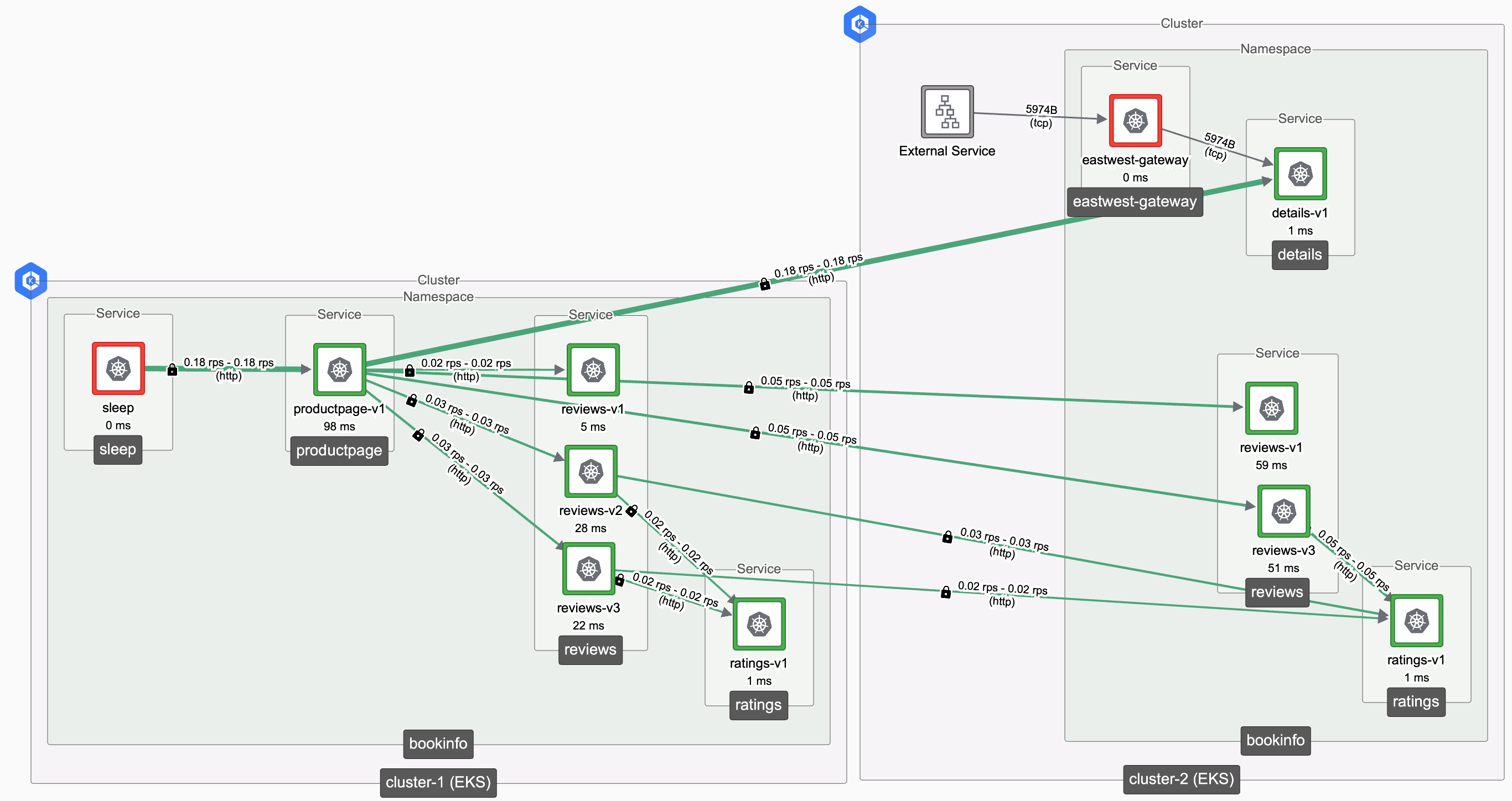

If the secondary cluster (the cluster with the East-West Gateway) is located in the same region as the primary cluster, requests from services in the primary cluster may be routed to the secondary cluster even when the local service is healthy. The result is a topology chart similar to the following:

Requests from cluster-1 routed to services in cluster-2 via its East-West Gateway

If this behavior is undesirable, you can label the East-West Gateway in cluster-2 with a custom istio-locality value. The Istio routing layer then treats the gateway as being in a separate, non-local location, so requests are routed to cluster-2 only when the service is not available in a local cluster.

Apply the label to the East-West Gateway using an overlay, as follows:

```yaml
apiVersion: install.tetrate.io/v1alpha1
kind: IngressGateway
metadata:
  name: eastwest-gateway
  namespace: bookinfo
spec:
  eastWestOnly: true
  kubeSpec:
    overlays:
      - apiVersion: apps/v1
        kind: Deployment
        name: eastwest-gateway
        patches:
          - path: spec.template.metadata.labels.istio-locality
            value: eu-remote-1
```

First, delete the existing East-West Gateway in cluster-2:

```shell
kubectl delete ingressgateway -n bookinfo eastwest-gateway
```

Then re-create it using the extended YAML specification above.
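After re-creating the gateway, you may want to confirm that its pods actually carry the new label. The sketch below is a hypothetical check, `check_gateway_locality`, to run against cluster-2. It assumes the gateway pods are labeled `app=eastwest-gateway`, matching the WorkspaceSetting selector used earlier. It relies on kubectl's `-L` flag, which prints a label's value as an extra column.

```shell
# Hypothetical check: confirm every East-West Gateway pod in cluster-2 carries
# the expected istio-locality label. Assumes the pods are labeled
# app=eastwest-gateway, matching the WorkspaceSetting selector.
check_gateway_locality() {
  local expected=$1 pods
  pods=$(kubectl get pods -n bookinfo -l app=eastwest-gateway \
    -L istio-locality --no-headers)
  if [ -z "$pods" ]; then
    echo "no eastwest-gateway pods found" >&2
    return 1
  fi
  # -L prints the label as the last column. For a pod without the label that
  # cell is blank, so the last whitespace-separated field (AGE) will not match.
  if printf '%s\n' "$pods" |
    awk -v want="$expected" '$NF != want { bad = 1 } END { exit (bad ? 1 : 0) }'; then
    echo "all eastwest-gateway pods have istio-locality=$expected"
  else
    echo "some eastwest-gateway pods lack istio-locality=$expected" >&2
    return 1
  fi
}
```

Usage: `check_gateway_locality eu-remote-1`.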

What have we achieved?

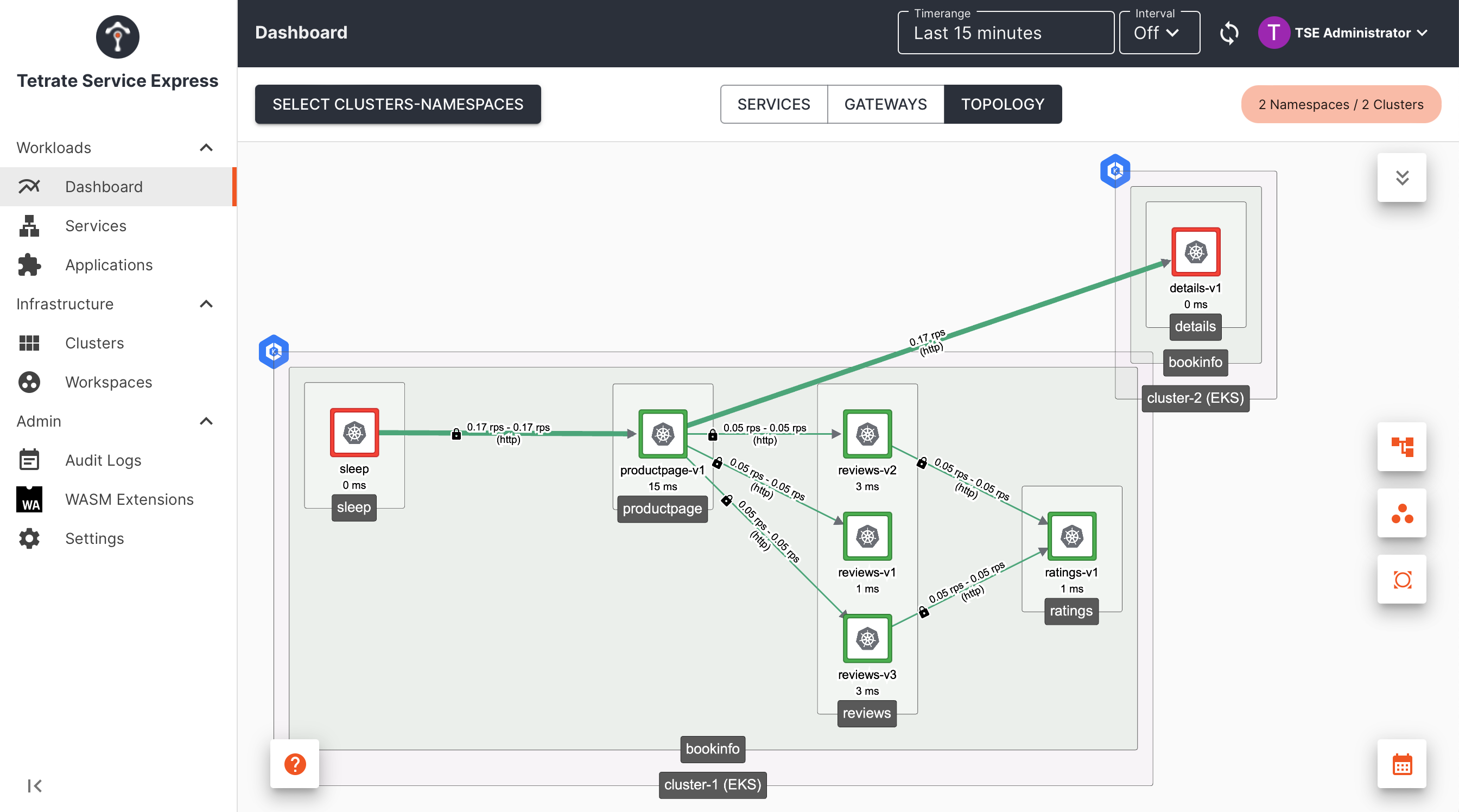

TSE Topology: failover for the details service

We have achieved high availability for internal services easily, without making any application modifications or exposing any services through an Ingress Gateway:

- We have deployed Bookinfo in two clusters

- We have failed over an individual service from primary cluster-1 to secondary cluster-2

The Bookinfo app is now resilient to the failure of any of its constituent services in cluster-1.

Network Reachability (optional)

In the previous exercise, you may have configured Network Reachability to declare that client cluster-2 can reach application cluster-1. If so, you also need to create a rule that allows the reverse connectivity:

In the TSE UI:

- Go to Settings and Network Reachability

- Add a Reachability setting that allows cluster-1-network to reach cluster-2-network

Once this is set, TSE creates the necessary WorkloadEntry resources.

Cleaning Up

You can remove the East-West Gateway and related services as follows:

On cluster-1, remove the sleep app and, if necessary, scale the details service back up:

```shell
kubectl delete -n bookinfo -f https://raw.githubusercontent.com/istio/istio/master/samples/sleep/sleep.yaml
kubectl scale deployment details-v1 -n bookinfo --replicas=1
```

On cluster-2, remove the eastwest-gateway, bookinfo app and bookinfo namespace:

```shell
kubectl delete ingressgateway -n bookinfo eastwest-gateway
kubectl delete -n bookinfo -f https://raw.githubusercontent.com/istio/istio/master/samples/bookinfo/platform/kube/bookinfo.yaml
kubectl delete namespace bookinfo
```