High-Availability with Route 53

TSE manages Route 53 Weighted Routing records to load-balance across locations

When services are exposed via an Ingress Gateway, Tetrate Service Express (TSE) can automatically trigger the creation (or update) of DNS entries. This process is described in the Publish a Service exercise.

By default, TSE creates a Route 53 Simple Policy. This policy supports a single endpoint only.

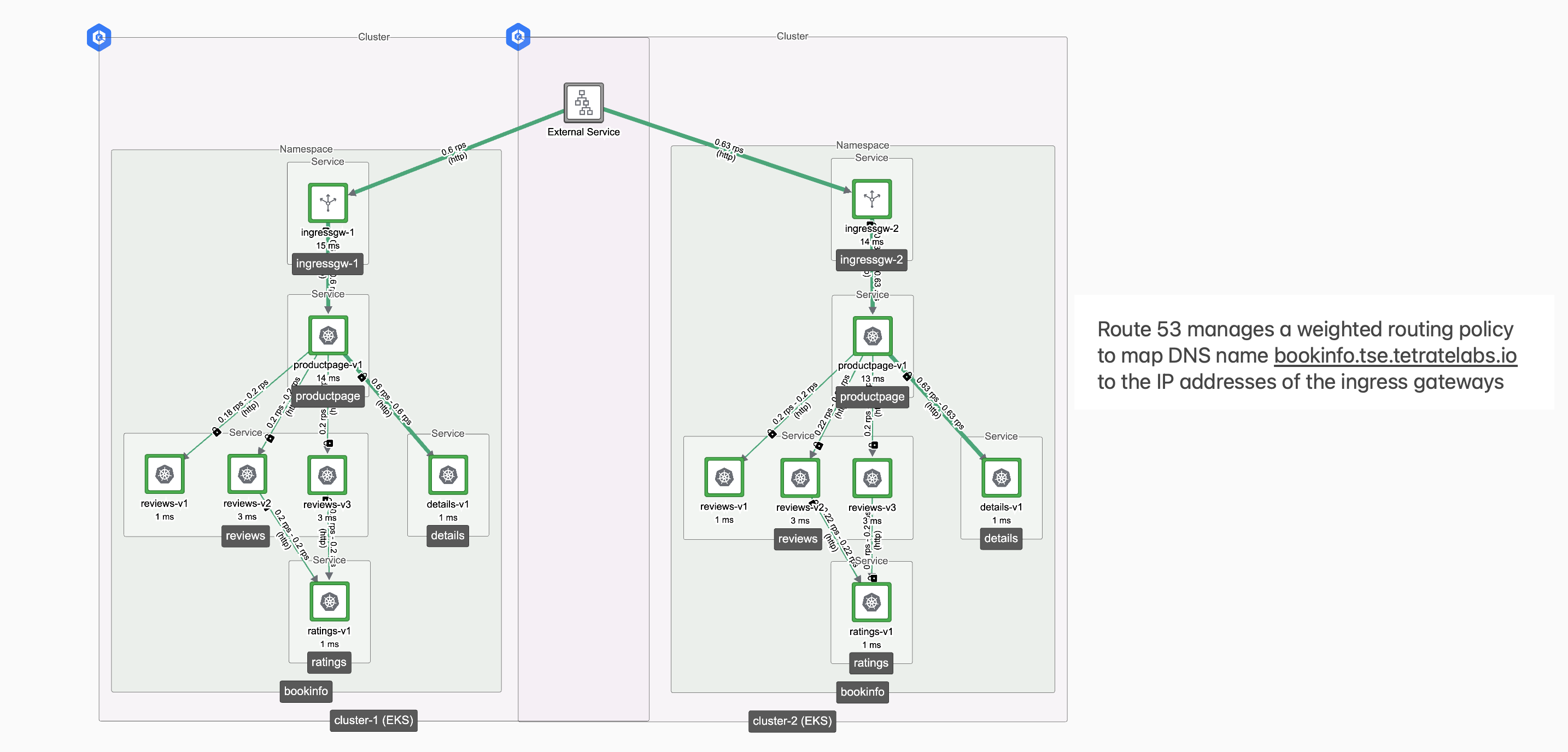

If you publish the same service from two or more locations, then TSE can manage a Route 53 Weighted Routing Policy to load balance traffic across these locations with a shared DNS name, and optionally perform health checks:

Two-cluster topology for bookinfo.tse.tetratelabs.io

- We'll begin with the bookinfo app installed in cluster-1. We'll verify correct operation of TSE's AWS Controller.

- We will then deploy the bookinfo app in a secondary cluster cluster-2 and expose it with an Ingress Gateway. We'll observe how the AWS Controller creates a weighted load balancing configuration.

Prerequisites

- You have a test DNS name configured in your Route 53 account

- You are familiar with the Publish a Service exercise, and have configured TSE to manage the DNS using the AWS Controller

- You have two clusters onboarded into TSE, cluster-1 and cluster-2

Configure HA with TSE and Route 53

Enable the AWS Controller integration

If necessary, enable the AWS Controller integration with the desired hosted zone(s)

Deploy Bookinfo in a single cluster

Deploy the Bookinfo app in cluster-1

Expose Bookinfo from the cluster

Expose the Bookinfo app from cluster-1

Deploy and expose Bookinfo in a second cluster

Deploy the Bookinfo app in cluster-2 and observe the DNS updates that load-balance between the clusters

Enable the AWS Controller integration

This exercise uses the DNS subdomain tse.tetratelabs.io. The AWS Controller service is enabled in two TSE Workload clusters, cluster-1 and cluster-2.

Follow the instructions in the Route 53 Integration guide, or refer to the Publish a Service exercise. Perform these steps against both workload clusters, cluster-1 and cluster-2.

Deploy Bookinfo in a single cluster

Deploy bookinfo in cluster-1

If you've not already deployed bookinfo and created a Workspace, do so first:

kubectl create namespace bookinfo

kubectl label namespace bookinfo istio-injection=enabled

kubectl apply -n bookinfo -f https://raw.githubusercontent.com/istio/istio/master/samples/bookinfo/platform/kube/bookinfo.yaml

cat <<EOF > bookinfo-ws.yaml
apiVersion: api.tsb.tetrate.io/v2
kind: Workspace
metadata:
  organization: tse
  tenant: tse
  name: bookinfo-ws
spec:
  namespaceSelector:
    names:
      - "*/bookinfo"
EOF

tctl apply -f bookinfo-ws.yaml

Note that the workspace is named bookinfo-ws and the namespace selector spans all clusters ("*/bookinfo").
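To make the selector semantics concrete, the following shell sketch models how a cluster/namespace selector such as "*/bookinfo" could be matched against a specific cluster and namespace. It is a simplified illustration and not TSE's implementation: the function name selector_matches is hypothetical, and the sketch assumes each half of the selector is either a literal name or the "*" wildcard.

```shell
# selector_matches SELECTOR TARGET
# Both arguments have the form "cluster/namespace". Each half of SELECTOR is
# either a literal name or "*". Simplified model, for illustration only.
selector_matches() {
  local sel_cluster="${1%%/*}" sel_ns="${1#*/}"
  local tgt_cluster="${2%%/*}" tgt_ns="${2#*/}"
  # The cluster half must be the wildcard or an exact match.
  if [ "$sel_cluster" != "*" ] && [ "$sel_cluster" != "$tgt_cluster" ]; then
    return 1
  fi
  # The namespace half must be the wildcard or an exact match.
  if [ "$sel_ns" != "*" ] && [ "$sel_ns" != "$tgt_ns" ]; then
    return 1
  fi
  return 0
}
```

Under this model, "*/bookinfo" matches the bookinfo namespace in both cluster-1 and cluster-2, whereas a selector such as "cluster-1/bookinfo" matches it in cluster-1 only.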

Verify the service is operating in the cluster:

kubectl exec deploy/ratings-v1 -n bookinfo -- curl -s productpage:9080/productpage | grep -o "<title>.*</title>"

Expose Bookinfo from the cluster

Deploy an Ingress Gateway

Deploy an Ingress Gateway instance in cluster-1, in the bookinfo namespace:

cat <<EOF > ingress-gw-cluster-1.yaml
apiVersion: install.tetrate.io/v1alpha1
kind: IngressGateway
metadata:
  name: ingress-gw-cluster-1
  namespace: bookinfo
spec:
  kubeSpec:
    service:
      type: LoadBalancer
EOF

kubectl apply -f ingress-gw-cluster-1.yaml

Create a Gateway Group

Create a TSE Gateway Group for gateway configuration in cluster-1 used by the Workspace:

cat <<EOF > bookinfo-gw-group-1.yaml
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  displayName: bookinfo-gw-group-1
  name: bookinfo-gwgroup-1
  organization: tse
  tenant: tse
  workspace: bookinfo-ws
spec:
  namespaceSelector:
    names:
      - "cluster-1/bookinfo"
EOF

tctl apply -f bookinfo-gw-group-1.yaml

Note that the Gateway Group is specific to cluster-1. This allows us to precisely define the network configuration for cluster-1 and later for cluster-2.

Expose the Service

Instruct TSE to expose the bookinfo/productpage service using the DNS name bookinfo.tse.tetratelabs.io:

cat <<EOF > bookinfo-ingress-cluster-1.yaml
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  annotations:
    route53.v1beta1.tetrate.io/aws-weight: "50"
    route53.v1beta1.tetrate.io/set-identifier: cluster-1
  organization: tse
  tenant: tse
  group: bookinfo-gwgroup-1
  workspace: bookinfo-ws
  name: bookinfo-ingress-1
spec:
  workloadSelector:
    namespace: bookinfo
    labels:
      app: ingress-gw-cluster-1
  http:
    - name: bookinfo
      port: 80
      hostname: bookinfo.tse.tetratelabs.io
      routing:
        rules:
          - route:
              serviceDestination:
                host: bookinfo/productpage.bookinfo.svc.cluster.local
                port: 9080
EOF

tctl apply -f bookinfo-ingress-cluster-1.yaml

Note the additional annotations needed to create the Route 53 Weighted Routing Policy.

You can follow the AWS Controller logs to observe the changes:

kubectl logs -f -n istio-system -l app=aws-controller

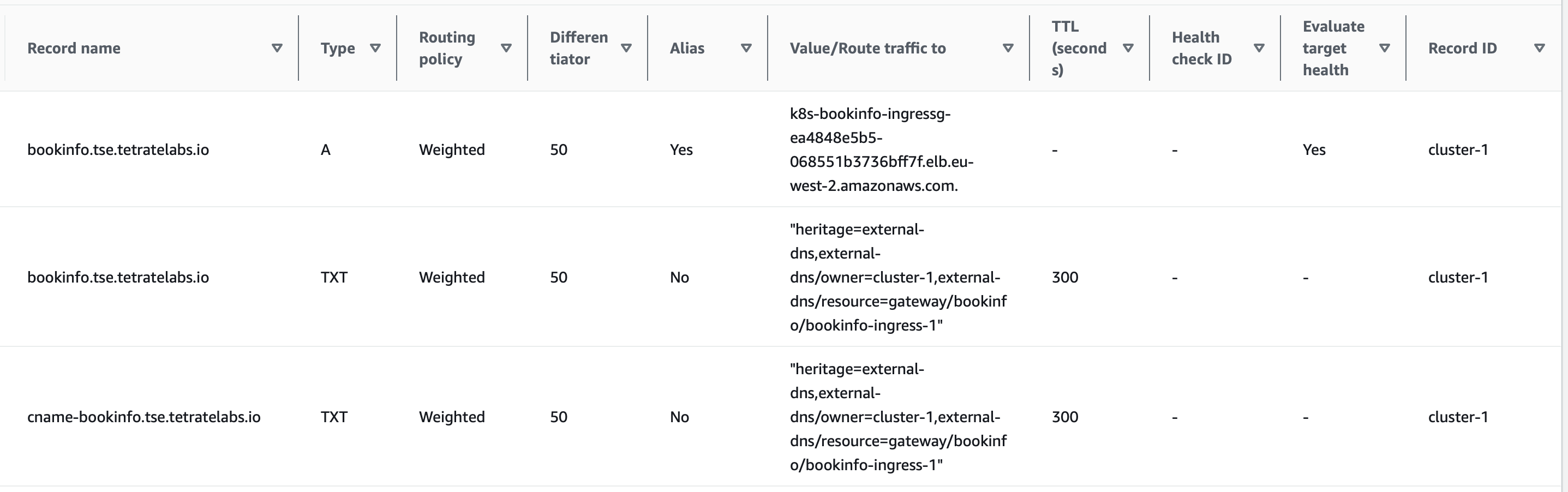

After a couple of minutes, you should see the DNS entries populated in your AWS Route 53 Console:

Route 53 Entries for bookinfo.tse.tetratelabs.io

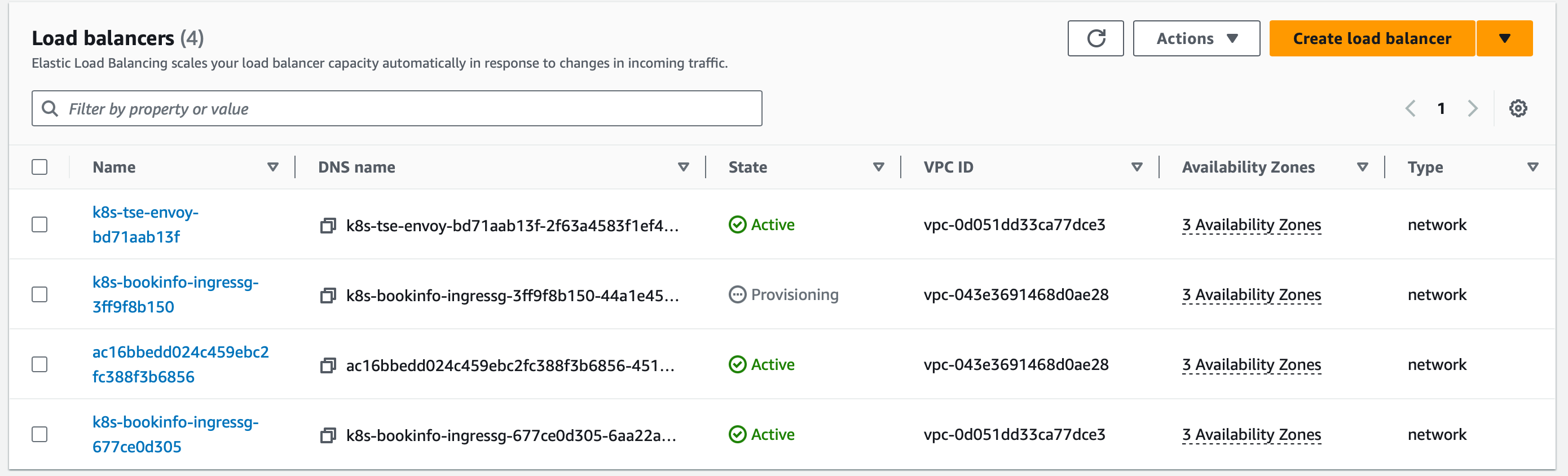

You may also need to wait for the newly-created Load Balancer to finish provisioning, which can take several minutes:

AWS Console: Load Balancer state

Once ready, you should be able to access the productpage service using the DNS name:

curl http://bookinfo.tse.tetratelabs.io/
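Because DNS propagation and Load Balancer provisioning can each take several minutes, it can be convenient to poll rather than retry by hand. The following is a minimal sketch of a generic retry helper; the name wait_for and its arguments are hypothetical and not part of TSE or any of the tools used in this exercise.

```shell
# wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds, at most ATTEMPTS times, sleeping
# DELAY_SECONDS between tries. Returns 0 on success, 1 if every try failed.
wait_for() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Only sleep when another attempt will follow.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Usage: poll the published hostname every 10 seconds, up to 30 times.
#   wait_for 30 10 curl -fsS -o /dev/null http://bookinfo.tse.tetratelabs.io/
```

The attempt count and delay shown in the usage comment are arbitrary; adjust them to the provisioning times you observe in your own account.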

What happened?

When you apply the Gateway configuration, two things happen:

- TSE configures the selected Ingress Gateway instance ingress-gw-cluster-1 to listen for traffic that matches the hostname and to load-balance it across local instances of the bookinfo/productpage service

- TSE's AWS Controller notices that a new DNS name is required and adds an entry for the hostname to a matching Hosted Zone (if one exists)

Note: AWS Controller defaults to a Simple Routing Policy, which is appropriate when a service is published from a single cluster. This exercise uses a Weighted Routing Policy instead, so the following annotations were added to the Gateway resource for the service:

metadata:
  annotations:
    route53.v1beta1.tetrate.io/aws-weight: "50" # this annotation indicates that 'weighted' should be used
    route53.v1beta1.tetrate.io/set-identifier: cluster-1 # the identifier should be unique for each cluster (target)
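With Route 53 weighted routing, each record is returned with a probability equal to its weight divided by the sum of the weights of all records that share the DNS name. The sketch below works through that arithmetic; the function name weight_share is hypothetical, and any weights other than the "50" used in this exercise are illustrative values only.

```shell
# weight_share MY_WEIGHT ALL_WEIGHTS...
# Prints the expected percentage of DNS responses (integer, rounded down)
# for a record with MY_WEIGHT, given the weights of every record in the set
# (ALL_WEIGHTS must include MY_WEIGHT itself).
weight_share() {
  local mine="$1" total=0 w
  shift
  for w in "$@"; do
    total=$((total + w))
  done
  if [ "$total" -eq 0 ]; then
    echo "total weight is zero" >&2
    return 1
  fi
  echo $((mine * 100 / total))
}
```

For example, weight_share 50 50 50 reports 50: when two records both carry a weight of 50, each is expected to receive about half of the responses. While only one weighted record exists, weight_share 50 50 reports 100.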

Deploy and expose Bookinfo in a second cluster

Once bookinfo is running and published from a single cluster, it's straightforward to deploy it in a second cluster and load-balance across both:

- Deploy bookinfo in the second cluster cluster-2

- Deploy an Ingress Gateway instance in cluster-2

- Create a Gateway Group to manage the gateway configuration in cluster-2

- Instruct TSE to expose the bookinfo/productpage service using the same DNS name

When using kubectl, make sure that the context points to the second cluster.
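A small guard can catch the mistake of applying the next steps to the wrong cluster. This is a sketch only: the function name require_context is hypothetical, and the usage line assumes that the kubectl context for the second cluster is named cluster-2, which may not match your kubeconfig.

```shell
# require_context EXPECTED ACTUAL
# Succeeds when the two context names are identical; otherwise prints a
# message to stderr and returns 1.
require_context() {
  if [ "$1" != "$2" ]; then
    echo "kubectl context is '$2', expected '$1'" >&2
    return 1
  fi
}

# Usage (assumes the second cluster's context is named cluster-2):
#   require_context cluster-2 "$(kubectl config current-context)" || exit 1
```

Passing the current context in as an argument keeps the check independent of kubectl, so the same helper can be reused for cluster-1.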

Deploy bookinfo in cluster-2

Deploy the application in cluster-2:

kubectl create namespace bookinfo

kubectl label namespace bookinfo istio-injection=enabled

kubectl apply -n bookinfo -f https://raw.githubusercontent.com/istio/istio/master/samples/bookinfo/platform/kube/bookinfo.yaml

Deploy an Ingress Gateway instance in cluster-2

Deploy an Ingress Gateway instance in cluster-2, in the bookinfo namespace:

cat <<EOF > ingress-gw-cluster-2.yaml
apiVersion: install.tetrate.io/v1alpha1
kind: IngressGateway
metadata:
  name: ingress-gw-cluster-2
  namespace: bookinfo
spec:
  kubeSpec:
    service:
      type: LoadBalancer
EOF

kubectl apply -f ingress-gw-cluster-2.yaml

Create a Gateway Group

Create a TSE Gateway Group for gateway configuration in cluster-2 used by the Workspace:

cat <<EOF > bookinfo-gw-group-2.yaml
apiVersion: gateway.tsb.tetrate.io/v2
kind: Group
metadata:
  displayName: bookinfo-gw-group-2
  name: bookinfo-gwgroup-2
  organization: tse
  tenant: tse
  workspace: bookinfo-ws
spec:
  namespaceSelector:
    names:
      - "cluster-2/bookinfo"
EOF

tctl apply -f bookinfo-gw-group-2.yaml

Note that the Gateway Group bookinfo-gwgroup-2 is specific to cluster-2.

Instruct TSE to expose the bookinfo/productpage service using the same DNS name

Instruct TSE to expose the bookinfo/productpage service using the DNS name bookinfo.tse.tetratelabs.io:

cat <<EOF > bookinfo-ingress-cluster-2.yaml
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  annotations:
    route53.v1beta1.tetrate.io/aws-weight: "50"
    route53.v1beta1.tetrate.io/set-identifier: cluster-2
  organization: tse
  tenant: tse
  group: bookinfo-gwgroup-2
  workspace: bookinfo-ws
  name: bookinfo-ingress-2
spec:
  workloadSelector:
    namespace: bookinfo
    labels:
      app: ingress-gw-cluster-2
  http:
    - name: bookinfo
      port: 80
      hostname: bookinfo.tse.tetratelabs.io
      routing:
        rules:
          - route:
              serviceDestination:
                host: bookinfo/productpage.bookinfo.svc.cluster.local
                port: 9080
EOF

tctl apply -f bookinfo-ingress-cluster-2.yaml

When you apply the Gateway configuration, TSE's AWS Controller adds a second weighted A record for the desired hostname. Route 53 then serves responses for both clusters, choosing between them in proportion to the configured weights (an equal split in this exercise).

Test the Configuration

You can select one of the authoritative name servers for the hosted zone tse.tetratelabs.io; these are listed in the NS record for the zone. Querying an authoritative server directly avoids cached answers from intermediate resolvers. Repeat the query several times, and you should see the responses switch between the IP addresses used by each cluster:

dig A bookinfo.tse.tetratelabs.io @ns-1602.awsdns-08.co.uk | \

grep "^bookinfo.tse.tetratelabs.io." | cut -c 38- | sort | paste -sd ' ' -

The output should alternate between the addresses from each cluster.
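To see how the responses are distributed over many queries, the dig output can be parsed by field instead of by character column, and the results tallied. The following is a sketch under stated assumptions: the function names answer_addresses and tally_responses are hypothetical, and the parser relies on dig's standard answer layout of name, TTL, class, type, and address.

```shell
# answer_addresses NAME
# Reads full dig output on stdin and prints the A-record addresses for NAME,
# sorted and joined on one line (one line per DNS response).
answer_addresses() {
  awk -v name="$1." '$1 == name && $4 == "A" { print $5 }' \
    | LC_ALL=C sort | paste -sd ' ' -
}

# tally_responses
# Reads one response per line on stdin and prints "COUNT RESPONSE" for each
# distinct response.
tally_responses() {
  awk 'NF { count[$0]++ } END { for (r in count) print count[r], r }' \
    | LC_ALL=C sort -k2
}

# Usage (the name server is the one used above; 20 queries is arbitrary):
#   for i in $(seq 1 20); do
#     dig A bookinfo.tse.tetratelabs.io @ns-1602.awsdns-08.co.uk \
#       | answer_addresses bookinfo.tse.tetratelabs.io
#   done | tally_responses
```

With equal weights, the two clusters' address sets should appear a roughly similar number of times, although small samples can be noticeably uneven.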

What have we seen?

In summary:

- We deployed the bookinfo application into each cluster, using the same bookinfo namespace

- We deployed a TSE Workspace that spanned both clusters (*/bookinfo)

- For each cluster, we:

- Deployed an Ingress Gateway instance in the cluster, in the bookinfo namespace

- Created a Gateway Group to manage the gateway configuration

- Instructed TSE to expose the bookinfo/productpage service through the Ingress Gateway, using a shared DNS name

TSE configured Route 53 DNS appropriately to load-balance across each active cluster.
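The two Gateway resources used in this exercise differ only in the cluster number and, potentially, the weight. The sketch below generates the manifest from those two values to make that symmetry explicit. The function name render_gateway is hypothetical; the resource fields and naming scheme are copied from the manifests applied earlier.

```shell
# render_gateway INDEX WEIGHT
# Prints the Gateway manifest for cluster-INDEX with the given Route 53 weight.
render_gateway() {
  local n="$1" weight="$2"
  cat <<EOF
apiVersion: gateway.tsb.tetrate.io/v2
kind: Gateway
metadata:
  annotations:
    route53.v1beta1.tetrate.io/aws-weight: "${weight}"
    route53.v1beta1.tetrate.io/set-identifier: cluster-${n}
  organization: tse
  tenant: tse
  group: bookinfo-gwgroup-${n}
  workspace: bookinfo-ws
  name: bookinfo-ingress-${n}
spec:
  workloadSelector:
    namespace: bookinfo
    labels:
      app: ingress-gw-cluster-${n}
  http:
    - name: bookinfo
      port: 80
      hostname: bookinfo.tse.tetratelabs.io
      routing:
        rules:
          - route:
              serviceDestination:
                host: bookinfo/productpage.bookinfo.svc.cluster.local
                port: 9080
EOF
}

# Usage:
#   render_gateway 1 50 > bookinfo-ingress-cluster-1.yaml
#   tctl apply -f bookinfo-ingress-cluster-1.yaml
```

Keeping the hostname fixed while varying only the set identifier and weight is what allows the AWS Controller to group the records under one shared DNS name.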

Further Configuration

Route 53 provides a range of methods (policies) to control the DNS entries served by the AWS nameservers. You can configure TSE's behavior in detail, using the AWS Provider Annotations supported by TSE.

High-Availability with Edge Gateways

For large-scale deployments, TSE also supports two-tier load balancing, with Edge Gateways to provide front-end load-balancing for multiple workload clusters.

HA two-tier load balancing may also require the GSLB solution described here to distribute traffic across the Edge Gateways.

Cleaning Up

You can remove the configuration created by this exercise. This action will also cause the AWS Controller to delete the DNS records it has created.

The following commands delete all configuration, including the bookinfo installs and all TSE configuration.

On cluster-2:

kubectl delete ingressgateway -n bookinfo ingress-gw-cluster-2

kubectl delete -n bookinfo -f https://raw.githubusercontent.com/istio/istio/master/samples/bookinfo/platform/kube/bookinfo.yaml

kubectl delete namespace bookinfo

On cluster-1:

kubectl delete ingressgateway -n bookinfo ingress-gw-cluster-1

kubectl delete -n bookinfo -f https://raw.githubusercontent.com/istio/istio/master/samples/bookinfo/platform/kube/bookinfo.yaml

kubectl delete namespace bookinfo

Wait several seconds, then remove the TSE configuration:

tctl delete gwt --org tse --tenant tse --workspace bookinfo-ws --group bookinfo-gwgroup-1 bookinfo-ingress-1

tctl delete gwt --org tse --tenant tse --workspace bookinfo-ws --group bookinfo-gwgroup-2 bookinfo-ingress-2

tctl delete gg --org tse --tenant tse --workspace bookinfo-ws bookinfo-gwgroup-1

tctl delete gg --org tse --tenant tse --workspace bookinfo-ws bookinfo-gwgroup-2

tctl delete ws --org tse --tenant tse bookinfo-ws